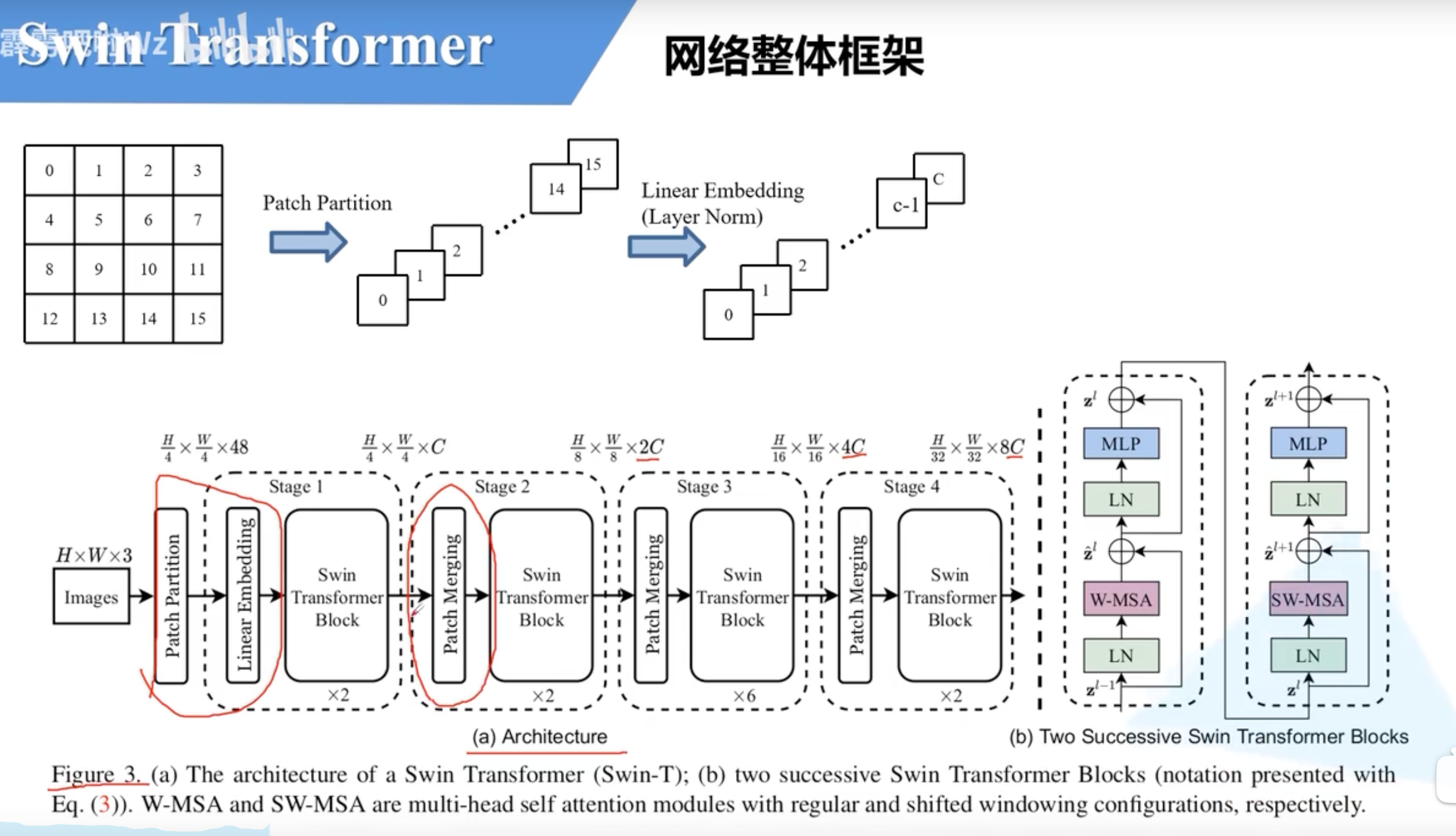

Swin Transformer architecture

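What is layer norm?

Layer normalization (LayerNorm) normalizes the intermediate activations of each token across its feature (channel) dimension to zero mean and unit variance, then applies a learned per-channel scale and shift. It gives smoother gradients, faster training, and better generalization accuracy.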
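A minimal pure-Python sketch of LayerNorm applied to one token's feature vector. The names `gamma` and `beta` (learned scale and shift) and the `eps` stability constant are our own illustrative choices. Each token is normalized independently of every other token:

```python
import math


def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalize one token's feature vector x across its C channels.

    gamma and beta are the learnable per-channel scale and shift.
    """
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n  # biased (population) variance
    return [g * (v - mean) / math.sqrt(var + eps) + b
            for v, g, b in zip(x, gamma, beta)]


# Two tokens with C = 4 channels; identity scale and zero shift.
tokens = [[1.0, 2.0, 3.0, 4.0], [10.0, 10.0, 10.0, 14.0]]
C = 4
gamma, beta = [1.0] * C, [0.0] * C
out = [layer_norm(t, gamma, beta) for t in tokens]
print([round(v, 3) for v in out[0]])  # [-1.342, -0.447, 0.447, 1.342]
```

Both output rows end up with (approximately) zero mean and unit variance, regardless of the original scale of each token.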

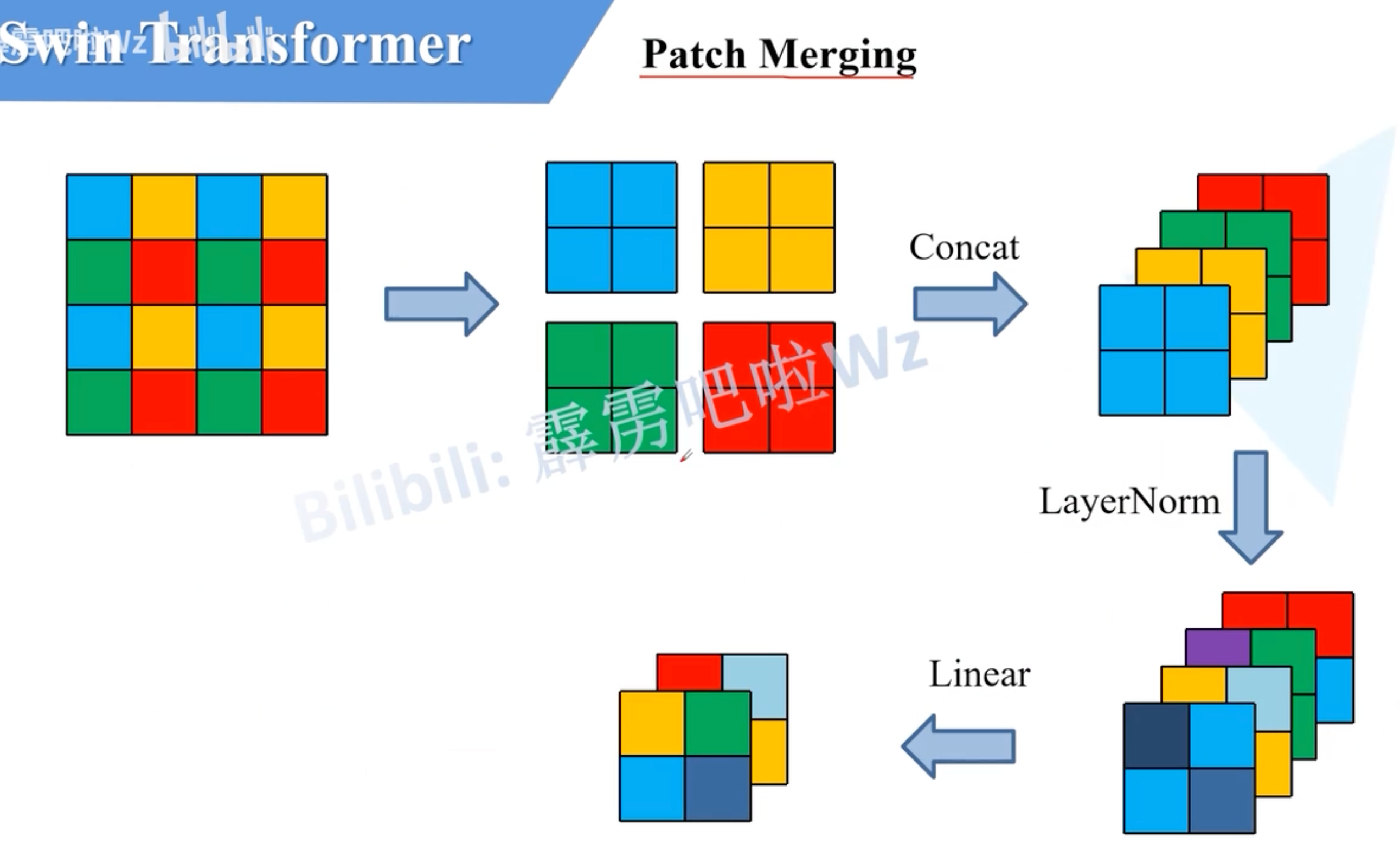

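What is patch merging?

It is a down-sampling step, playing a role similar to pooling in a CNN.

Each 2 × 2 group of neighbouring patches is concatenated along the channel dimension (C → 4C), and a linear layer then reduces this to 2C. The spatial resolution is halved and the number of channels is doubled.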
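A minimal pure-Python sketch of 2 × 2 patch merging on a grid of patches stored as nested lists. The concatenation order and the `linear` helper with its weights are illustrative assumptions; in a real model the 4C → 2C projection is learned:

```python
def patch_merge(x):
    """2 x 2 patch merging: (H, W, C) -> (H/2, W/2, 4C).

    x[i][j] is the list of C channel values of the patch at row i, column j.
    The four neighbours are concatenated in a fixed (here arbitrary) order.
    """
    H, W = len(x), len(x[0])
    assert H % 2 == 0 and W % 2 == 0, "H and W must be even"
    return [[x[i][j] + x[i + 1][j] + x[i][j + 1] + x[i + 1][j + 1]
             for j in range(0, W, 2)]
            for i in range(0, H, 2)]


def linear(v, weight):
    """Hypothetical bias-free linear layer: weight has 2C rows of length 4C."""
    return [sum(w * a for w, a in zip(row, v)) for row in weight]


# 4 x 4 grid with C = 1; each patch stores 10*row + col as its single channel.
x = [[[10 * i + j] for j in range(4)] for i in range(4)]
merged = patch_merge(x)                  # 2 x 2 grid, 4 channels per patch
print(merged[0][0])                      # [0, 10, 1, 11]
weight = [[0.25, 0.25, 0.25, 0.25],      # made-up weights: 4C = 4 -> 2C = 2
          [1.0, -1.0, 0.0, 0.0]]
print(linear(merged[0][0], weight))      # [5.5, -10.0]
```

Because the following linear layer is learned, any fixed concatenation order works equally well.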

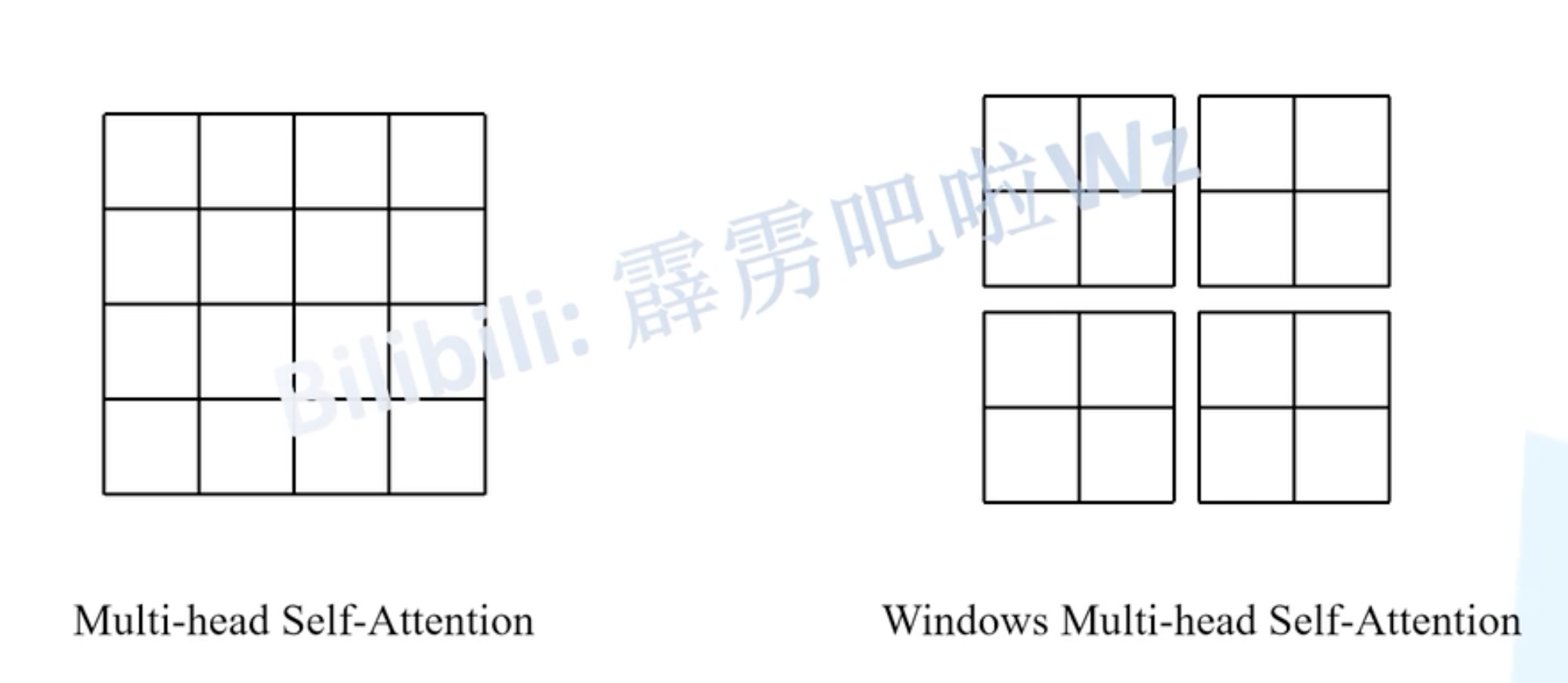

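What is window multi-head self-attention (W-MSA)?

Compared to vanilla multi-head self-attention, where every patch attends to every other patch in the image, W-MSA only performs self-attention locally, within each non-overlapping window (group) of patches.

Advantages:

Computationally efficient: the cost grows linearly with the number of patches instead of quadratically (splitting the work into independent groups is similar in spirit to ResNeXt).

Limitation:

There is no communication across windows.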
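A minimal pure-Python sketch of how tokens are grouped into windows, plus the cost expressions given in the Swin paper for h × w patches with C channels and window size M: Ω(MSA) = 4hwC² + 2(hw)²C and Ω(W-MSA) = 4hwC² + 2M²hwC (softmax ignored). The sizes in the example (56 × 56 patches, C = 96, M = 7) are chosen only for illustration:

```python
def window_partition(x, M):
    """Split an H x W grid of tokens into non-overlapping M x M windows.

    Returns a list of windows (row-major order); each window is a flat list
    of M*M tokens, the only tokens that attend to each other in W-MSA.
    """
    H, W = len(x), len(x[0])
    assert H % M == 0 and W % M == 0, "H and W must be multiples of M"
    return [[x[i + di][j + dj] for di in range(M) for dj in range(M)]
            for i in range(0, H, M) for j in range(0, W, M)]


def msa_cost(h, w, C):
    # Global MSA: the second term is quadratic in the number of patches h*w.
    return 4 * h * w * C**2 + 2 * (h * w) ** 2 * C


def wmsa_cost(h, w, C, M):
    # Window MSA: linear in h*w for a fixed window size M.
    return 4 * h * w * C**2 + 2 * M**2 * h * w * C


# 4 x 4 grid of patch coordinates, split into 2 x 2 windows.
grid = [[(i, j) for j in range(4)] for i in range(4)]
windows = window_partition(grid, 2)
print(len(windows))   # 4
print(windows[0])     # [(0, 0), (0, 1), (1, 0), (1, 1)]

# Illustrative sizes: 56 x 56 patches, C = 96, M = 7.
print(msa_cost(56, 56, 96), wmsa_cost(56, 56, 96, 7))
```

Since no token in one window ever appears in another, the limitation above is visible directly in the partition: nothing connects `windows[0]` to `windows[1]`.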

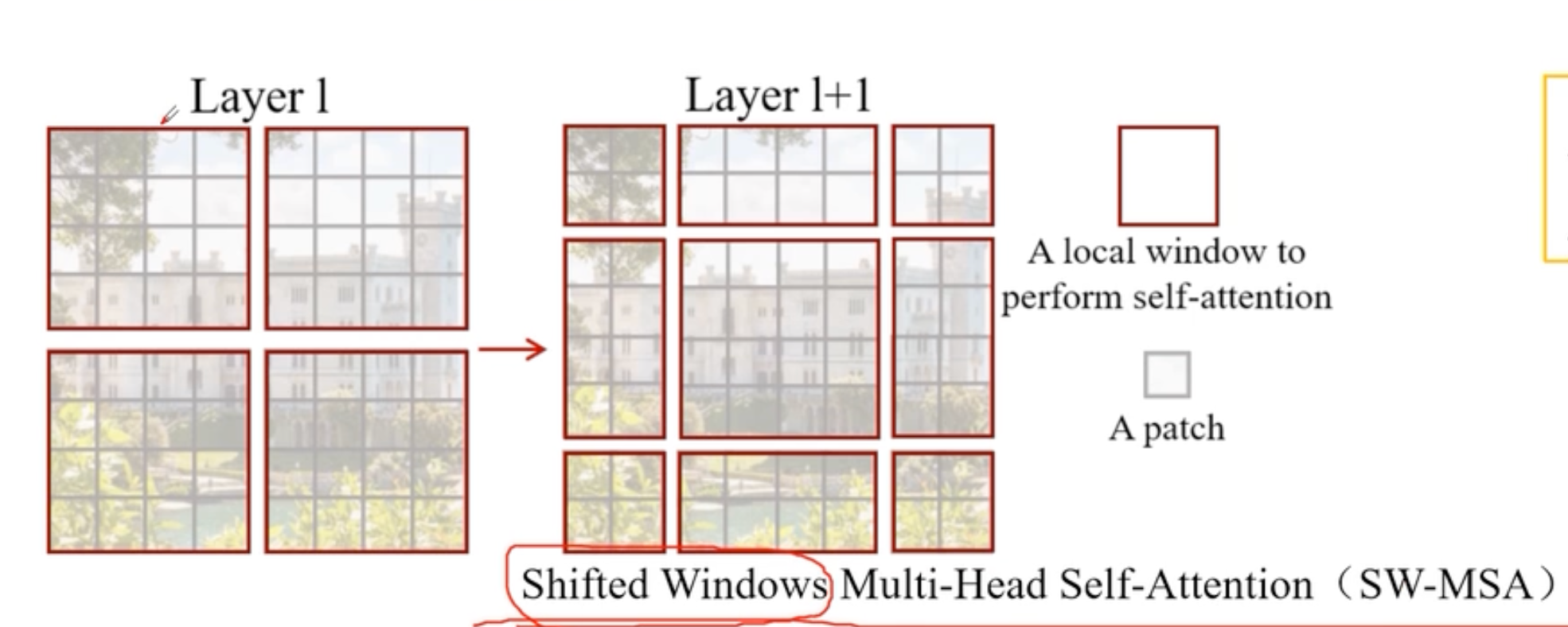

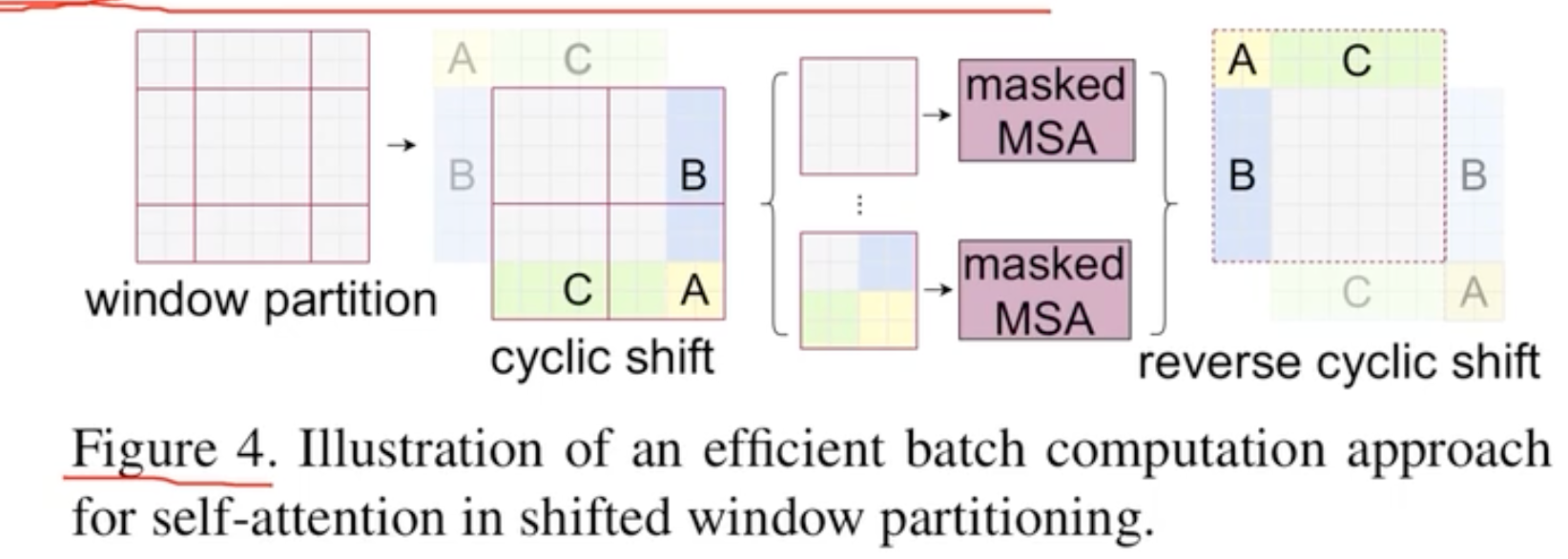

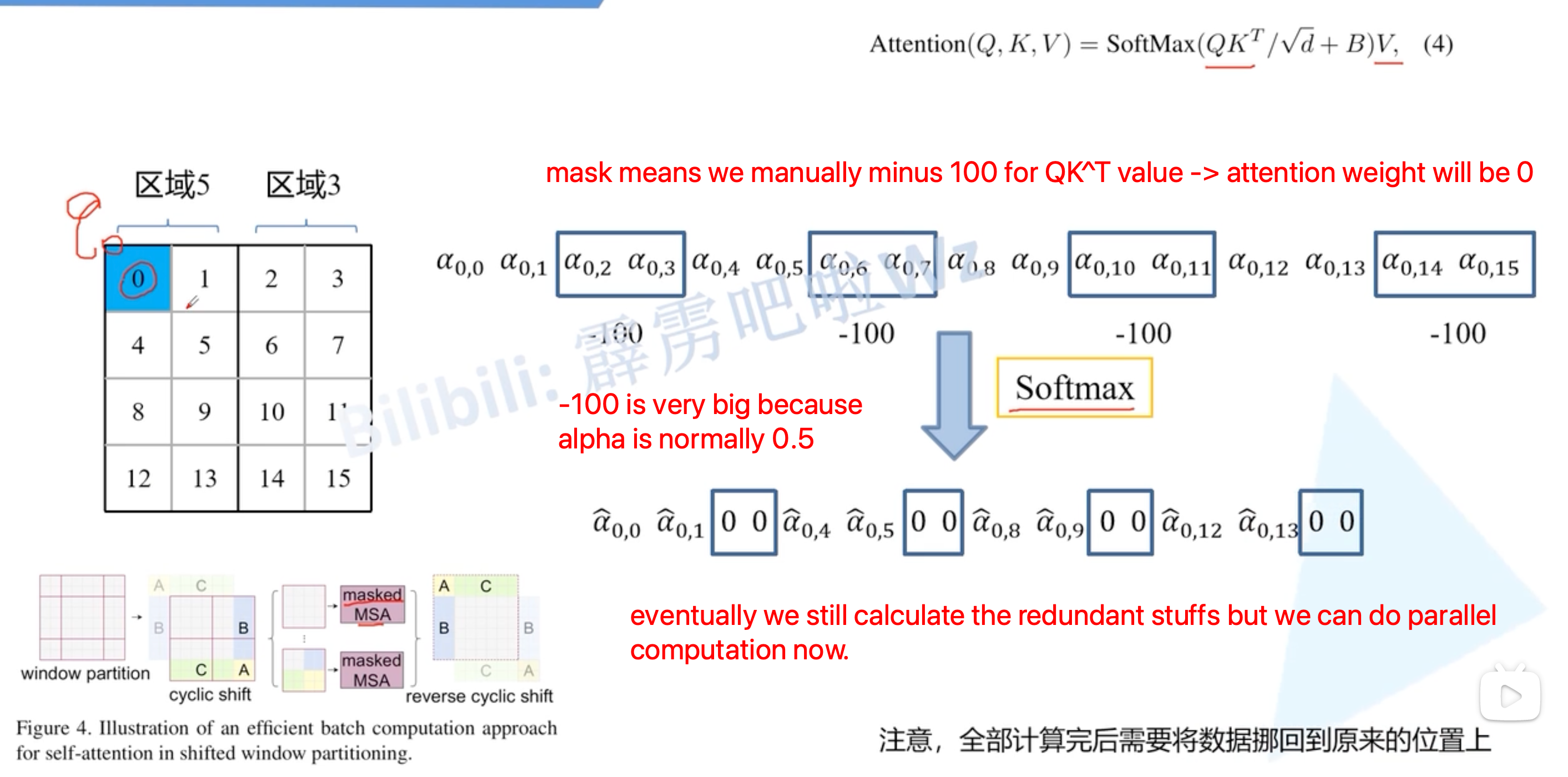

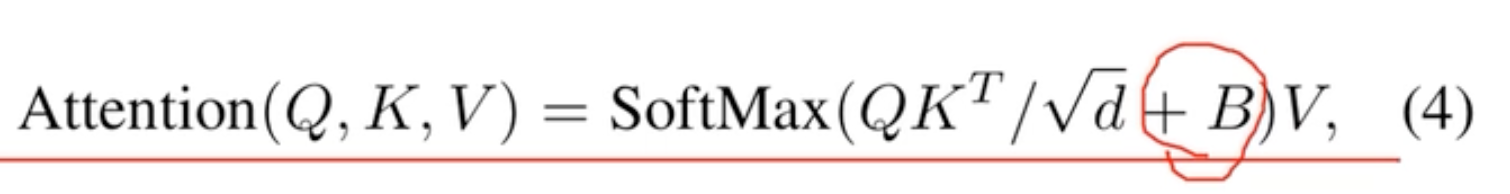

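What is shifted window MSA?

Shifting the window partition (by half a window) in alternate blocks allows communication across neighbouring windows.

However, in the example shown, the shifted partition produces 9 windows, some of them smaller, instead of 4.

To compute the windows in parallel as one batch, we need to get back to 4 equally sized windows.

Solution:

Cyclically shift the feature map toward the top-left so that the 9 windows are packed into 4 full-size windows, then add an attention mask so that each patch only attends to patches that were its actual neighbours (from the same sub-window) before the shift. After attention, the shift is reversed.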
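A minimal pure-Python sketch of the cyclic shift and the attention mask, assuming a square-ish grid, window size `M`, and shift `s` (e.g. `M // 2`). The function names are hypothetical, and the value `-100` for blocked pairs is our choice of "large negative number", which makes their softmax weight effectively zero:

```python
def cyclic_shift(x, s):
    """Cyclically roll an H x W grid up and to the left by s positions."""
    H, W = len(x), len(x[0])
    return [[x[(i + s) % H][(j + s) % W] for j in range(W)] for i in range(H)]


def window_partition(x, M):
    """Split an H x W grid into non-overlapping M x M windows (flat lists)."""
    H, W = len(x), len(x[0])
    return [[x[i + di][j + dj] for di in range(M) for dj in range(M)]
            for i in range(0, H, M) for j in range(0, W, M)]


def region_labels(H, W, M, s):
    """Label every position of the shifted grid with the sub-window it belongs to."""
    def band(k, n):
        if k < n - M:   # windows untouched by the wrap-around
            return 0
        if k < n - s:   # last window, part that was not wrapped
            return 1
        return 2        # strip of width s that wrapped around from the other side
    return [[3 * band(i, H) + band(j, W) for j in range(W)] for i in range(H)]


def shifted_window_masks(H, W, M, s, neg=-100.0):
    """One (M*M x M*M) additive mask per window: 0 = may attend, neg = blocked."""
    masks = []
    for win in window_partition(region_labels(H, W, M, s), M):
        masks.append([[0.0 if a == b else neg for b in win] for a in win])
    return masks


# Shifting a 4 x 4 grid by 1: the top-left value wraps to the bottom-right.
g = [[10 * i + j for j in range(4)] for i in range(4)]
shifted = cyclic_shift(g, 1)
print(shifted[0][0], shifted[3][3])   # 11 0

# 8 x 8 patches, M = 4, s = 2: still 4 windows after the cyclic shift.
masks = shifted_window_masks(8, 8, 4, 2)
print(len(masks))                      # 4
```

The top-left window needs no masking (all zeros), while the bottom-right window mixes four sub-windows, so only pairs inside the same sub-window keep a zero entry.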

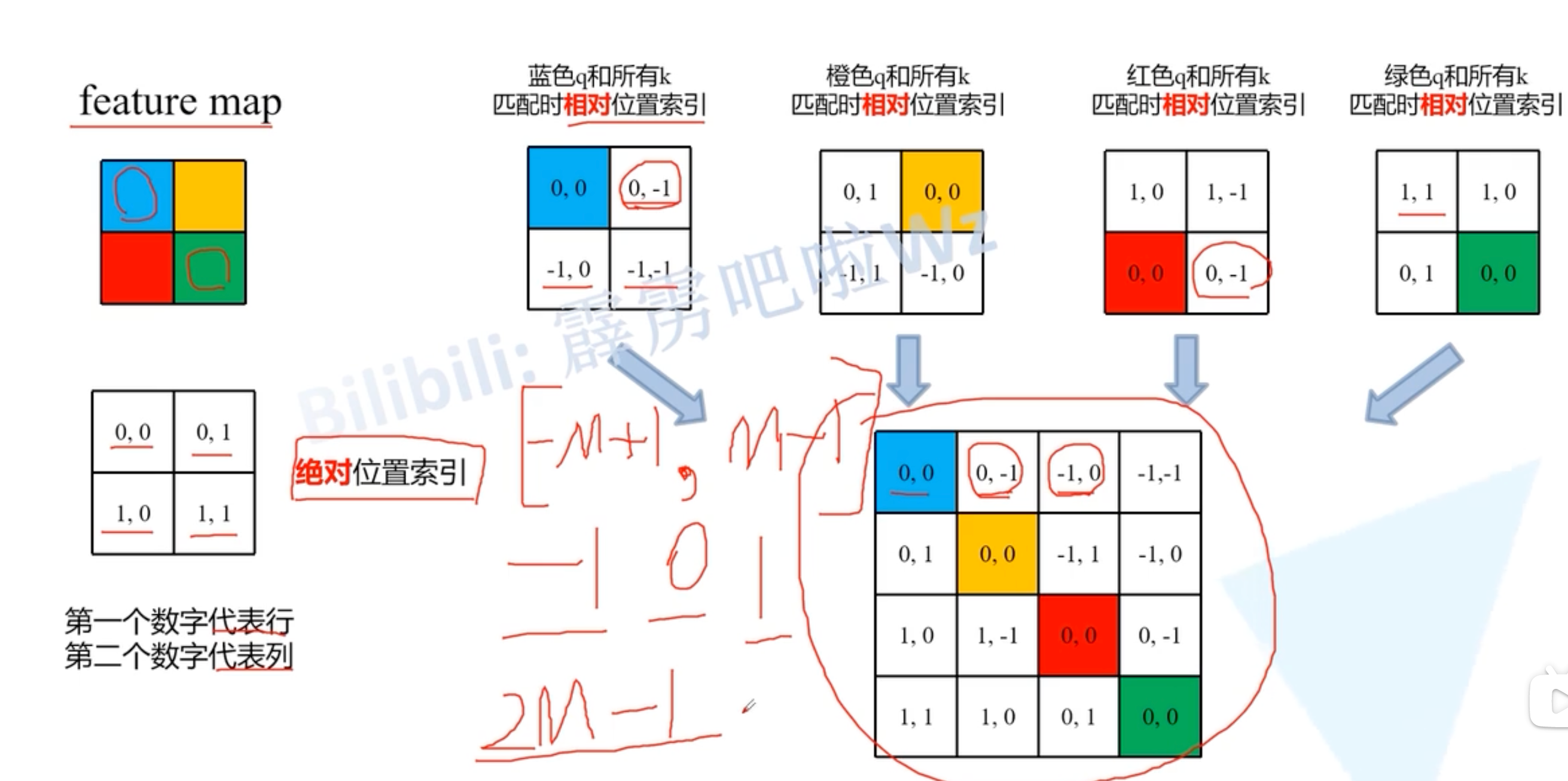

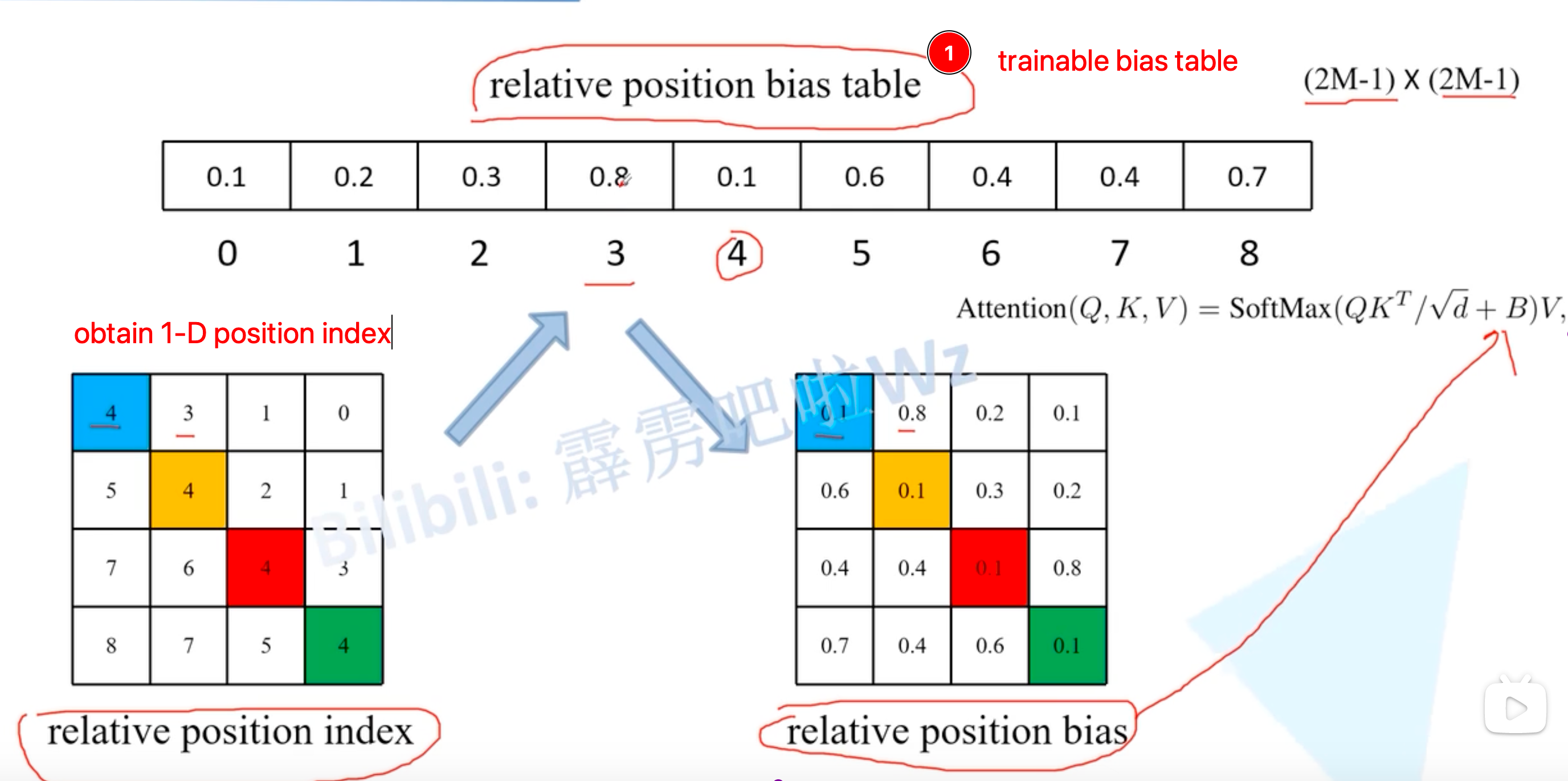

What is relative position bias?

Unlike a CNN, self-attention has no built-in notion of where each patch is (it is permutation-invariant), so positional information must be added to the model explicitly.

- However, absolute position embeddings are less effective than a relative position bias term, which is added to the attention logits inside each window.

- Positions are labelled relative to the current query patch, so the bias depends only on the offset between the query patch and the key patch.

There is no need to know the details of this relative position bias term.
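For readers who do want a look, here is a minimal pure-Python sketch of how the bias can be indexed. It assumes attention of the form SoftMax(QK^T / √d + B)V inside each M × M window, with B looked up from a table of (2M − 1)² values per head; the function names and the example table values are hypothetical stand-ins for learned parameters:

```python
def relative_position_index(M):
    """(M*M x M*M) table index for every (query, key) pair in an M x M window.

    The offset (dy, dx) = query - key lies in [-(M-1), M-1]^2; shifting it to
    be non-negative and flattening gives an index into a table of
    (2M - 1)^2 biases.
    """
    coords = [(y, x) for y in range(M) for x in range(M)]
    return [[(qy - ky + M - 1) * (2 * M - 1) + (qx - kx + M - 1)
             for (ky, kx) in coords]
            for (qy, qx) in coords]


def relative_position_bias(table, M):
    """Look up the bias matrix B that is added to QK^T / sqrt(d) before softmax."""
    return [[table[k] for k in row] for row in relative_position_index(M)]


M = 2
table = [0.1 * k for k in range((2 * M - 1) ** 2)]  # stand-in for learned values
idx = relative_position_index(M)
B = relative_position_bias(table, M)
print(idx[0])  # [4, 3, 1, 0]
```

Two (query, key) pairs with the same offset share one table entry, which is exactly the "labelled relative to the query patch" idea: for example, `idx[0][1] == idx[2][3]` because both keys sit one step to the right of their query.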