Alekh Agarwal, PC-PG: Policy Cover Directed Exploration for Provable Policy Gradient Learning, 2020

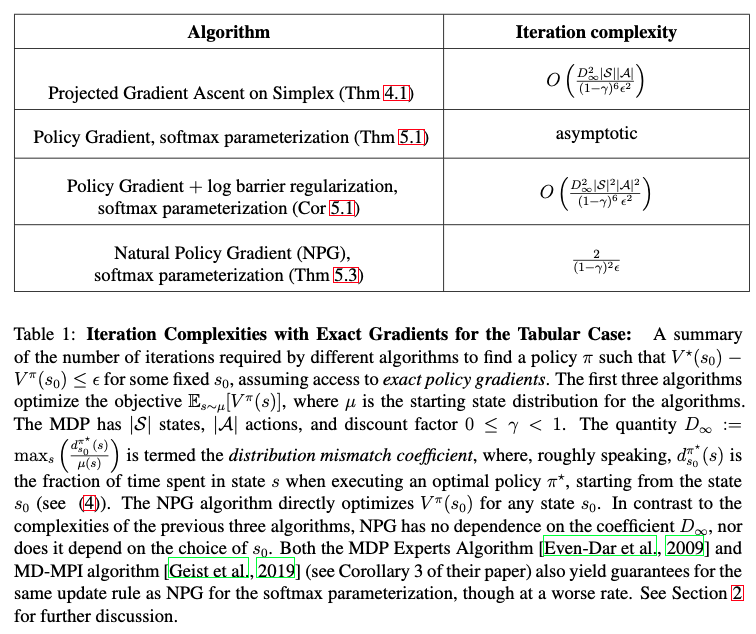

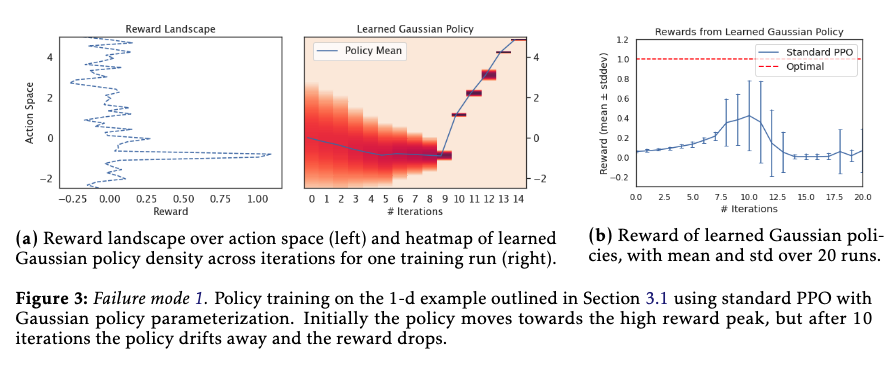

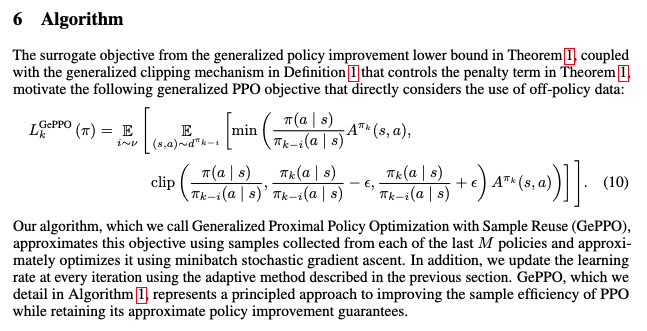

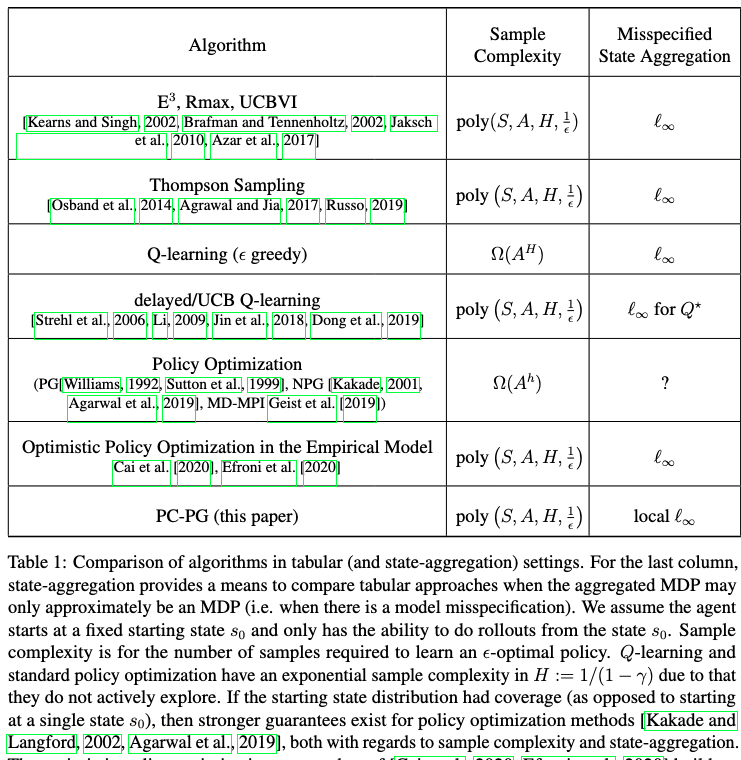

Title: PC-PG: Policy Cover Directed Exploration for Provable Policy Gradient Learning

Author: Alekh Agarwal et al.

Publish Year: 2020

Review Date: Wed, Dec 28, 2022

Summary of paper

Motivation

The primary drawback of direct policy gradient methods is that, being local in nature, they fail to explore the environment adequately. In contrast, model-based approaches and Q-learning handle exploration directly through the use of optimism.

Contribution

The paper introduces the Policy Cover-Policy Gradient algorithm (PC-PG), a direct, model-free policy optimisation approach that addresses exploration through a learned ensemble of policies; this ensemble provides a policy cover over the state space.

The learned policy cover addresses exploration, and it also addresses the catastrophic forgetting problem in policy gradient approaches that use reward bonuses. Because the algorithm is on-policy, it avoids the regime where approximation errors due to model misspecification amplify (see [Lu et al., 2018] for discussion).

Some key terms

suffering from sparse reward ...
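To make the policy cover idea from the Contribution part more concrete, here is a minimal toy sketch in Python. It is not the paper's algorithm: the 5-state chain environment, the 0/1 bonus on unvisited state-action pairs, and all function names are assumptions made for illustration. In particular, the `plan` step uses value iteration on the known toy dynamics as a stand-in for the model-free policy gradient update that PC-PG actually performs.

```python
# Toy illustration only: a deterministic chain MDP, not the paper's setting.
N_STATES, N_ACTIONS, HORIZON, GAMMA = 5, 2, 20, 0.9


def step(state, action):
    """Action 0 moves left, action 1 moves right; the chain ends act as walls."""
    return max(state - 1, 0) if action == 0 else min(state + 1, N_STATES - 1)


def cover_counts(cover):
    """Count (state, action) visits when each policy in the cover is rolled out from state 0."""
    counts = [[0] * N_ACTIONS for _ in range(N_STATES)]
    for policy in cover:
        state = 0
        for _ in range(HORIZON):
            action = policy[state]
            counts[state][action] += 1
            state = step(state, action)
    return counts


def exploration_bonus(counts):
    """Hypothetical bonus: 1 on pairs the cover has never visited, 0 elsewhere."""
    return [[1.0 if c == 0 else 0.0 for c in row] for row in counts]


def plan(bonus, sweeps=200):
    """Greedy policy for the bonus-only reward, computed by value iteration.

    Assumption: planning with the known toy dynamics replaces the policy
    gradient update, which this sketch does not implement.
    """
    values = [0.0] * N_STATES
    for _ in range(sweeps):
        values = [
            max(bonus[s][a] + GAMMA * values[step(s, a)] for a in range(N_ACTIONS))
            for s in range(N_STATES)
        ]

    def q_value(s, a):
        return bonus[s][a] + GAMMA * values[step(s, a)]

    # max() returns the first maximal action, so ties go to action 0 (left).
    return [max(range(N_ACTIONS), key=lambda a: q_value(s, a)) for s in range(N_STATES)]


def build_cover(n_rounds=10):
    """Grow the cover: each round adds a policy that seeks pairs the cover has missed."""
    cover = [[0] * N_STATES]  # start with a policy that always moves left
    for _ in range(n_rounds):
        bonus = exploration_bonus(cover_counts(cover))
        if not any(any(row) for row in bonus):
            break  # every state-action pair is already covered
        cover.append(plan(bonus))
    return cover


cover = build_cover()
counts = cover_counts(cover)
visited_states = [s for s in range(N_STATES) if sum(counts[s]) > 0]
print(len(cover), visited_states)
```

The point of the sketch is that the bonus is computed from the whole ensemble, not only from the latest policy. Earlier policies stay in the cover, so regions they reached remain counted as visited even after a newer policy moves elsewhere, which is the intuition behind the cover's role in avoiding catastrophic forgetting.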