Tom_everitt Reinforcement Learning With a Corrupted Reward Channel 2017

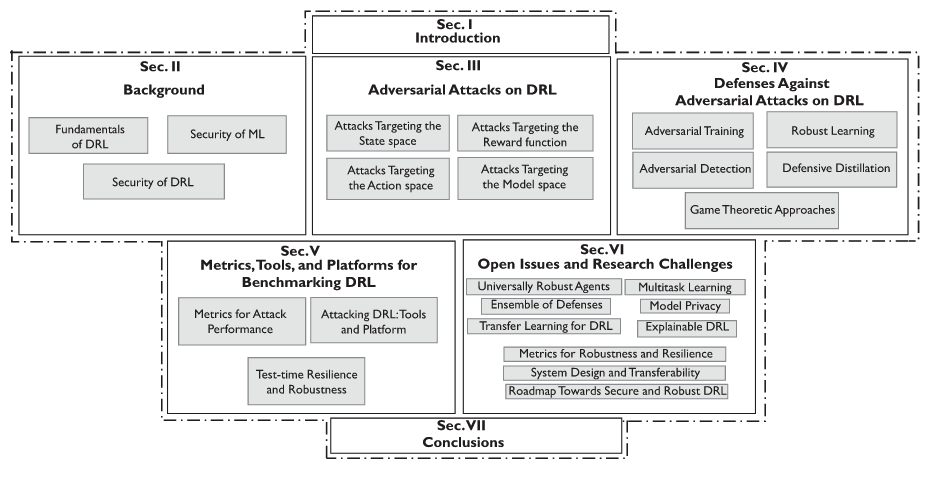

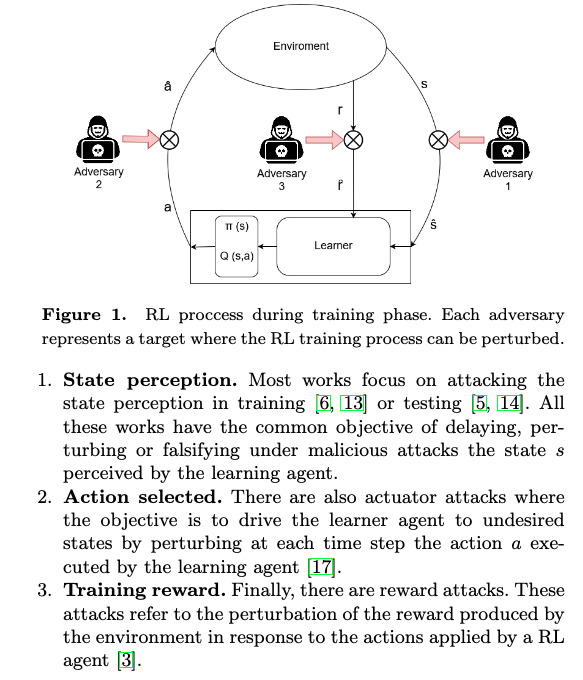

Title: Reinforcement Learning With a Corrupted Reward Channel

Author: Tom Everitt

Publish Year: August 22, 2017

Review Date: Mon, Dec 26, 2022

Summary of paper

Motivation

The paper formalises the problem of a corrupted reward channel as a generalised Markov Decision Problem called a Corrupt Reward MDP (CRMDP).

Traditional RL methods fare poorly in CRMDPs, even under strong simplifying assumptions and even when they try to compensate for the possibly corrupt rewards.

Contribution

Two ways around the problem are investigated.

First, by giving the agent richer data, such as in inverse reinforcement learning and semi-supervised reinforcement learning, reward corruption stemming from systematic sensory errors may sometimes be completely managed.

Second, by using randomisation to blunt the agent's optimisation, reward corruption can be partially managed under some assumptions.

Limitation

...
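
Returning to the second contribution above (randomisation), the following is a minimal toy sketch of the intuition, not the paper's algorithm. The reward tables, the threshold value, and all function names are hypothetical and chosen only for illustration. It assumes a handful of states where one state reports a high reward but is actually bad, and compares an agent that always maximises observed reward with one that picks uniformly among all states whose observed reward clears a threshold.

```python
import random

# Hypothetical toy data: true vs. observed reward for five states.
# State 4 is "corrupt": it looks best to the agent but is actually worst.
true_reward = [0.8, 0.7, 0.9, 0.75, 0.0]
observed_reward = [0.8, 0.7, 0.9, 0.75, 1.0]


def greedy_choice(observed):
    """Return the state with the highest observed reward."""
    return max(range(len(observed)), key=lambda s: observed[s])


def randomised_candidates(observed, threshold):
    """Return all states whose observed reward is at least `threshold`."""
    return [s for s, r in enumerate(observed) if r >= threshold]


def randomised_choice(observed, threshold, rng=random):
    """Pick uniformly at random among the sufficiently good-looking states."""
    return rng.choice(randomised_candidates(observed, threshold))


def expected_true_reward(candidates, true):
    """Average true reward when choosing uniformly among `candidates`."""
    return sum(true[s] for s in candidates) / len(candidates)


greedy_state = greedy_choice(observed_reward)
candidates = randomised_candidates(observed_reward, threshold=0.7)

print("greedy picks state", greedy_state,
      "with true reward", true_reward[greedy_state])
print("randomised agent's expected true reward:",
      expected_true_reward(candidates, true_reward))
```

In this toy setup the greedy agent always lands on the corrupt state, while the randomising agent only reaches it with probability one in five, so its expected true reward is much higher. The benefit depends on the assumption that corrupt states are rare among the states that look good.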