Alexander_nikulin Anti Exploration by Random Network Distillation 2023

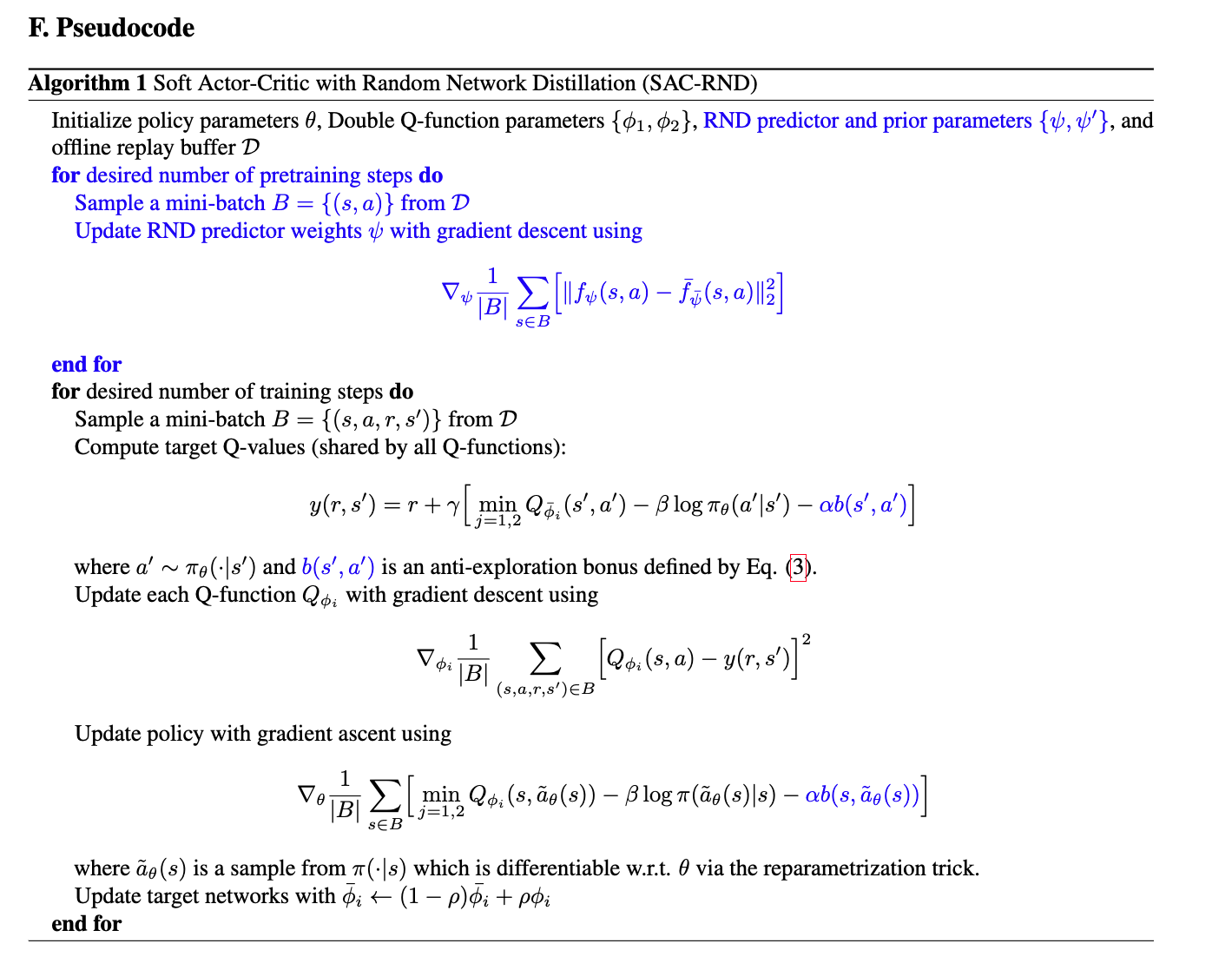

[TOC]

- Title: Anti Exploration by Random Network Distillation
- Author: Alexander Nikulin et al.
- Publish Date: 31 Jan 2023
- Review Date: Wed, Mar 1, 2023
- url: https://arxiv.org/pdf/2301.13616.pdf

Summary of paper

Motivation

Despite the success of Random Network Distillation (RND) in various domains, it was shown to be not discriminative enough to be used as an uncertainty estimator for penalizing out-of-distribution actions in offline reinforcement learning.

?? Wait, why do we want to penalize out-of-distribution actions?

Contribution

The paper shows that, with a naive choice of conditioning for the RND prior, it becomes infeasible for the actor to effectively minimize the anti-exploration bonus, and discriminativity is not an issue. This limitation can be avoided with conditioning based on Feature-wise Linear Modulation (FiLM), resulting in a simple and efficient ensemble-free algorithm based on Soft Actor-Critic.

Some key terms

why we want uncertainty-based penalization ...
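To make the FiLM-conditioned RND bonus mentioned under Contribution more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the helper names (`linear`, `init_params`, `film_net`, `rnd_bonus`), the layer sizes, and the choice to apply FiLM in both the prior and the predictor are assumptions made for illustration. The idea it shows is that the action modulates state features through a scale `gamma(a)` and a shift `beta(a)`, and the bonus is the squared error between a predictor and a frozen random prior.

```python
import random

def linear(rng, n_in, n_out):
    # Hypothetical helper: random weight matrix (n_out x n_in) and zero bias.
    w = [[rng.gauss(0.0, 1.0 / n_in ** 0.5) for _ in range(n_in)]
         for _ in range(n_out)]
    return w, [0.0] * n_out

def apply_linear(layer, x):
    w, b = layer
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi
            for row, bi in zip(w, b)]

def init_params(rng, state_dim, action_dim, hidden, out_dim):
    # Layer sizes are illustrative assumptions, not values from the paper.
    return {
        "state": linear(rng, state_dim, hidden),
        "gamma": linear(rng, action_dim, hidden),
        "beta": linear(rng, action_dim, hidden),
        "out": linear(rng, hidden, out_dim),
    }

def film_net(params, state, action):
    # State features, then FiLM: h <- gamma(a) * h + beta(a), then output layer.
    h = [max(0.0, v) for v in apply_linear(params["state"], state)]
    gamma = apply_linear(params["gamma"], action)
    beta = apply_linear(params["beta"], action)
    h = [g * v + b for g, v, b in zip(gamma, h, beta)]
    return apply_linear(params["out"], h)

def rnd_bonus(predictor, prior, state, action):
    # Anti-exploration bonus: squared error between predictor and frozen prior.
    pred = film_net(predictor, state, action)
    target = film_net(prior, state, action)
    return sum((p - t) ** 2 for p, t in zip(pred, target))

# Usage: an untrained predictor generally disagrees with the prior.
rng = random.Random(0)
prior = init_params(rng, state_dim=3, action_dim=2, hidden=8, out_dim=4)
predictor = init_params(rng, state_dim=3, action_dim=2, hidden=8, out_dim=4)
state, action = [0.1, -0.2, 0.3], [0.5, -0.5]
bonus = rnd_bonus(predictor, prior, state, action)
```

In RND, the predictor would be trained on dataset state-action pairs to match the prior, so the bonus stays small in-distribution and grows for unfamiliar actions; the training loop and the Soft Actor-Critic update are omitted here.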