Harsh_jhamtani Natural Language Decomposition and Interpretation of Complex Utterances 2023

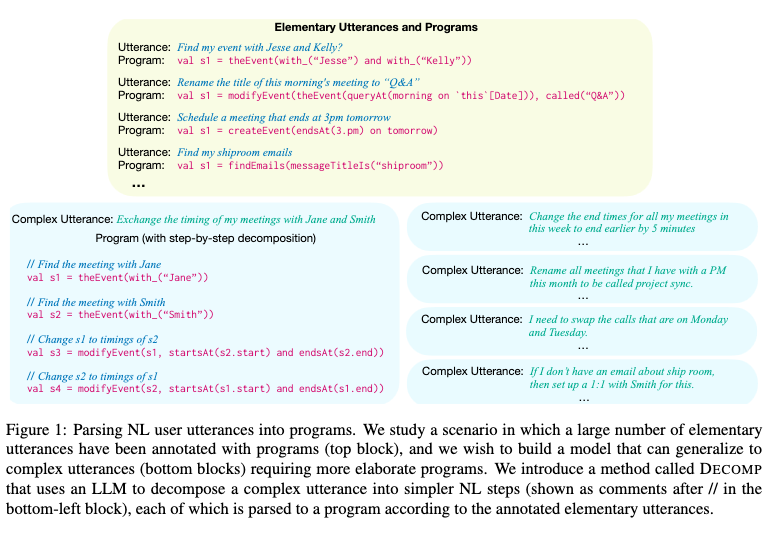

[TOC]

- Title: Natural Language Decomposition and Interpretation of Complex Utterances
- Author: Jacob Andreas
- Publish Year: 15 May 2023
- Review Date: Mon, May 22, 2023
- url: https://arxiv.org/pdf/2305.08677.pdf

Summary of paper

Motivation

Natural language interfaces often require supervised data to translate user requests into structured intent representations. However, during data collection, it can be difficult to anticipate and formalise the full range of user needs. We introduce an approach for equipping a simple language-to-code model to handle complex utterances via a process of hierarchical natural language decomposition.

Contribution

Experiments show that the proposed approach enables the interpretation of complex utterances with almost no complex training data, while outperforming standard few-shot prompting approaches.

Some key terms

Methodology

...
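To make "hierarchical natural language decomposition" concrete, here is a minimal sketch under stated assumptions; it is not the paper's implementation. It assumes one plausible control flow: first try to interpret an utterance directly with a simple language-to-code model, and if that fails, split it into simpler natural language sub-utterances and recurse. The `decompose` and `interpret` callables stand in for model calls and are hypothetical; here they are toy dictionary lookups. The calendar utterance, the generated code strings, and the names `find_last_event` and `create_event` are likewise invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical stand-ins for model calls (assumptions, not the paper's API):
# decompose: complex utterance -> list of simpler NL steps (or None)
# interpret: simple utterance -> code string (or None if it cannot handle it)
Decomposer = Callable[[str], Optional[List[str]]]
Interpreter = Callable[[str], Optional[str]]


@dataclass
class Node:
    utterance: str
    code: Optional[str] = None
    children: List["Node"] = field(default_factory=list)


def decompose_and_interpret(utterance: str, decompose: Decomposer,
                            interpret: Interpreter, max_depth: int = 3,
                            depth: int = 0) -> Node:
    """Build a decomposition tree whose leaves carry interpreted code."""
    node = Node(utterance)
    code = interpret(utterance)
    # Stop when the simple model can handle the utterance, or when the
    # depth budget is exhausted (leaf left unresolved with code=None).
    if code is not None or depth >= max_depth:
        node.code = code
        return node
    # Otherwise split into simpler NL steps and interpret each recursively.
    for step in decompose(utterance) or []:
        node.children.append(
            decompose_and_interpret(step, decompose, interpret,
                                    max_depth, depth + 1))
    return node


def flatten(node: Node) -> List[str]:
    """Collect leaf code in left-to-right order to form the final program."""
    if node.code is not None:
        return [node.code]
    program: List[str] = []
    for child in node.children:
        program.extend(flatten(child))
    return program


# Toy "models": hypothetical lookup tables in place of learned components.
SIMPLE = {
    "find my last meeting on Friday": "e = find_last_event(day='Friday')",
    "create a meeting with Alice after it":
        "create_event(attendee='Alice', start=e.end)",
}
SPLITS = {
    "schedule a meeting with Alice after my last meeting on Friday": [
        "find my last meeting on Friday",
        "create a meeting with Alice after it",
    ],
}

utterance = "schedule a meeting with Alice after my last meeting on Friday"
tree = decompose_and_interpret(utterance, SPLITS.get, SIMPLE.get)
program = flatten(tree)
for line in program:
    print(line)
```

The point of the structure is that only the leaves ever reach the language-to-code model, so that model only needs to handle simple utterances, which matches the claim that complex utterances can be interpreted with almost no complex training data.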