Jiannan_xiang Language Models Meet World Models 2023

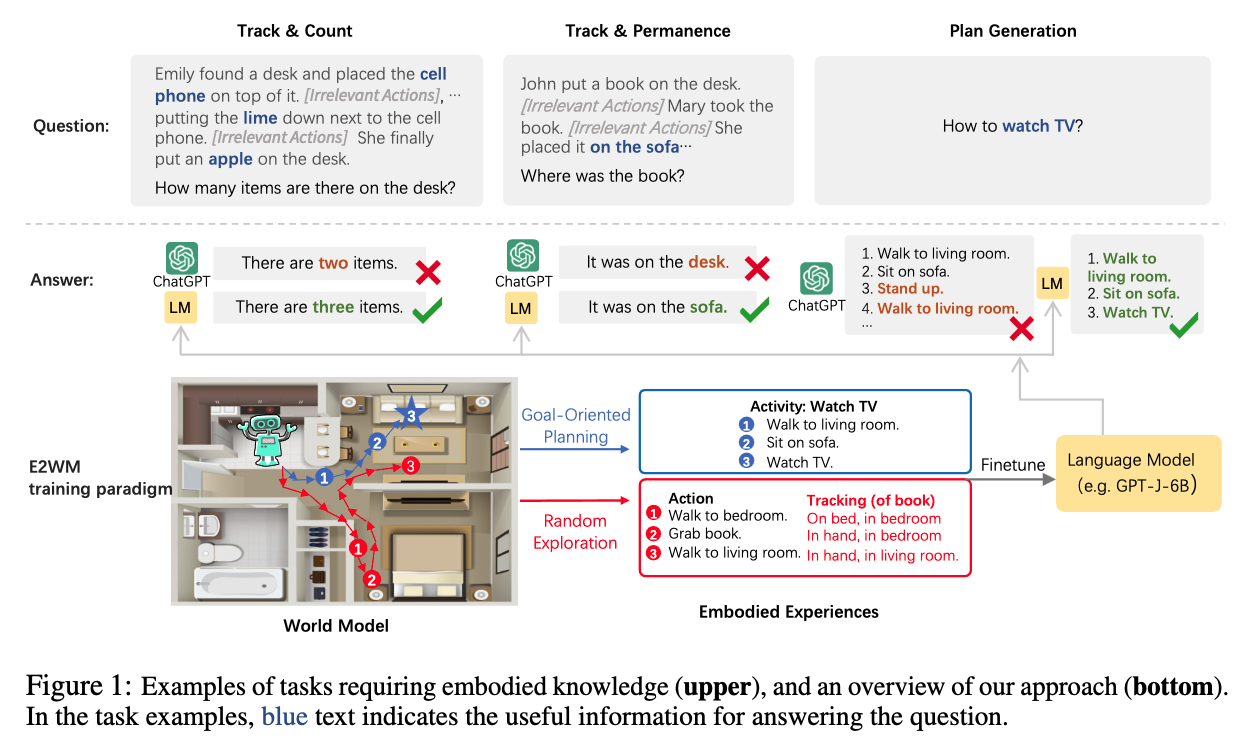

[TOC]

Title: Language Models Meet World Models: Embodied Experiences Enhance Language Models

Author: Jiannan Xiang et al.

Publish Year: 22 May 2023

Review Date: Fri, May 26, 2023

url: https://arxiv.org/pdf/2305.10626v2.pdf

Summary of paper

Motivation

LLMs often struggle with simple reasoning and planning in physical environments. The limitation arises from the fact that LMs are trained only on written text and therefore miss essential embodied knowledge and skills.

Contribution

We propose a new paradigm of enhancing LMs by finetuning them with world models, so that they gain diverse embodied knowledge while retaining their general language capabilities.

Experiences collected in a virtual physical-world simulation environment are used to finetune LMs, teaching them diverse abilities for reasoning and acting in the physical world, e.g., planning and completing goals, and object permanence and tracking.

To preserve the generalisation ability of the LMs, we use elastic weight consolidation (EWC) for selective weight updates (EWC penalises changes to the weights that matter most for the model's existing abilities), combined with low-rank adapters (LoRA) for training efficiency.

Some key terms ...
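To make the EWC + LoRA combination concrete, here is a minimal plain-Python sketch of the training objective for a single weight matrix. It assumes that the EWC anchor is the frozen pretrained weight matrix, so the displacement θ − θ* is exactly the LoRA update ΔW = (alpha / r) · B·A, and that the diagonal Fisher is estimated as the mean of squared per-sample gradients on "old" data. All function names (`lora_delta`, `empirical_fisher_diag`, `ewc_penalty`, `ewc_lora_loss`) and the choice of `lam` and `alpha` are hypothetical illustrations, not the paper's code; a real setup would compute gradients with an autodiff framework.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of a (n x m) and b (m x p)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_delta(B: Matrix, A: Matrix, alpha: float = 1.0) -> Matrix:
    """LoRA update dW = (alpha / r) * B @ A, with B: d x r and A: r x k."""
    r = len(A)
    scale = alpha / r
    return [[scale * x for x in row] for row in matmul(B, A)]


def empirical_fisher_diag(per_sample_grads: List[Matrix]) -> Matrix:
    """Diagonal Fisher estimate: element-wise mean of squared per-sample gradients."""
    n = len(per_sample_grads)
    rows, cols = len(per_sample_grads[0]), len(per_sample_grads[0][0])
    return [[sum(g[i][j] ** 2 for g in per_sample_grads) / n for j in range(cols)]
            for i in range(rows)]


def ewc_penalty(fisher: Matrix, delta: Matrix, lam: float) -> float:
    """EWC term (lam / 2) * sum_ij F_ij * (theta_ij - theta*_ij)^2.

    Assumption: theta* is the frozen pretrained matrix W0 and the effective
    weights are W0 + dW, so theta - theta* is exactly the LoRA update dW.
    """
    return 0.5 * lam * sum(f * d ** 2
                           for f_row, d_row in zip(fisher, delta)
                           for f, d in zip(f_row, d_row))


def ewc_lora_loss(task_loss: float, fisher: Matrix, B: Matrix, A: Matrix,
                  lam: float, alpha: float = 1.0) -> float:
    """Total objective: task loss on embodied data plus the EWC penalty on dW."""
    return task_loss + ewc_penalty(fisher, lora_delta(B, A, alpha), lam)


if __name__ == "__main__":
    # Toy 2x2 layer with rank-1 adapters (values are illustrative only).
    B = [[1.0], [0.0]]   # d x r = 2 x 1
    A = [[0.5, -1.0]]    # r x k = 1 x 2
    grads = [[[1.0, 0.0], [0.0, 2.0]], [[1.0, 2.0], [0.0, 0.0]]]
    F = empirical_fisher_diag(grads)
    print(ewc_lora_loss(task_loss=1.0, fisher=F, B=B, A=A, lam=2.0))
```

One design consequence worth noting: standard LoRA initialises B to zero, so under this assumption the EWC penalty starts at zero and grows only as the adapters pull the effective weights away from the pretrained ones, weighted most heavily where the Fisher says the original model is sensitive.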