Jia Li Structured CoT Prompting for Code Generation 2023

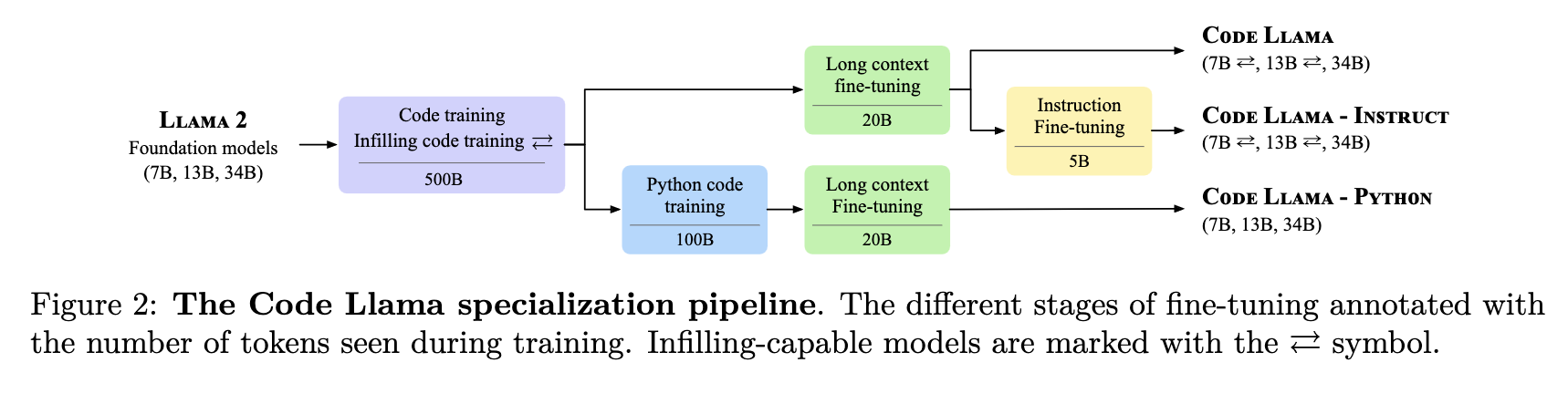

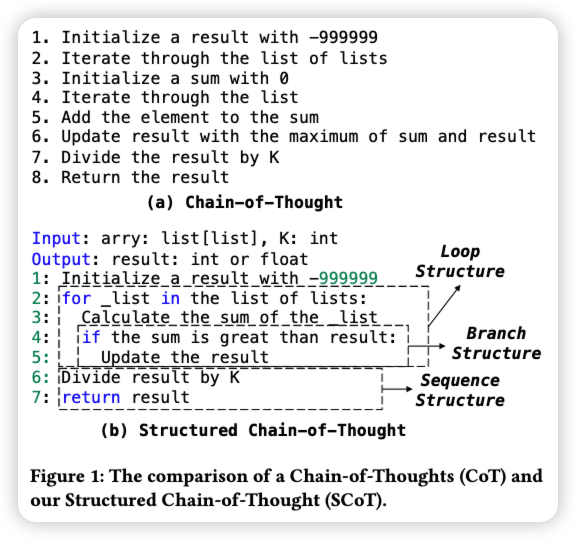

Title: Structured Chain-of-Thought Prompting for Code Generation 2023

Author: Jia Li et al.

Publish Year: 7 Sep 2023

Review Date: Wed, Feb 28, 2024

url: https://arxiv.org/pdf/2305.06599.pdf

Summary of paper

Contribution

The paper introduces Structured CoTs (SCoTs) and a new prompting technique, SCoT prompting, to improve code generation with Large Language Models (LLMs) such as ChatGPT and Codex. Standard Chain-of-Thought (CoT) prompting expresses intermediate reasoning steps in natural language. SCoT prompting instead exploits the structural information inherent in source code. Because the intermediate reasoning steps (SCoTs) are built from program structures (sequence, branch, and loop structures), the LLM is guided toward more structured and accurate code. Evaluation on three benchmarks shows that SCoT prompting outperforms CoT prompting by up to 13.79% in Pass@1, is preferred by human developers for program quality, and is robust to the choice of prompt examples. ...
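To make "program structures in intermediate reasoning steps" concrete, here is a minimal sketch. It assumes an SCoT can be modeled as an input-output signature followed by nested sequence, branch, and loop steps. The class names (`Step`, `Branch`, `Loop`, `SCoT`), the `render`/`build_prompt` helpers, and the `INSTRUCTION` wording are hypothetical illustrations, not the paper's actual prompt or code. The LLM call that would consume the prompt is omitted.

```python
from dataclasses import dataclass, field
from typing import List, Tuple, Union

# Hypothetical instruction text; NOT the paper's exact prompt wording.
INSTRUCTION = (
    "Write a rough solving process for the requirement. Start with an "
    "input-output structure, then use only sequence, branch, and loop structures."
)


@dataclass
class Step:
    """Sequence structure: one plain action."""
    text: str


@dataclass
class Branch:
    """Branch structure: a condition guarding nested steps."""
    condition: str
    body: List["Node"] = field(default_factory=list)


@dataclass
class Loop:
    """Loop structure: a loop header guarding nested steps."""
    header: str
    body: List["Node"] = field(default_factory=list)


Node = Union[Step, Branch, Loop]


@dataclass
class SCoT:
    """Hypothetical container: input-output signature plus structured steps."""
    inputs: str
    outputs: str
    steps: List[Node]


def render(scot: SCoT) -> str:
    """Render an SCoT as numbered lines; indentation encodes nesting."""
    lines = [f"Input: {scot.inputs}", f"Output: {scot.outputs}"]
    counter = 0

    def visit(nodes: List[Node], depth: int) -> None:
        nonlocal counter
        for node in nodes:
            counter += 1
            indent = "    " * depth
            if isinstance(node, Step):
                lines.append(f"{counter}: {indent}{node.text}")
            elif isinstance(node, Branch):
                lines.append(f"{counter}: {indent}if {node.condition}:")
                visit(node.body, depth + 1)
            else:  # Loop
                lines.append(f"{counter}: {indent}for {node.header}:")
                visit(node.body, depth + 1)

    visit(scot.steps, 0)
    return "\n".join(lines)


def build_prompt(examples: List[Tuple[str, SCoT]], requirement: str) -> str:
    """Few-shot prompt: (requirement, SCoT) demos, then the new requirement."""
    parts = [INSTRUCTION]
    for req, scot in examples:
        parts.append(f"Requirement:\n{req}\nSolving process:\n{render(scot)}")
    parts.append(f"Requirement:\n{requirement}\nSolving process:\n")
    return "\n\n".join(parts)


# Usage: a hand-written demo SCoT for "largest row sum".
demo = SCoT(
    inputs="arr: list of lists of numbers",
    outputs="result: the largest row sum",
    steps=[
        Step("Initialize result to negative infinity"),
        Loop("row in arr", [
            Step("Compute s as the sum of row"),
            Branch("s > result", [Step("Set result to s")]),
        ]),
        Step("Return result"),
    ],
)
print(render(demo))
```

In this sketch the `if`/`for` keywords and indentation carry the structure. That reflects the intuition behind SCoT prompting: the reasoning steps already mirror the shape of the code the model is asked to write next.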