[TOC]

- Title: Zhiting Hu Language Agent and World Models 2023

- Author:

- Publish Year:

- Review Date: Mon, Jan 22, 2024

- url: arXiv:2312.05230v1

Summary of paper

Motivation

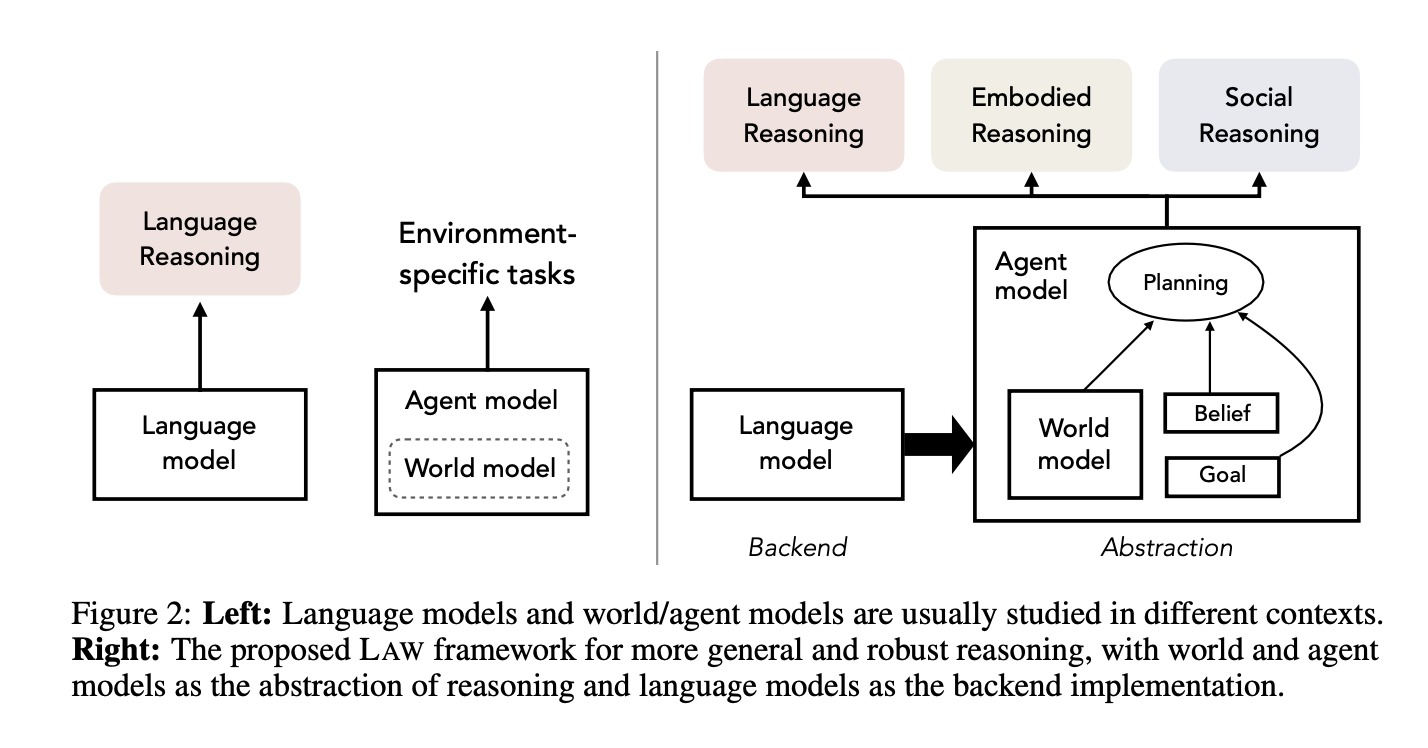

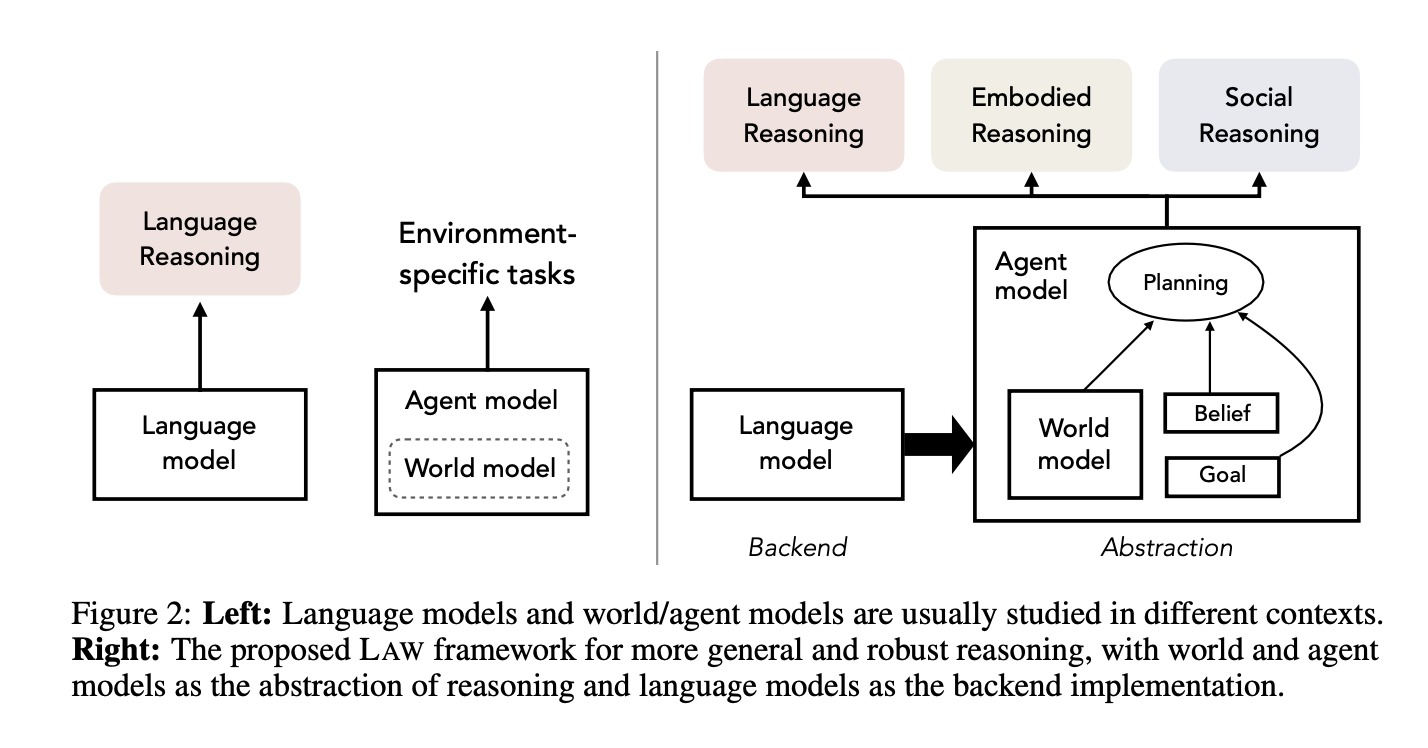

- LAW proposes that world and agent models, which encompass beliefs about the world, anticipation of consequences, goals/rewards, and strategic planning, provide a better abstraction of reasoning than language alone. In this framework, language models serve as the computational backend that implements these models.

Some key terms

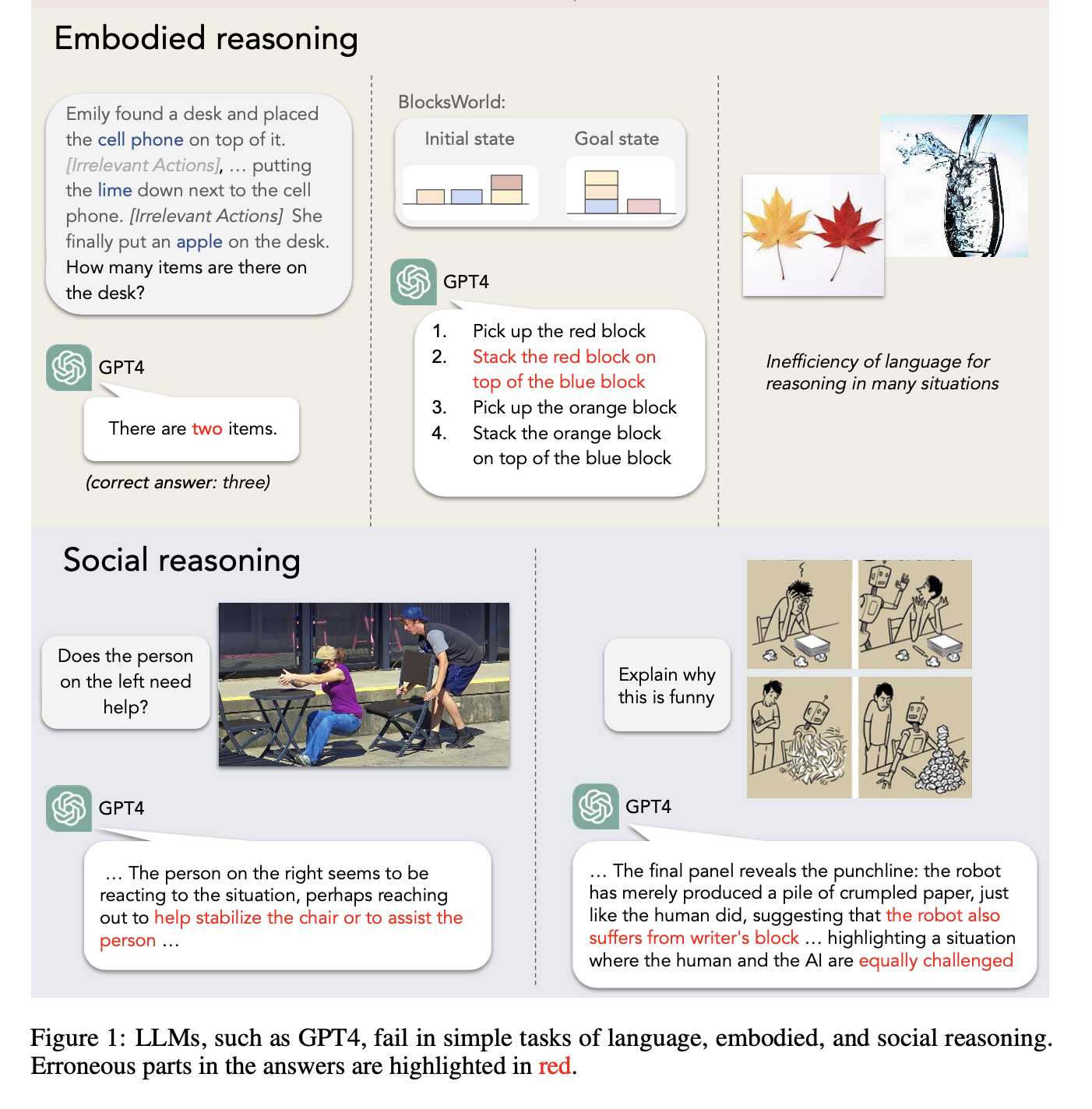

Limitation of Language

- Ambiguity and Imprecision: LLMs struggle with natural language’s ambiguity and imprecision because they lack the rich context that humans use when producing text. This context includes perceptual, social, and mental factors, as well as world commonsense. LLMs simulate surface text without understanding underlying context, leading to limitations in grounding on physical, social, and mental experiences.

- Inefficiency of Language: LLMs face challenges when using language as the primary medium for reasoning, especially in situations requiring nuanced descriptions. For instance, describing subtle differences between two objects might require lengthy text, while generating an image or using other sensory modalities can be more efficient for certain tasks, such as predicting fluid flow based on physical properties.

Failure case

System-II reasoning – construct a mental model of the world

- Humans build such mental models for robust reasoning during complex tasks (Tolman, 1948; Briscoe, 2011).

- The paper outlines the background of these three models (language, agent, world models), then introduces the LAW (Language, Agent, World) framework for reasoning. It reviews recent studies related to each element in the framework and discusses the roadmap for addressing the challenges and advancing machine reasoning and planning.

Two levels of agent model

There are two levels of agent models:

- Level-0 Agent Models: These models represent how an embodied agent optimizes actions to maximize accumulated rewards based on belief and the physical constraints defined in its world model. They are used in embodied tasks, such as a robot searching for a cup.

- Level-1 Agent Models: These models are used in social reasoning tasks and involve reasoning about the behaviors of other agents. They encompass Theory of Mind, which means forming mental models of other agents and conducting causal reasoning to interpret their behaviors based on their mental states like goals and beliefs.
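The two levels can be illustrated with a toy sketch. This is not code from the paper: the two-room cup search, the softmax (Boltzmann-rational) policy, the `beta` parameter, and all function names are assumptions chosen for illustration. The level-0 model picks actions from the agent's own belief; the level-1 model inverts the level-0 model with Bayes' rule to infer what another agent believes from the action it took.

```python
import math
from typing import Dict, List

# Hypothetical toy world: a cup is in exactly one of two rooms.
ROOMS: List[str] = ["kitchen", "office"]


def expected_reward(action: str, belief: Dict[str, float]) -> float:
    """Reward is 1 if the searched room holds the cup, so the expected
    reward of searching a room equals the believed probability."""
    return belief.get(action, 0.0)


def level0_policy(belief: Dict[str, float], beta: float = 5.0) -> Dict[str, float]:
    """Level-0 (assumed softmax-rational): action probabilities grow with
    expected reward under the agent's own belief."""
    weights = {a: math.exp(beta * expected_reward(a, belief)) for a in ROOMS}
    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


def level1_infer_belief(
    observed_action: str,
    candidate_beliefs: Dict[str, Dict[str, float]],
    prior: Dict[str, float],
) -> Dict[str, float]:
    """Level-1: posterior over which belief another agent holds, using the
    level-0 policy as the likelihood of the observed action."""
    unnormalized = {
        name: prior[name] * level0_policy(belief)[observed_action]
        for name, belief in candidate_beliefs.items()
    }
    z = sum(unnormalized.values())
    return {name: v / z for name, v in unnormalized.items()}


# Two hypotheses about what the other agent believes.
candidates = {
    "thinks_kitchen": {"kitchen": 0.9, "office": 0.1},
    "thinks_office": {"kitchen": 0.1, "office": 0.9},
}
posterior = level1_infer_belief(
    "kitchen", candidates, {"thinks_kitchen": 0.5, "thinks_office": 0.5}
)
```

Seeing the other agent walk to the kitchen shifts the posterior toward the hypothesis that it believes the cup is there, which is the kind of causal interpretation of behavior the level-1 description refers to.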

LAW framework structure

The paper reviews recent works relevant to the LAW framework, highlighting several approaches:

- LMs as Both World and Agent Models (Reasoning-via-Planning, or RAP): LMs are repurposed to serve as world models by predicting future states in reasoning and as agent models by generating actions. This approach allows for reasoning traces that consist of interleaved states and reasoning steps, improving inference coherence. RAP incorporates Monte Carlo Tree Search (MCTS) for strategic exploration in reasoning.

- Probabilistic Programs: Probabilistic programs are used to construct world and agent models for physical and social reasoning. LMs are employed to translate natural language descriptions into probabilistic programs, serving as an interface between language and thought.

- LMs as the Planner in Agent Models: LMs are used to generate plans based on prompts specifying the state, task, and memory. Interactive planning paradigms provide feedback and reflection on past actions to adjust future plans. LMs can also simulate social behaviors in abstract environments, enhancing social reasoning.

- LMs as the Goal/Reward in Agent Models: LMs are considered for generating goals or rewards in agent models. They can translate language descriptions of intended tasks into goal and reward specifications, simplifying the process.

- LMs as the Belief in Agent Models: Although less explored, there is potential for using LMs to explicitly model belief representations in agent models, similar to their role as planners, goals, or rewards.
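To make the "LM as both world and agent model" idea concrete, here is a minimal sketch of planning over interleaved states and actions. It is not the RAP implementation: the LM calls are replaced by hypothetical stub functions on a toy arithmetic task, and a depth-limited exhaustive search stands in for MCTS to keep the example short. All names (`propose_actions`, `predict_next_state`, `reward`, `plan`) are assumptions.

```python
from typing import List, Tuple

State = int
Action = str


def propose_actions(state: State) -> List[Action]:
    """Stub for the LM acting as agent model: candidate next steps."""
    return ["add1", "double"]


def predict_next_state(state: State, action: Action) -> State:
    """Stub for the LM acting as world model: state after the action."""
    return state + 1 if action == "add1" else state * 2


def reward(state: State, goal: State) -> float:
    """Toy reward: closer to the goal is better, 0 when the goal is reached."""
    return -abs(goal - state)


def plan(state: State, goal: State, depth: int) -> Tuple[float, List[Action]]:
    """Depth-limited exhaustive search (a simple stand-in for MCTS).
    Returns the best reachable reward and the action sequence achieving it."""
    if depth == 0 or state == goal:
        return reward(state, goal), []
    # Stopping here is always an option, so start from the current reward.
    best_value, best_plan = reward(state, goal), []
    for action in propose_actions(state):
        next_state = predict_next_state(state, action)
        value, rest = plan(next_state, goal, depth - 1)
        if value > best_value:
            best_value, best_plan = value, [action] + rest
    return best_value, best_plan


best_value, best_actions = plan(state=1, goal=6, depth=4)

# Replay the plan through the world model to obtain the reasoning trace
# of interleaved states and actions.
trace = [1]
for a in best_actions:
    trace.append(predict_next_state(trace[-1], a))
```

In an LM-backed version, `propose_actions` and `predict_next_state` would each be prompts to the language model, and the search would sample rather than enumerate; the structure of alternating "act" and "predict" steps is what the bullet on RAP describes.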

Results

As a discussion paper, it reports no experimental results. The authors do acknowledge certain limitations of the LAW framework:

- Symbolic Representations: The language model backend relies on symbolic representations in a discrete space. While there’s potential to augment this space with continuous latent spaces from other modalities, it remains unclear whether a single continuous latent space can match the capacity of symbolic representations.

- Incomplete Modeling: The current world and agent modeling may not capture all knowledge about the world and agents. For example, it assumes that agent behaviors are primarily driven by goals or rewards, overlooking other potential factors like social norms.

- Transformer Architecture Limits: The paper does not delve into the inherent limits of Transformer architectures, which are foundational to many language models. Further research into understanding the learning mechanisms of Transformers may complement the development of machine reasoning.

Overall, while the LAW framework presents a promising direction for advancing machine reasoning, it is essential to address these limitations and continue exploring ways to enhance its capabilities.

Summary

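This is a discussion (position) paper: it proposes the LAW framework and surveys related work rather than presenting new experiments.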