[TOC]

- Title: HuggingGPT: Solving AI tasks with ChatGPT and its Friends in Hugging Face

- Author: Yongliang Shen et al.

- Publish Year: 2 Apr 2023

- Review Date: Tue, May 23, 2023

- url: https://arxiv.org/pdf/2303.17580.pdf

Summary of paper

Motivation

- while there are abundant AI models available for different domains and modalities, individually they cannot handle complicated AI tasks.

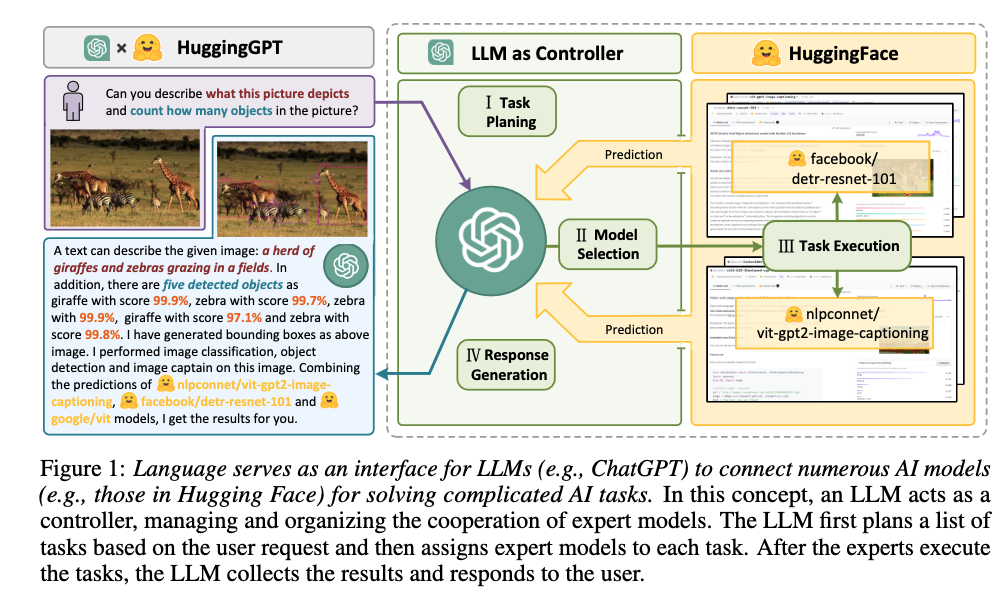

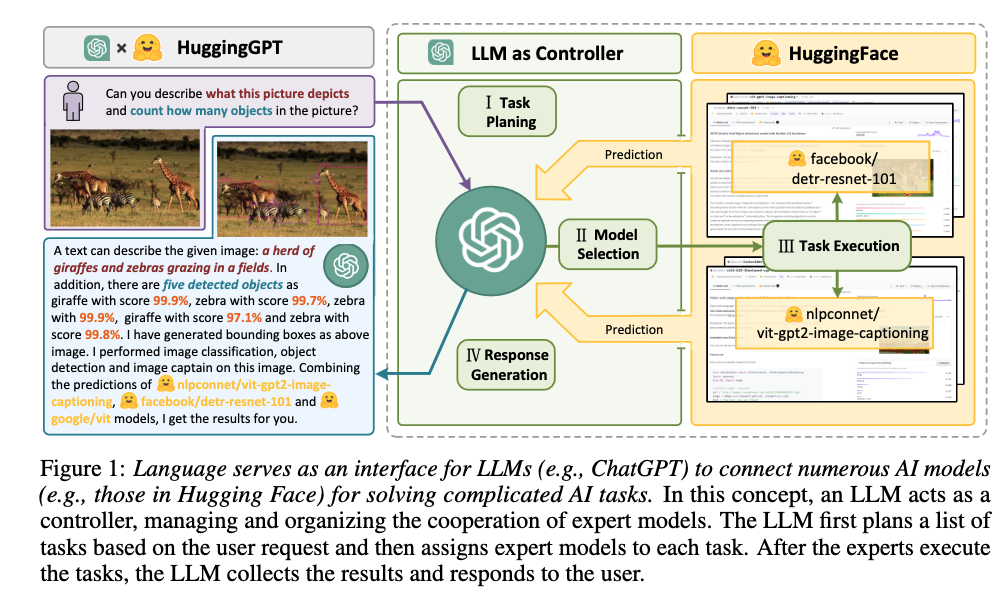

- we advocate that LLMs could act as a controller to manage existing AI models to solve complicated AI tasks and language could be a generic interface to empower this

Contribution

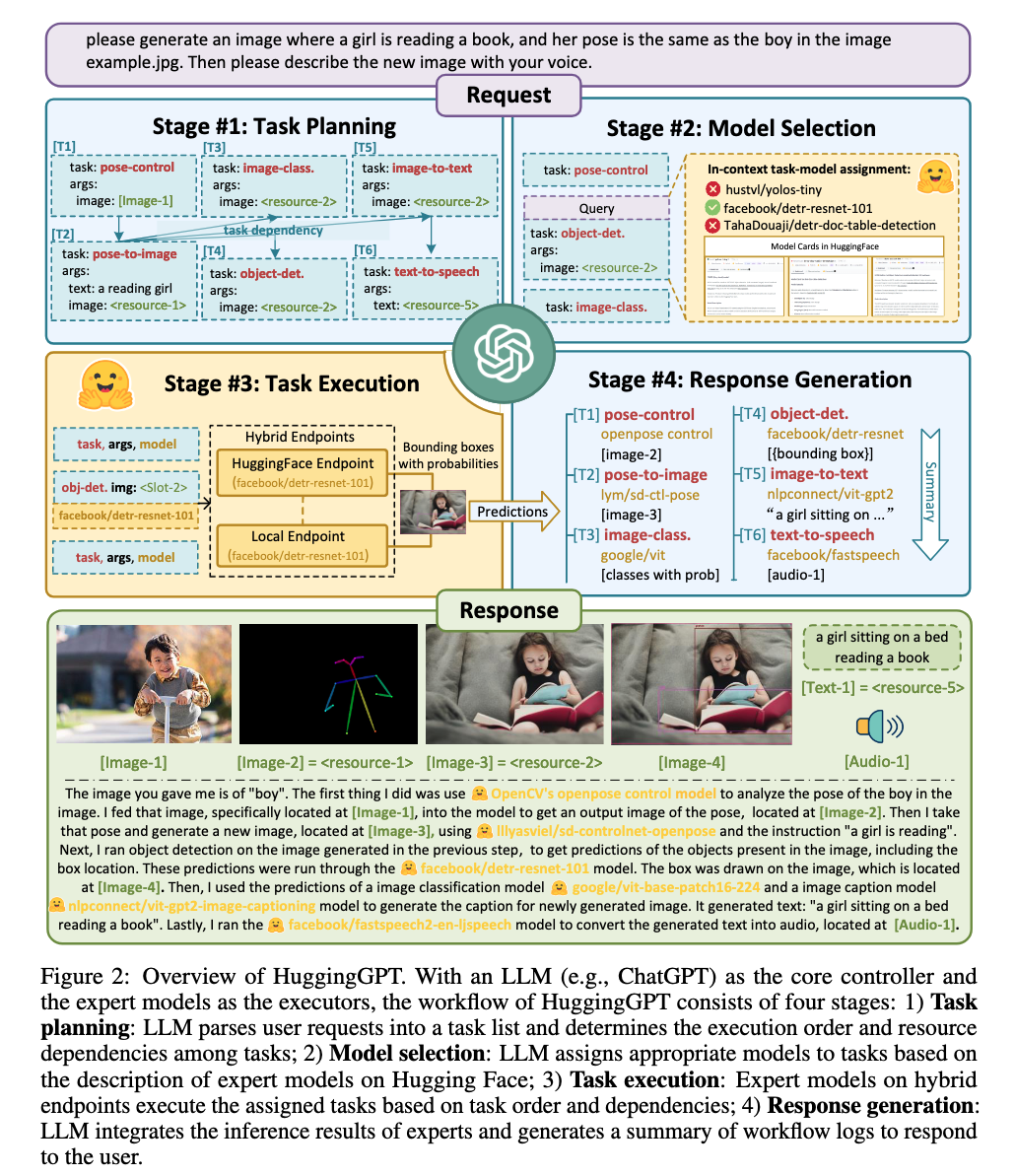

- specifically, we use ChatGPT to conduct task planning when receiving a user request, select models according to their function descriptions available in Hugging Face, execute each subtask with the selected AI model, and summarize the response according to the execution results.

Some key terms

Model

the bridge

- we notice that each AI model can be denoted as a form of language by summarizing its model function.

- therefore, by incorporating these model descriptions into the prompt, LLMs can be considered as the brain to manage AI models.
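
To make the "model as language" idea concrete, here is a minimal sketch of how model descriptions might be rendered into a prompt. The `ModelCard` class, the `build_selection_prompt` function, the model ids, and the prompt wording are all hypothetical illustrations, not the prompt used in the paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ModelCard:
    """Hypothetical record holding one model's textual function summary."""
    model_id: str
    task: str
    description: str


def build_selection_prompt(task: str, candidates: List[ModelCard]) -> str:
    """Render the descriptions of models matching `task` as plain text."""
    lines = [f"Task: {task}", "Candidate models:"]
    for card in candidates:
        if card.task == task:
            lines.append(f"- {card.model_id}: {card.description}")
    lines.append("Reply with the id of the most suitable model.")
    return "\n".join(lines)


# Made-up model ids and descriptions, for illustration only.
cards = [
    ModelCard("example/detector", "object-detection", "Detects objects in an image."),
    ModelCard("example/captioner", "image-to-text", "Generates a caption for an image."),
]
prompt = build_selection_prompt("object-detection", cards)
print(prompt)
```

Because the models are exposed to the LLM only as text, adding a new expert model in this sketch means adding one more description rather than changing any code.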

process

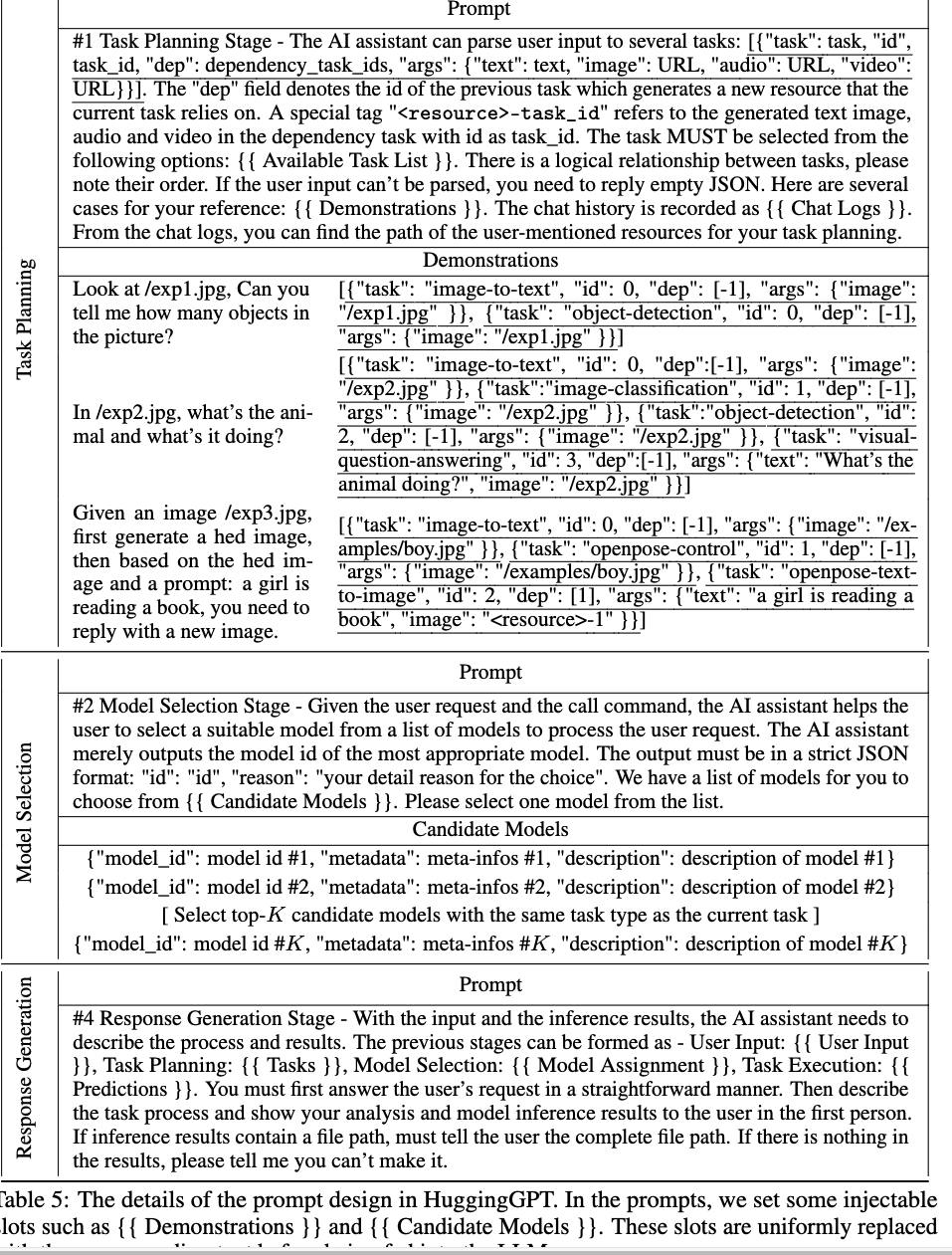

- task planning: using ChatGPT to analyse the user's request, understand its intention, and decompose it into solvable tasks via prompts

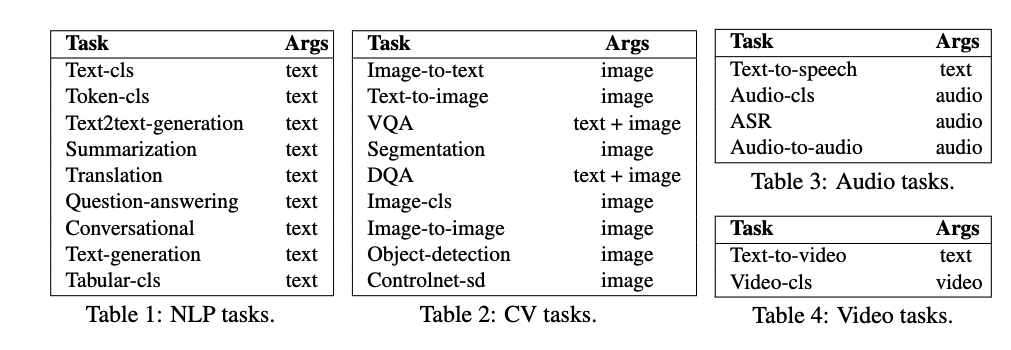

- model selection: to solve the planned tasks, ChatGPT selects expert models that are hosted on Hugging Face based on model descriptions

- task execution: invoke and execute each selected model, and return the results to ChatGPT

- response generation: using ChatGPT to integrate the predictions of all models, and generate answers for users
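
The four stages above can be sketched as a small pipeline. This is a simplified illustration under stated assumptions: the LLM and the expert models are stubbed with plain Python callables, every function name and prompt string is hypothetical, and tasks are run one after another with no dependencies between them.

```python
from typing import Callable, Dict, List

# Hypothetical type aliases: both the LLM and each expert map text to text.
LLM = Callable[[str], str]
Expert = Callable[[str], str]


def plan_tasks(llm: LLM, request: str) -> List[str]:
    """Stage 1: ask the LLM for the task names, one per line."""
    reply = llm(f"List the tasks needed for: {request}")
    return [line.strip() for line in reply.splitlines() if line.strip()]


def select_model(llm: LLM, task: str, descriptions: Dict[str, str]) -> str:
    """Stage 2: ask the LLM to choose a model id from the descriptions."""
    listing = "\n".join(f"{mid}: {desc}" for mid, desc in descriptions.items())
    choice = llm(f"Pick one model id for task '{task}':\n{listing}").strip()
    # Fall back to the first candidate if the reply is not a known id.
    return choice if choice in descriptions else next(iter(descriptions))


def run_pipeline(llm: LLM, request: str,
                 descriptions: Dict[str, str],
                 experts: Dict[str, Expert]) -> str:
    results = {}
    for task in plan_tasks(llm, request):
        model_id = select_model(llm, task, descriptions)
        results[task] = experts[model_id](request)  # Stage 3: execute
    # Stage 4: let the LLM turn the raw results into an answer.
    return llm(f"Summarize for the user: {results}")


def fake_llm(prompt: str) -> str:
    """Stub standing in for ChatGPT so the sketch runs offline."""
    if prompt.startswith("List the tasks"):
        return "image-to-text"
    if prompt.startswith("Pick one model id"):
        return "example/captioner"
    return "Result: " + prompt.split("Summarize for the user: ", 1)[1]


descriptions = {"example/captioner": "Generates a caption for an image."}
experts = {"example/captioner": lambda request: "a cat on a sofa"}
answer = run_pipeline(fake_llm, "Describe photo.jpg", descriptions, experts)
print(answer)
```

In a real system `fake_llm` would be replaced by a call to a chat model and each entry in `experts` by an inference call to a hosted model; the control flow is the part this sketch is meant to show.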

Prompt design in ChatGPT

Potential future work

limitation of HuggingGPT

stability

- rebellion during inference: LLMs occasionally fail to conform to instructions, and the output format may deviate from what the program expects, leading to exceptions in the program workflow.
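
One common way to guard a workflow against malformed LLM output is to validate the reply and retry when it does not parse. The sketch below is an illustration of that general technique, not a mitigation described in the paper; it assumes, hypothetically, that the planner is asked to reply with a JSON list of objects that each contain a `"task"` key.

```python
import json
from typing import Callable, List, Optional


def parse_task_list(raw: str) -> Optional[List[dict]]:
    """Return the parsed task list, or None if the reply is malformed."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, list):
        return None
    if not all(isinstance(item, dict) and "task" in item for item in data):
        return None
    return data


def plan_with_retry(llm: Callable[[str], str], prompt: str,
                    max_attempts: int = 3) -> List[dict]:
    """Call the LLM until its reply validates or attempts run out."""
    for _ in range(max_attempts):
        tasks = parse_task_list(llm(prompt))
        if tasks is not None:
            return tasks
    raise ValueError(f"no valid task list after {max_attempts} attempts")


# Stub LLM that ignores the format on its first reply, then complies.
replies = iter(["Sure! Here are the tasks you asked for.",
                '[{"task": "image-to-text"}]'])
flaky_llm = lambda prompt: next(replies)

tasks = plan_with_retry(flaky_llm, "Plan: describe photo.jpg")
print(tasks)
```

Validation turns an unpredictable format failure into either a clean retry or one explicit error, instead of an exception deep inside the workflow.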