[TOC]

- Title: Contrastive Instruction Trajectory Learning for Vision Language Navigation

- Author: Xiwen Liang et al.

- Publish Year: AAAI 2022

- Review Date: Fri, Feb 10, 2023

- url: https://arxiv.org/abs/2112.04138

Summary of paper

Motivation

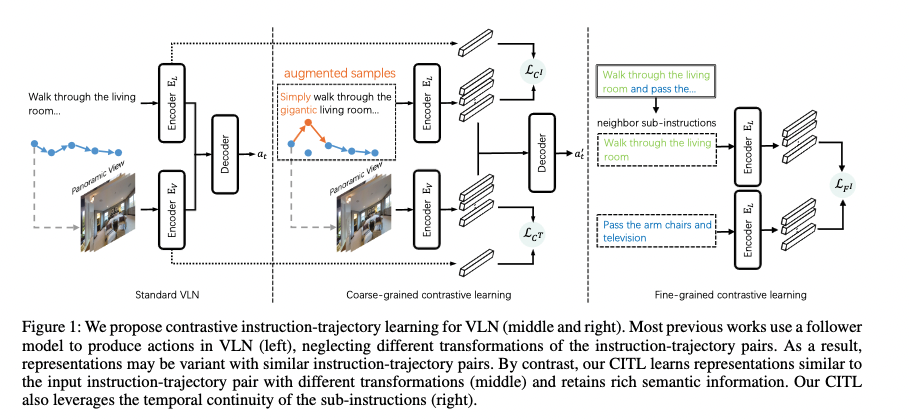

- previous works learn to navigate step-by-step following an instruction. However, these works may fail to discriminate the similarities and discrepancies across instruction-trajectory pairs, and they ignore the temporal continuity of sub-instructions. These problems hinder agents from learning distinctive vision-and-language representations.

Contribution

- we propose:

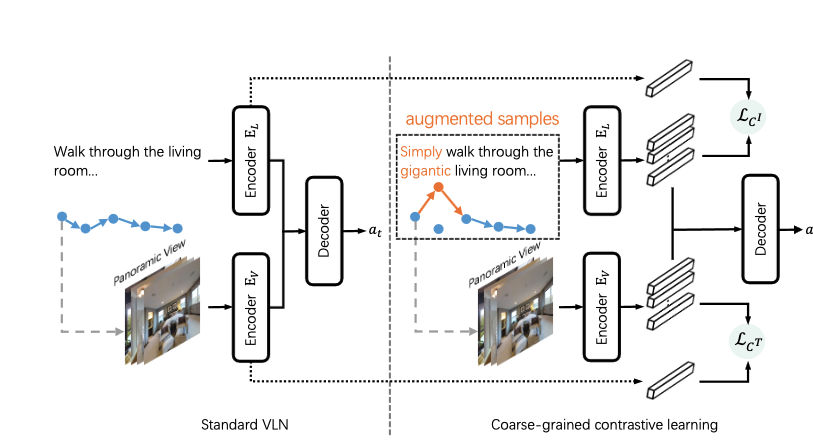

- a coarse-grained contrastive learning objective to enhance vision-and-language representations by contrasting semantics of full trajectory observations and instructions respectively;

- a fine-grained contrastive learning objective to perceive instructions by leveraging the temporal information of the sub-instructions.

- a pairwise sample-reweighting mechanism for contrastive learning to mitigate sampling bias.

Some key terms

Limitation of current VLN model

- these VLN models only use the context within an instruction-trajectory pair while ignoring the knowledge across pairs. For instance, they only recognise the correct actions that follow the instruction while ignoring the actions that do not follow the instruction

- but the difference between the correct actions and the wrong actions contains extra knowledge for navigation.

- on the other hand, previous methods do not explicitly exploit the temporal continuity inside an instruction, which may fail if the agent focuses on a wrong sub-instruction.

- thus, learning a fine-grained sub-instruction representation by leveraging the temporal continuity of sub-instructions could improve the robustness of navigation

Method

coarse-grained contrastive learning

- learn distinctive long-horizon representations for trajectories and instructions respectively.

- the idea is to compute an inter-intra cross-instance contrast: enforcing embeddings to be similar for positive trajectory-instruction pairs and dissimilar for intra-negative and inter-negative ones.

- intra-negative: changing the temporal information of the instruction, and selecting longer sub-optimal trajectories which deviate severely from the anchor one.

- inter-negative: in-batch negative sampling

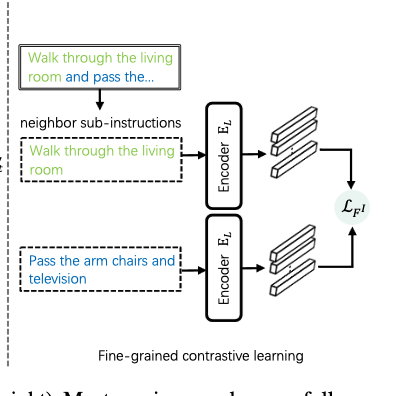
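
The notes above do not give the paper's exact loss, so the following is only a minimal sketch of the general idea, using a generic InfoNCE-style objective as a stand-in. The function names (`cosine`, `info_nce`), the temperature value, and the toy 2-d embeddings are all assumptions made for illustration; they are not taken from the paper.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def info_nce(anchor, positive, negatives, temperature=0.1):
    """Generic InfoNCE-style loss (an assumed stand-in, not the paper's exact loss).

    loss = -log( exp(s_pos / t) / (exp(s_pos / t) + sum_k exp(s_neg_k / t)) )
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)  # subtract the max before exponentiating, for numerical stability
    log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_denominator - logits[0]

# Toy 2-d embeddings, made up for illustration only.
trajectory = [1.0, 0.0]           # anchor: embedding of a full trajectory
instruction = [0.9, 0.1]          # positive: embedding of the matching instruction
intra_negatives = [[0.0, 1.0]]    # e.g. the same instruction with its temporal order altered
inter_negatives = [[-1.0, 0.2]]   # e.g. an instruction from another pair in the batch

# Loss when the positive really is the closest embedding to the anchor.
loss_aligned = info_nce(trajectory, instruction, intra_negatives + inter_negatives)

# Loss when an intra-negative is (wrongly) treated as the positive.
loss_misaligned = info_nce(trajectory, intra_negatives[0], [instruction] + inter_negatives)
```

In this toy setup the loss is small when the matching instruction is the nearest embedding to the trajectory and large when a negative takes its place, which is the behaviour the inter-intra contrast is meant to encourage.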

fine-grained contrastive learning

- learn fine-grained representations by focusing on the temporal information of sub-instructions

- we generate sub-instructions (i.e., cut a long instruction into shorter ones) and train the agent to learn embedding distances of these sub-instructions by contrastive learning. Specifically:

- neighbour sub-instructions are positive samples, while non-neighbour sub-instructions are intra-negative samples and different sub-instructions from other instructions are inter-negative samples.

- sukai comment: I don’t think this really helps
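
The sub-instruction sampling scheme described above can be sketched as a small helper that, for one anchor sub-instruction, sorts every other sub-instruction into positives, intra-negatives, and inter-negatives. This is a hedged illustration: the helper name `build_pairs`, the reading of "neighbour" as an index distance of exactly 1, and the example instructions are all assumptions, since the notes do not specify these details.

```python
def build_pairs(instructions, anchor_instr, anchor_idx):
    """Split sub-instructions into contrastive groups for one anchor.

    instructions: list of instructions, each a list of sub-instruction strings.
    anchor_instr: index of the instruction that contains the anchor.
    anchor_idx:   index of the anchor sub-instruction inside that instruction.

    Assumption: "neighbour" means an index distance of exactly 1.
    """
    positives, intra_negatives = [], []
    for j, sub in enumerate(instructions[anchor_instr]):
        if j == anchor_idx:
            continue  # skip the anchor itself
        if abs(j - anchor_idx) == 1:
            positives.append(sub)        # adjacent sub-instruction
        else:
            intra_negatives.append(sub)  # same instruction, not adjacent
    # Every sub-instruction of every other instruction is an inter-negative.
    inter_negatives = [
        sub
        for i, instr in enumerate(instructions)
        if i != anchor_instr
        for sub in instr
    ]
    return positives, intra_negatives, inter_negatives

# Hypothetical instructions, already cut into sub-instructions.
instructions = [
    ["walk down the hall", "turn left at the sofa", "enter the kitchen", "stop by the sink"],
    ["go upstairs", "wait at the door"],
]

# Anchor: "turn left at the sofa" (instruction 0, position 1).
positives, intra_negatives, inter_negatives = build_pairs(instructions, 0, 1)
```

The resulting groups could then be fed to any contrastive loss over sub-instruction embeddings. How wide the neighbour window should be is a design choice left open here.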