[TOC]

- Title: Towards Closing the Sim to Real Gap in Collaborative Multi Robot Deep Reinforcement Learning

- Author: Wenshuai Zhao et. al.

- Publish Year: 2020

- Review Date: Sun, Dec 25, 2022

Summary of paper

Motivation

- we introduce the effect of sensing, calibration, and accuracy mismatches in distributed reinforcement learning

- we discuss on how both the different types of perturbations and how the number of agents experiencing those perturbations affect the collaborative learning effort

Contribution

-

This is, to the best of our knowledge, the first work exploring the limitation of PPO in multi-robot systems when considering that different robots might be exposed to different environment where their sensors or actuators have induced errors

-

with the conclusion of this work, we set the initial point for future work on designing and developing methods to achieve robust reinforcement learning on the presence of real-world perturbation that might differ within a multi-robot system.

Some key terms

Deep RL

- DRL algorithms work on a trial and error basis, where an agent interacts with its environment and receives a reward based on the performance.

- there are DRL approaches that rely on multiple agents to parallelise the learning process or explore a wider variety of experiences.

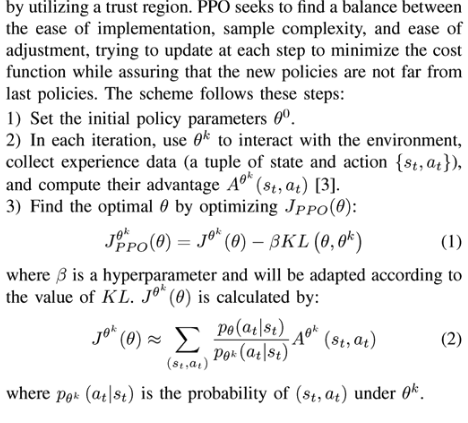

PPO

conclusion

- for a fixed small magnitude in the perturbation, the agents still converge on a policy that works for both subsets (the original and the perturbed)

- Among disturbances in the model’s input (sensing, reward) and output (actuation, action) -> the disturbances in the ability of the robots to actuate properly have had a comparatively worse effect than those in their ability to sense to the position of object accurately

limitation

- though the empirical analysis showed that RL algorithm can still converge under the reward disturbance, the experiment is not conducted in the sparse reward environment.

Major comments

citation

- PPO has been identifies ad one of the most robust approaches against reward perturbation.

- ref: Zhao, Wenshuai, et al. “Towards closing the sim-to-real gap in collaborative multi-robot deep reinforcement learning.” 2020 5th International Conference on Robotics and Automation Engineering (ICRAE). IEEE, 2020.

- ref: Wang, Jingkang, Yang Liu, and Bo Li. “Reinforcement learning with perturbed rewards.” Proceedings of the AAAI conference on artificial intelligence. Vol. 34. No. 04. 2020.