[TOC]

- Title: Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents

- Author: Wenlong Huang et al.

- Publish Year: Mar 2022

- Review Date: Mon, Sep 19, 2022

Summary of paper

Motivation

- Large language models learn general commonsense knowledge about the world during pre-training.

- The authors therefore investigate whether high-level tasks expressed in natural language (e.g., “make breakfast”) can be grounded in a chosen set of actionable steps (e.g., “open fridge”).

Contribution

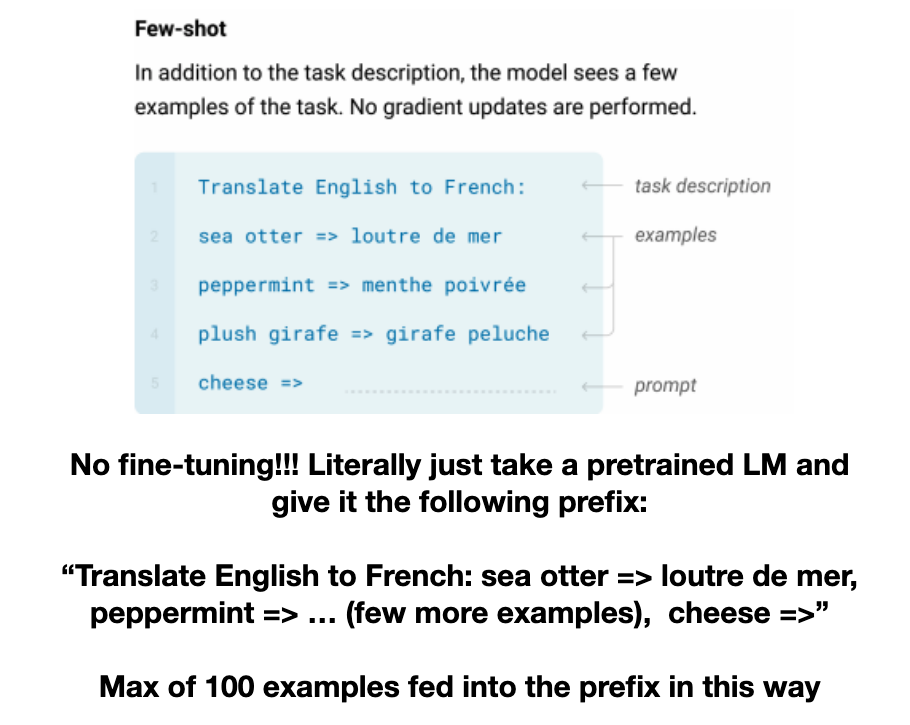

- The authors find that pre-trained LMs, if large enough and prompted appropriately, can effectively decompose high-level tasks into mid-level plans without any further training.

- They propose several tools that improve the executability of the generated plans without invasive probing of, or modifications to, the model.

Some key terms

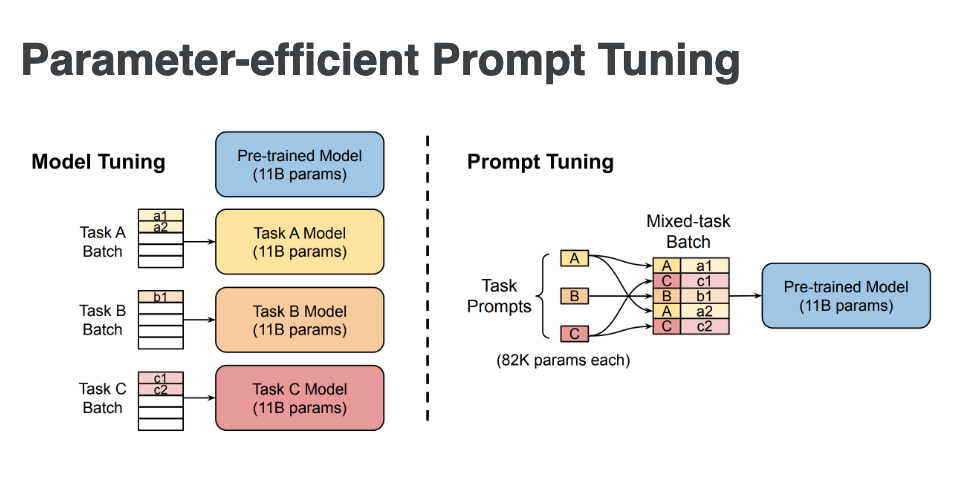

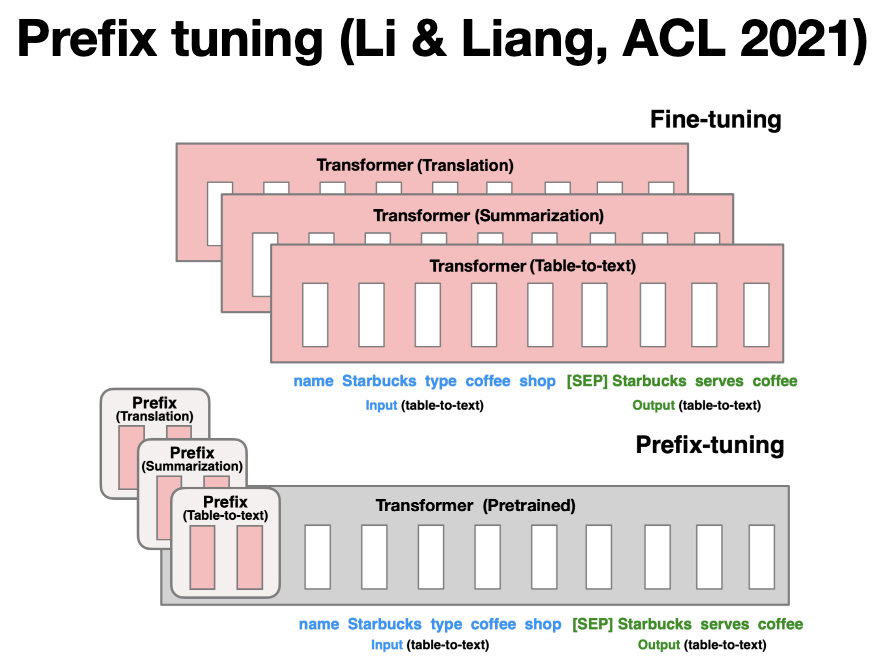

What is prompt learning

Methodology

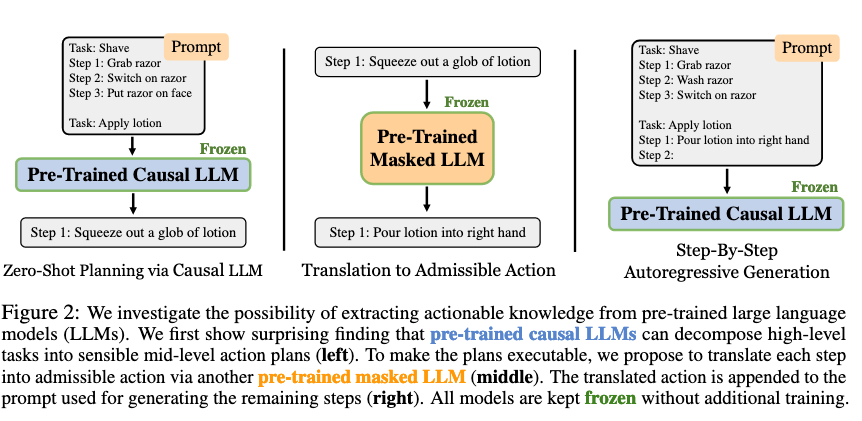

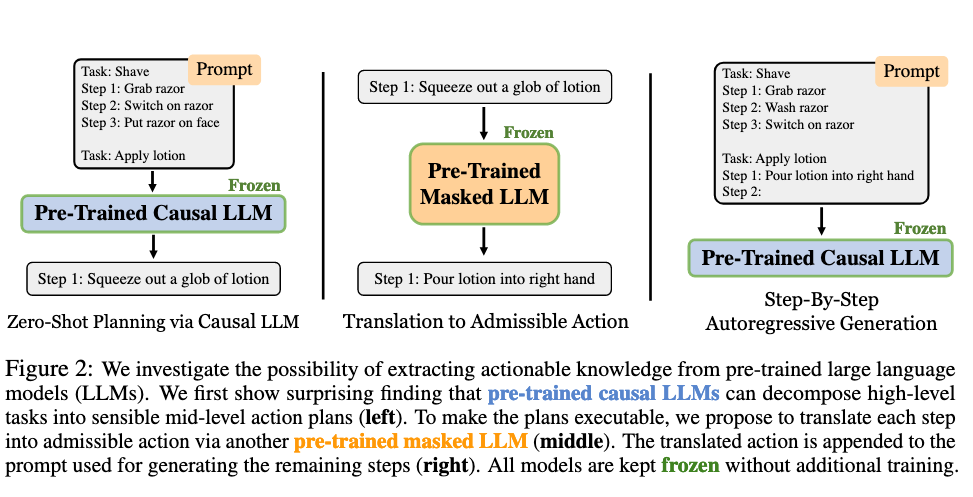

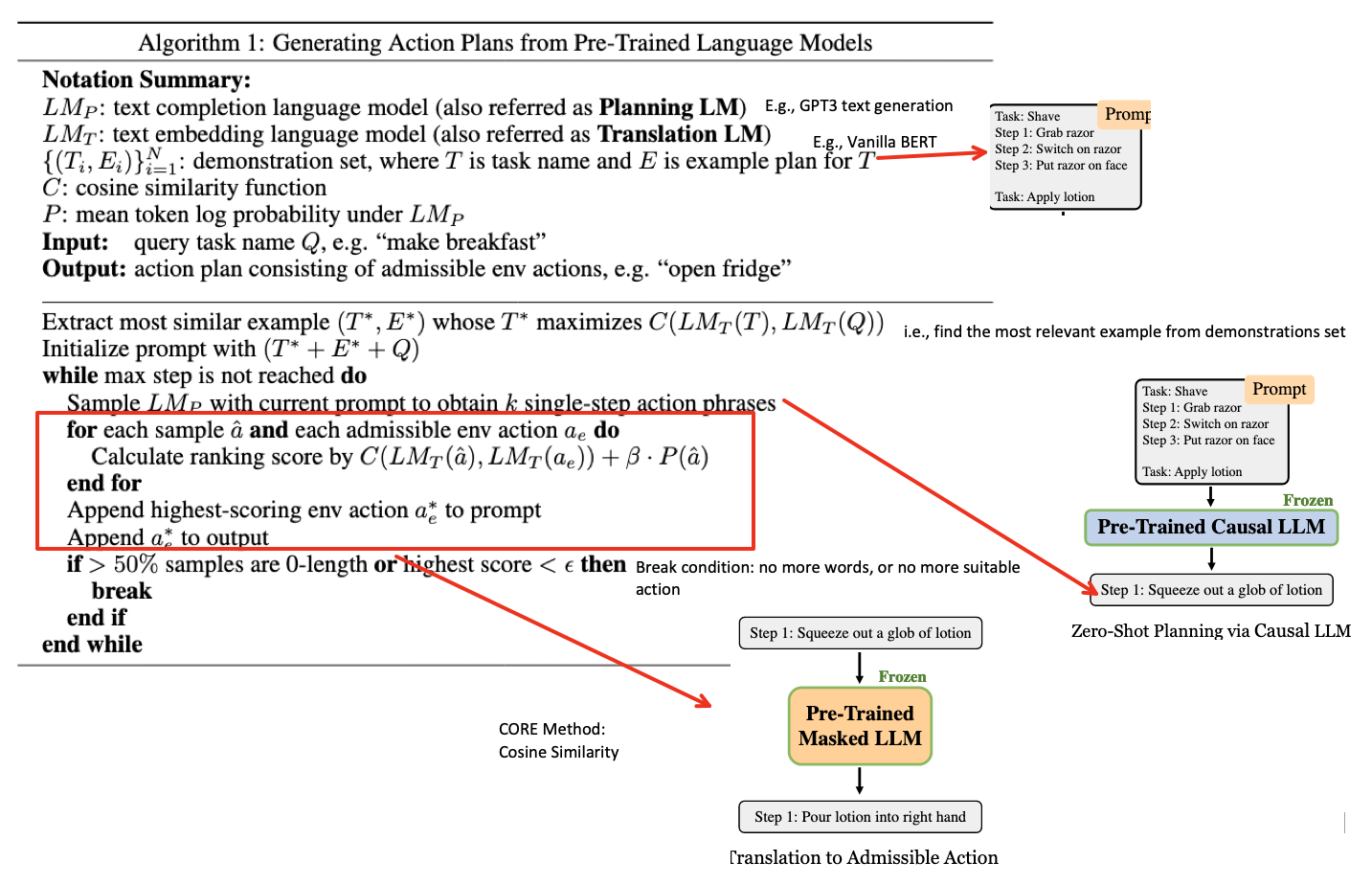

The method alternates between two steps:

- use prompt learning to convert the high-level task into mid-level plans

- convert the mid-level plans into admissible actions

- loop over the two steps above
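
The two steps and the loop above can be sketched roughly as follows. This is a minimal illustration, not the authors' code: `build_plan`, `fake_lm`, and `lookup` are hypothetical names, the language model is replaced by a scripted stub, and feeding the translated action back into the prompt is an assumption made for the sketch.

```python
from typing import Callable, List, Optional


def build_plan(
    task: str,
    propose_step: Callable[[str], Optional[str]],
    to_admissible: Callable[[str], str],
    max_steps: int = 10,
) -> List[str]:
    """Alternate between free-form generation and translation to admissible actions."""
    prompt = f"Task: {task}\n"
    steps: List[str] = []
    for _ in range(max_steps):
        free_form = propose_step(prompt)  # step 1: mid-level step from the LM
        if free_form is None:  # the stub signals "plan finished" with None
            break
        action = to_admissible(free_form)  # step 2: map to an admissible action
        steps.append(action)
        # Assumption: the translated action is fed back into the prompt.
        prompt += f"Step {len(steps)}: {action}\n"
    return steps


# Hypothetical stand-ins so the sketch runs without a real model.
scripted = iter(["I first walk to the kitchen", "open the fridge"])
lookup = {
    "I first walk to the kitchen": "walk to kitchen",
    "open the fridge": "open fridge",
}


def fake_lm(prompt: str) -> Optional[str]:
    """Return the next scripted free-form step, or None when the script ends."""
    return next(scripted, None)


result = build_plan("make breakfast", fake_lm, lookup.__getitem__)
print(result)  # ['walk to kitchen', 'open fridge']
```

In a real setup `propose_step` would call a prompted language model and `to_admissible` would be the semantic translation step; the `max_steps` cap is only there to guarantee the loop terminates.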

Admissible action planning by semantic translation

- Several things can make the mapping from free-form language to unambiguous actionable steps fail:

  - the output does not follow the pre-defined mapping of any atomic action

    - e.g., “I first walk to the bedroom” is not of the format “walk to <PLACE>”

  - the output refers to atomic actions and objects using words the environment does not recognise

    - e.g., “microwave the chocolate milk”, where “microwave” and “chocolate milk” cannot be mapped to a precise action and object

  - the output contains lexically ambiguous words

    - e.g., “open TV” vs. “switch on TV”

- SOLUTION: cosine similarity of the language embeddings of the action phrases …
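
To make the cosine-similarity idea concrete, here is a small sketch that picks the admissible action closest to a free-form phrase. It is an illustration under loud assumptions: `embed` is a toy bag-of-words stand-in for a real language embedding, and `ADMISSIBLE` and `translate` are hypothetical names, not taken from the paper.

```python
import math
from collections import Counter
from typing import List


def embed(phrase: str) -> Counter:
    """Toy stand-in for a language embedding: a bag-of-words count vector."""
    return Counter(phrase.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def translate(phrase: str, admissible_actions: List[str]) -> str:
    """Return the admissible action whose embedding is most similar to the phrase."""
    query = embed(phrase)
    return max(admissible_actions, key=lambda action: cosine(query, embed(action)))


# Hypothetical action set for illustration only.
ADMISSIBLE = ["walk to bedroom", "open fridge", "switch on tv"]

best = translate("I first walk to the bedroom", ADMISSIBLE)
print(best)  # walk to bedroom
```

A word-count embedding cannot resolve the lexical ambiguity cases (it sees no link between “open TV” and “switch on TV” beyond the shared word), which is why a learned sentence embedding would be needed in practice.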