[TOC]

- Title: Grounded Decoding: Guiding Text Generation with Grounded Models for Robot Control

- Author: Wenlong Huang et al.

- Publish Year: 1 Mar, 2023

- Review Date: Thu, Mar 30, 2023

- url: https://arxiv.org/abs/2303.00855

Summary of paper

Motivation

- Applying LLMs to settings with embodied agents, such as robots, is challenging because LLMs lack experience with the physical world, cannot parse non-language observations, and are unaware of the rewards or safety constraints that a robot may require.

- On the other hand, language-conditioned robotic policies that learn from interaction data can provide the grounding needed to situate the agent correctly in the real world, but they lack high-level semantic understanding because the interaction data available for training them is limited in breadth.

Contribution

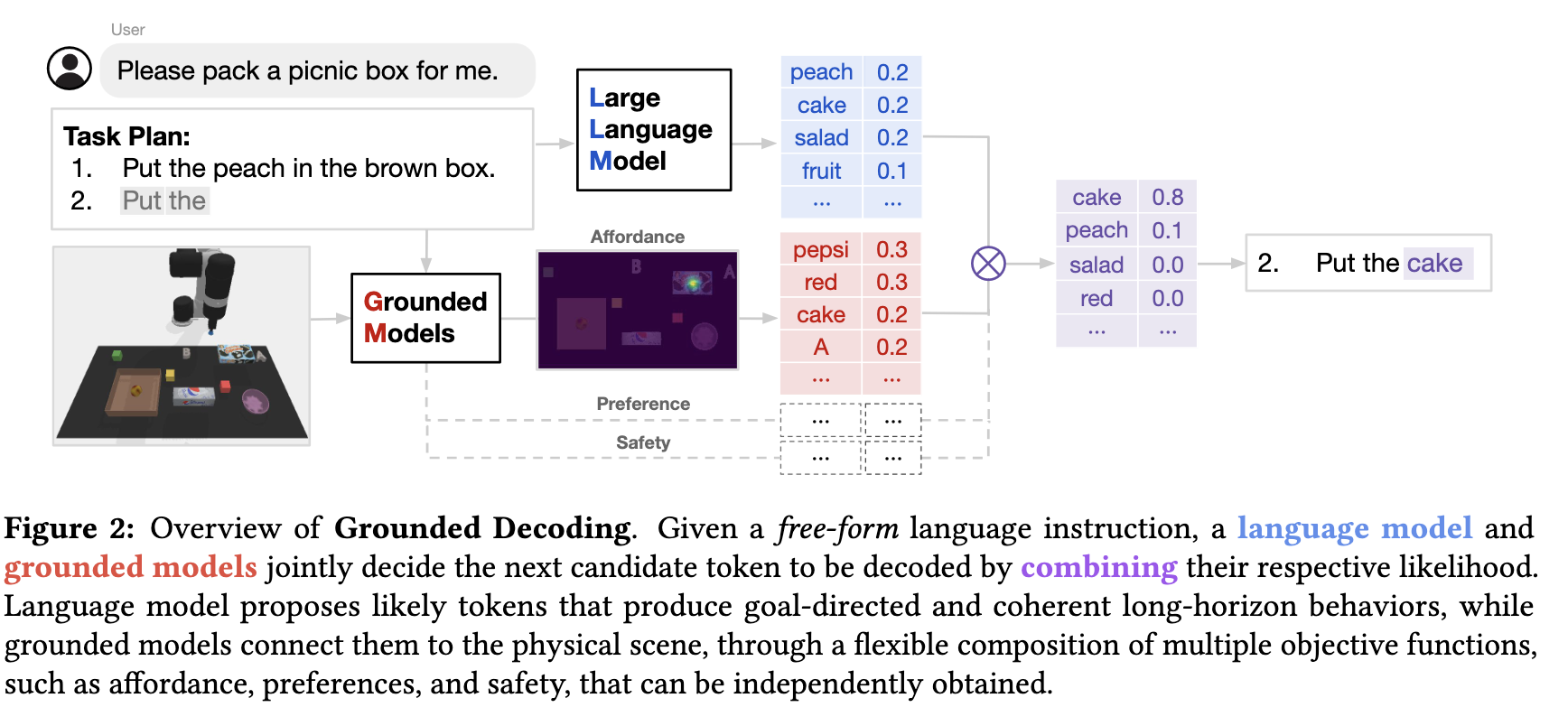

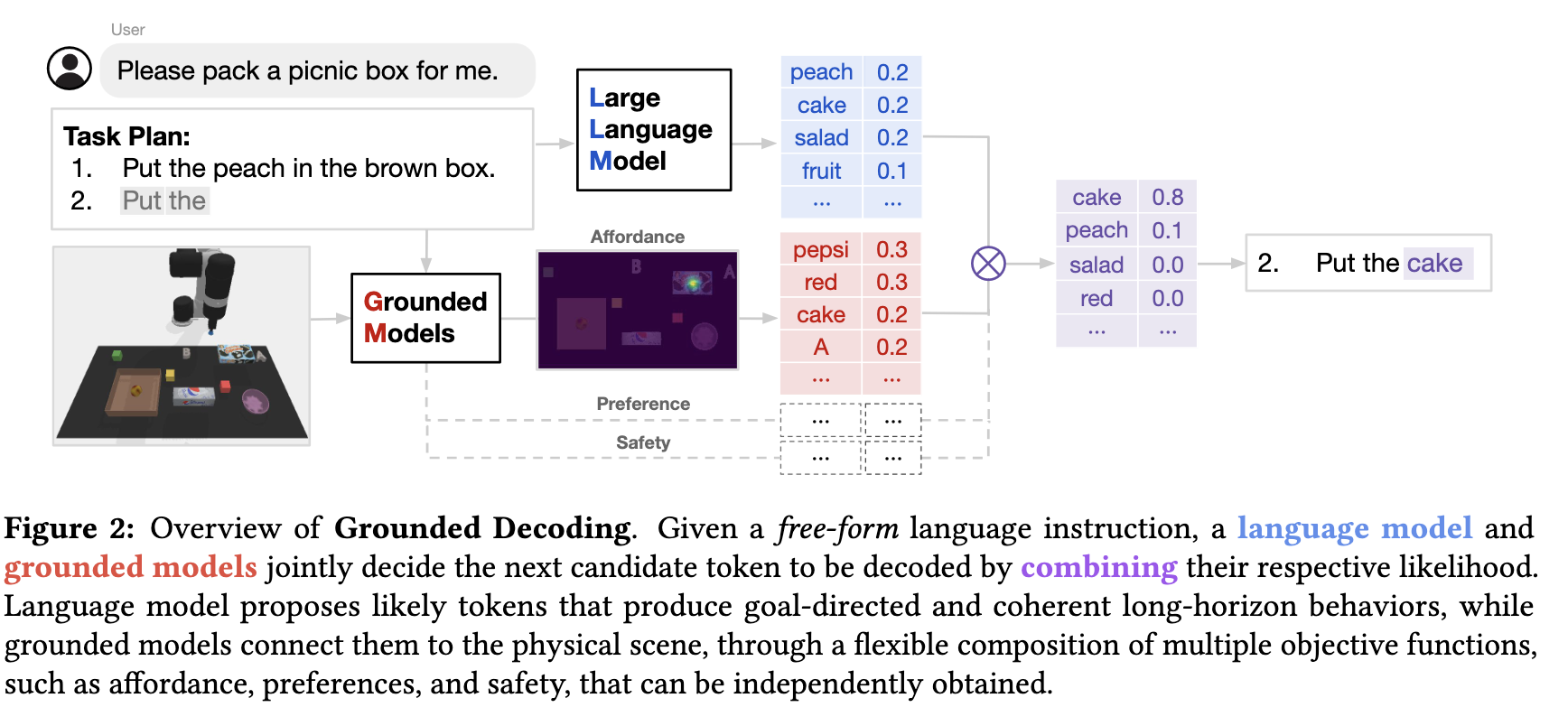

- Thus, to make use of the semantic knowledge in a language model while still situating it in an embodied setting, we must construct an action sequence that is both likely according to the language model and realisable according to grounded models of the environment.

- The authors frame this as a problem similar to probabilistic filtering: decode a sequence that has high probability under the language model and also high probability under a set of grounded model objectives.
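
To make the framing above concrete, here is a minimal sketch of greedy decoding in which each candidate token is scored by the product of a language-model probability and a grounded-model probability. It is only an illustration under stated assumptions, not the authors' implementation: the names `grounded_greedy_decode`, `toy_lm`, and `toy_grounding` are hypothetical, the probabilities are made up, and a single grounded model stands in for the "set of grounded model objectives".

```python
import math


def grounded_greedy_decode(lm_probs, grounded_probs, prefix, max_steps=10, eos="<eos>"):
    """Greedily pick, at each step, the token maximising p_LM * p_grounded.

    lm_probs(tokens)            -> dict mapping candidate token to LM probability
    grounded_probs(tokens, tok) -> probability that `tok` is realisable in the scene
    Both callables are hypothetical stand-ins for real models.
    """
    tokens = list(prefix)
    for _ in range(max_steps):
        scores = {}
        for tok, p_lm in lm_probs(tokens).items():
            p_g = grounded_probs(tokens, tok)
            if p_lm > 0 and p_g > 0:
                # Sum of log-probabilities == log of the product.
                scores[tok] = math.log(p_lm) + math.log(p_g)
        if not scores:
            break
        best = max(scores, key=scores.get)
        tokens.append(best)
        if best == eos:
            break
    return tokens


def toy_lm(tokens):
    # Assumed toy LM: after "the" it prefers "apple", otherwise it ends the sequence.
    if tokens[-1] == "the":
        return {"apple": 0.6, "sponge": 0.3, "cup": 0.1}
    return {"<eos>": 1.0}


def toy_grounding(tokens, candidate):
    # Assumed toy grounded model: only a sponge and a cup are visible in the scene.
    visible = {"sponge", "cup"}
    if candidate == "<eos>":
        return 1.0
    return 0.9 if candidate in visible else 0.01


result = grounded_greedy_decode(toy_lm, toy_grounding, ["pick", "up", "the"])
print(result)
```

In this toy setup the language model alone would choose "apple", but the grounded score down-weights objects that are not in the scene, so the decoded instruction refers to the sponge instead.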

Potential future work

- The work is related to using the knowledge in an LM as a prior bias.

- The problem framing is straightforward.