[TOC]

- Title: Extracting Action Sequences from Texts Based on Deep Reinforcement Learning

- Author: Wenfeng Feng et al.

- Publish Year: Mar 2018

- Review Date: Mar 2022

Summary of paper

Motivation

The authors want to build a model that learns to extract action sequences directly from text, without relying on external tools such as POS tagging and dependency parsing results…

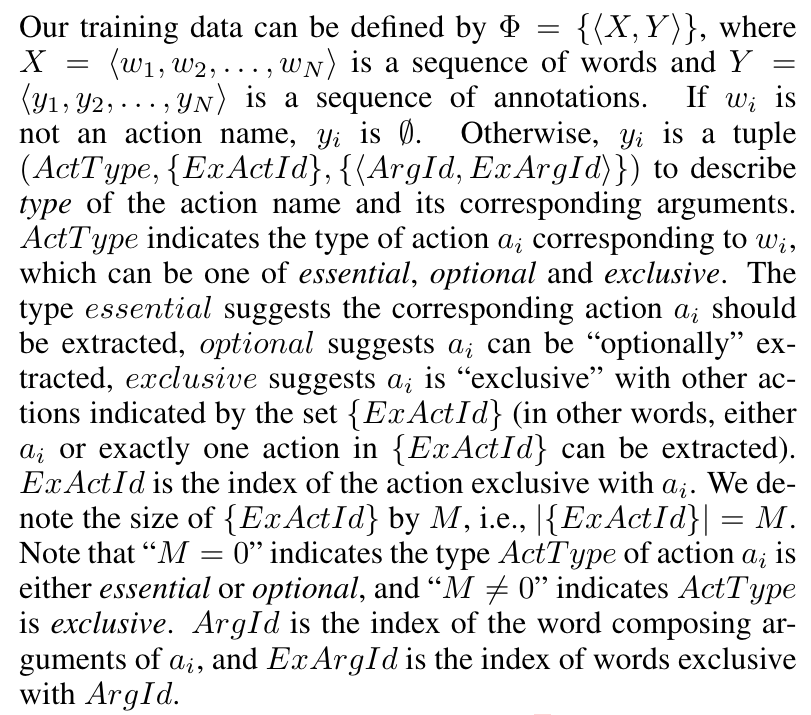

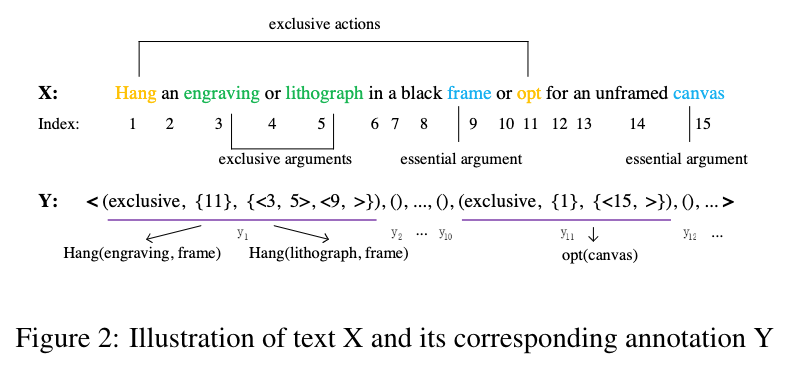

Annotation dataset structure

Example

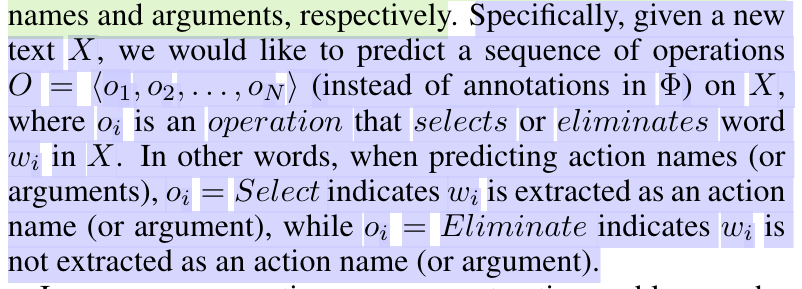
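
The exact annotation format is only shown in the image. As a rough sketch, assuming each annotated text is a word list plus, for every action, the index of its action-name word and the indices of its argument words, the data could be held like this. The class and field names (`AnnotatedText`, `Action`, `name_idx`, `arg_idxs`) and the example sentence are hypothetical, not the dataset's actual schema.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Action:
    # Index of the word that names the action (hypothetical layout).
    name_idx: int
    # Indices of the words that are arguments of this action.
    arg_idxs: List[int] = field(default_factory=list)


@dataclass
class AnnotatedText:
    words: List[str]
    actions: List[Action]

    def action_sequence(self) -> List[Tuple[str, List[str]]]:
        """Return (action name, [argument words]) pairs in text order."""
        ordered = sorted(self.actions, key=lambda a: a.name_idx)
        return [
            (self.words[a.name_idx], [self.words[i] for i in a.arg_idxs])
            for a in ordered
        ]


# Illustrative text: "Preheat" acts on "oven", "bake" acts on "bread".
text = AnnotatedText(
    words="Preheat the oven then bake the bread".split(),
    actions=[Action(name_idx=4, arg_idxs=[6]), Action(name_idx=0, arg_idxs=[2])],
)
print(text.action_sequence())  # [('Preheat', ['oven']), ('bake', ['bread'])]
```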

Model

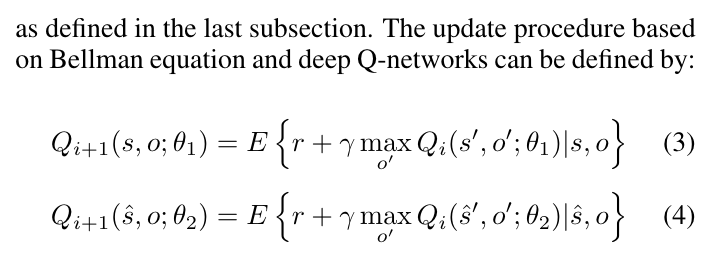

They use the same framework to learn two separate models: one predicts action names and the other predicts action arguments.
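
The two-model split can be sketched as a two-stage pipeline: the action-name model picks name words, then the argument model is run once per selected name. This sketch assumes the argument model receives the chosen name's position as context; `extract_actions` and the toy rule-based stand-ins are hypothetical placeholders for the two trained extractors, used only to exercise the control flow.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical interfaces for the two trained models.
NameModel = Callable[[Sequence[str]], List[int]]
ArgModel = Callable[[Sequence[str], int], List[int]]


def extract_actions(
    words: Sequence[str], name_model: NameModel, arg_model: ArgModel
) -> List[Tuple[str, List[str]]]:
    """Stage 1 selects action names; stage 2 selects arguments per name."""
    sequence = []
    for name_idx in sorted(name_model(words)):
        arg_idxs = arg_model(words, name_idx)
        sequence.append((words[name_idx], [words[i] for i in arg_idxs]))
    return sequence


# Toy stand-ins (NOT the paper's models), just to make the sketch runnable.
VERBS = {"preheat", "bake"}
ARTICLES = {"the", "a", "an"}


def toy_name_model(words: Sequence[str]) -> List[int]:
    return [i for i, w in enumerate(words) if w.lower() in VERBS]


def toy_arg_model(words: Sequence[str], name_idx: int) -> List[int]:
    # Take the first non-article word after the action name.
    for i in range(name_idx + 1, len(words)):
        if words[i].lower() not in ARTICLES:
            return [i]
    return []


words = "Preheat the oven then bake the bread".split()
print(extract_actions(words, toy_name_model, toy_arg_model))
```

Keeping the stages separate means each model can be trained with its own labels and rewards, which matches the "two models" framing; whether the argument model really conditions on the name position is an assumption here.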

Why this approach can be treated as a reinforcement learning problem

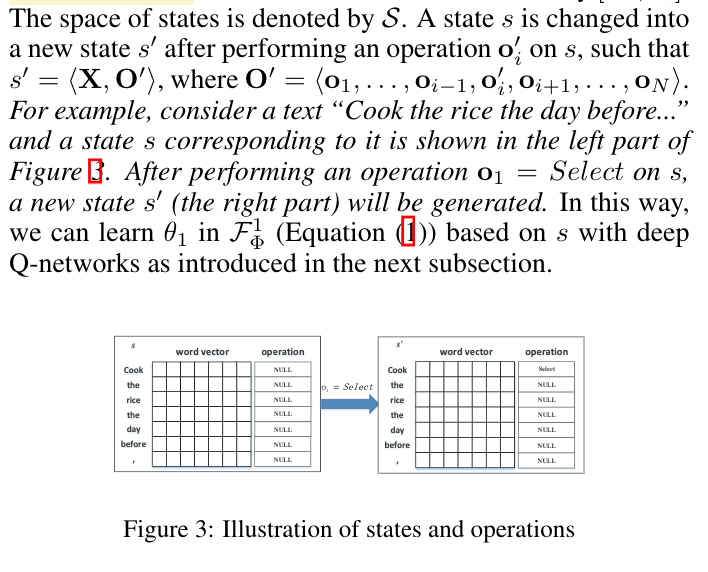

The initial state is a sequence of (word vector, operation) pairs in which every operation is NULL.
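
A minimal sketch of that state and one transition, assuming each RL action assigns a non-NULL operation to one word and an episode ends once no word is left as NULL. The operation names `SELECT` and `ELIMINATE`, and the helpers `initial_state`, `step`, and `is_terminal`, are hypothetical labels for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Op(Enum):
    NULL = 0       # undecided: the starting operation for every word
    SELECT = 1     # hypothetical: keep the word as an item
    ELIMINATE = 2  # hypothetical: discard the word


@dataclass(frozen=True)
class State:
    vectors: Tuple[Tuple[float, ...], ...]  # one word vector per word
    ops: Tuple[Op, ...]                     # one operation per word


def initial_state(vectors: List[List[float]]) -> State:
    """Pair every word vector with the NULL operation."""
    return State(tuple(tuple(v) for v in vectors), tuple(Op.NULL for _ in vectors))


def step(state: State, index: int, op: Op) -> State:
    """Apply one action: assign `op` to the word at `index`."""
    if op is Op.NULL:
        raise ValueError("an action must assign a non-NULL operation")
    ops = list(state.ops)
    ops[index] = op
    return State(state.vectors, tuple(ops))


def is_terminal(state: State) -> bool:
    """Assumed episode end: every word has been decided."""
    return all(o is not Op.NULL for o in state.ops)


s = initial_state([[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]])
s = step(s, 0, Op.SELECT)
s = step(s, 1, Op.ELIMINATE)
print([o.name for o in s.ops], is_terminal(s))  # ['SELECT', 'ELIMINATE', 'NULL'] False
```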

Why did the authors frame this method as an RL problem…

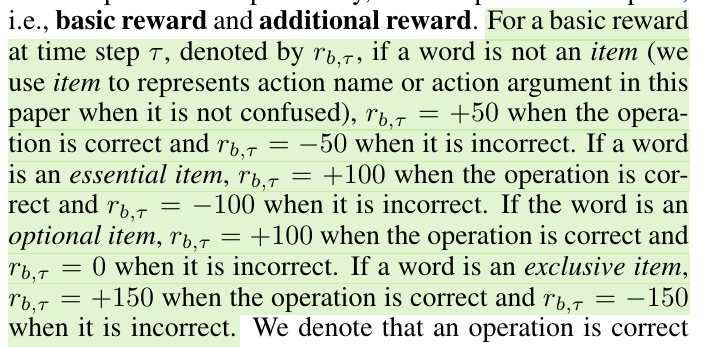

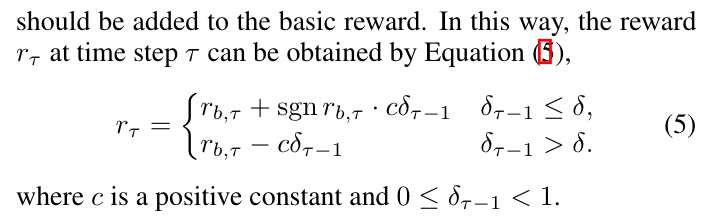

Reward signals

- basic reward signal

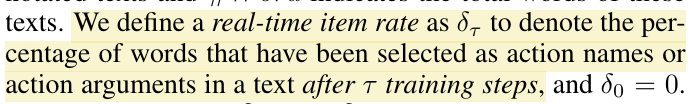

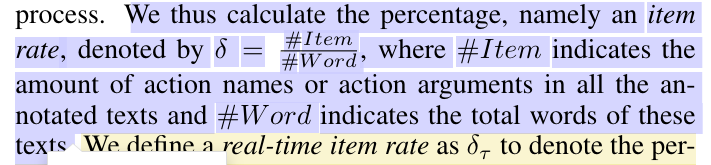

- additional reward signal

    - this acts as a momentum/buffer term derived from overall statistics of the training data

    - issue: the item rate is unavailable for test texts, because they have no labels.
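
The exact reward formulas are in the figures and are not reproduced here. As a hedged sketch only, assume the basic reward is +1 when a word's operation agrees with its gold label and -1 otherwise, that the item rate is the fraction of words labeled as items in the training corpus, and that the additional reward penalizes deviating from that rate. `basic_reward`, `item_rate`, `additional_reward`, and all constants are hypothetical, not the paper's definitions.

```python
from typing import Sequence


def basic_reward(selected: bool, is_item: bool, pos: float = 1.0, neg: float = -1.0) -> float:
    """Hypothetical basic reward: `pos` if the decision matches the gold label."""
    return pos if selected == is_item else neg


def item_rate(corpus_labels: Sequence[Sequence[bool]]) -> float:
    """Fraction of words labeled as items over a labeled (training) corpus."""
    total = sum(len(labels) for labels in corpus_labels)
    items = sum(sum(labels) for labels in corpus_labels)
    return items / total if total else 0.0


def additional_reward(selections: Sequence[bool], target_rate: float, scale: float = 1.0) -> float:
    """Hypothetical extra term: penalize a selection rate far from the target rate."""
    if not selections:
        return 0.0
    current_rate = sum(selections) / len(selections)
    return -scale * abs(current_rate - target_rate)


train_labels = [[True, False, True, False], [False, True, False, False]]
rate = item_rate(train_labels)  # 3 items / 8 words = 0.375

selections = [True, False, False, False]
gold = [True, False, True, False]
total = sum(basic_reward(s, g) for s, g in zip(selections, gold))
total += additional_reward(selections, rate)
print(rate, total)  # 0.375 1.875
```

Because `item_rate` needs gold labels, `target_rate` for an unlabeled test text could only be borrowed from the training corpus, which is the limitation raised in the last bullet.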

Potential future work

When extending to an unseen corpus, we could use word associations to help extract action names and their arguments.
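
One possible word-association measure is pointwise mutual information (PMI) over sentence-level co-occurrence; this is an illustrative choice, not something the paper proposes. A sketch, assuming each sentence is a list of tokens and that a pair never seen together gets a score of negative infinity; `pmi_table` and `pmi` are hypothetical helpers.

```python
import math
from collections import Counter
from itertools import combinations
from typing import Dict, List, Tuple


def pmi_table(sentences: List[List[str]]) -> Dict[Tuple[str, str], float]:
    """PMI(a, b) = log(p(a, b) / (p(a) * p(b))), probabilities per sentence."""
    n = len(sentences)
    word_counts: Counter = Counter()
    pair_counts: Counter = Counter()
    for sent in sentences:
        vocab = sorted(set(w.lower() for w in sent))  # count each word once per sentence
        word_counts.update(vocab)
        pair_counts.update(combinations(vocab, 2))    # pairs stored in sorted order
    return {
        (a, b): math.log((c / n) / ((word_counts[a] / n) * (word_counts[b] / n)))
        for (a, b), c in pair_counts.items()
    }


def pmi(table: Dict[Tuple[str, str], float], a: str, b: str) -> float:
    """Look up a pair in either order; unseen pairs get -inf."""
    key = tuple(sorted((a.lower(), b.lower())))
    return table.get(key, float("-inf"))


sentences = [s.split() for s in
             ["preheat the oven", "bake the bread", "bake the cake", "slice the bread"]]
table = pmi_table(sentences)
print(pmi(table, "preheat", "oven"), pmi(table, "oven", "bake"))
```

A high score between a candidate argument and an action name could then serve as an extra feature or prior when no labels exist for the new corpus.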