[TOC]

- Title: No-Regret Reinforcement Learning with Heavy-Tailed Rewards

- Author: Vincent Zhuang et al.

- Publish Year: 2021

- Review Date: Sun, Dec 25, 2022

Summary of paper

Motivation

- To the best of our knowledge, no prior work has considered our setting of heavy-tailed rewards in the MDP setting.

Contribution

- We demonstrate that robust mean estimation techniques can be broadly applied to reinforcement learning algorithms (specifically confidence-based methods) in order to provably handle the heavy-tailed reward setting.

Some key terms

Robust UCB algorithm

- Leverages robust mean estimators, such as the truncated mean and the median of means, which have tight concentration properties even under heavy-tailed noise.

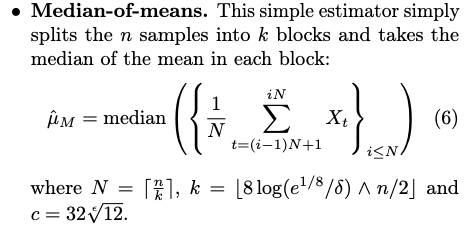

- The median-of-means estimator is a commonly used strategy for robust mean estimation in heavy-tailed bandit algorithms.

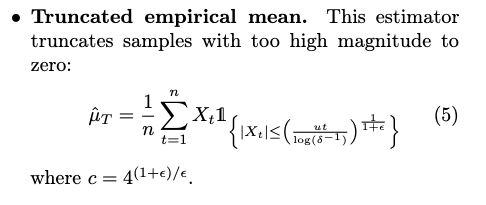

Truncated empirical mean
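
The idea can be illustrated with a minimal sketch. It follows the standard truncated-mean form from the heavy-tailed bandit literature and is not taken from the paper's code. It assumes the rewards have a bounded `(1 + eps)`-th raw moment `u`. The function name and the parameters `u`, `eps` and `delta` are illustrative choices.

```python
import math


def truncated_mean(rewards, u, eps, delta):
    """Truncated empirical mean of a list of rewards.

    Assumptions (illustrative, not from the paper's code):
      u     : bound on the (1 + eps)-th raw moment, E|X|^(1+eps) <= u
      eps   : moment parameter in (0, 1]
      delta : failure probability in (0, 1)
    The t-th sample is kept only if its magnitude is below a threshold
    that grows with t; otherwise it contributes zero to the sum.
    """
    n = len(rewards)
    if n == 0:
        raise ValueError("need at least one reward")
    log_term = math.log(1.0 / delta)
    total = 0.0
    for t, x in enumerate(rewards, start=1):
        threshold = (u * t / log_term) ** (1.0 / (1.0 + eps))
        if abs(x) <= threshold:
            total += x
    # Divide by n (not by the number of kept samples), as in the
    # standard definition of the estimator.
    return total / n
```

With `eps = 1`, `u = 4` and `delta = exp(-1)`, the thresholds are `2 * sqrt(t)`, so an outlier of 1000 in the fourth position is discarded while rewards of 1 are kept.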

Median-of-means
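
A minimal sketch of the estimator, assuming the number of blocks `k` is passed in by the caller (in the bandit literature `k` is usually chosen on the order of `log(1/delta)`). The function name and signature are illustrative, not the paper's.

```python
import statistics


def median_of_means(rewards, k):
    """Median-of-means estimate of the mean of `rewards`.

    The samples are split into k equal-sized consecutive blocks, the
    empirical mean of each block is computed, and the median of those
    k block means is returned. Samples left over after forming k full
    blocks are ignored in this sketch.
    """
    n = len(rewards)
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= len(rewards)")
    block = n // k
    means = [
        sum(rewards[i * block:(i + 1) * block]) / block
        for i in range(k)
    ]
    return statistics.median(means)
```

A single extreme sample can corrupt at most one block mean, so the median over blocks is unaffected as long as most blocks are clean. For example, `median_of_means([1, 1, 1, 1, 1, 1000], k=3)` has block means 1, 1 and 500.5 and returns 1.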

Adaptive reward clipping

- The reward truncation in Heavy-DQN can be viewed as an adaptive version of the fixed reward clipping commonly used in deep RL.

- The main purpose of fixed reward clipping is to stabilize the training dynamics of the neural network, whereas the truncation in this paper is designed to ensure theoretically tight reward estimation in the heavy-tailed setting for each state-action pair.
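
The contrast between the two can be sketched as follows. This is a hypothetical illustration, not Heavy-DQN's actual rule: the adaptive threshold here reuses the count-dependent form of the truncated-mean estimator, and `u`, `eps`, `delta` and both function names are assumptions.

```python
import math


def fixed_clip(reward, bound=1.0):
    """Fixed clipping: squash every reward into [-bound, bound]."""
    return max(-bound, min(bound, reward))


def adaptive_truncate(reward, count, u, eps, delta):
    """Hypothetical adaptive truncation for one state-action pair.

    `count` is the number of visits to the pair so far (>= 1). The
    threshold grows with `count`, so fewer rewards are zeroed out as
    more data is collected. Rewards above the threshold are replaced
    by 0 rather than clipped to the threshold.
    """
    threshold = (u * count / math.log(1.0 / delta)) ** (1.0 / (1.0 + eps))
    return reward if abs(reward) <= threshold else 0.0
```

Under these assumptions, a reward of 50 is always mapped to 1 by `fixed_clip`, whereas `adaptive_truncate` zeroes it on the first visit but passes it through unchanged once the pair has been visited often enough.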

Good things about the paper (one paragraph)

- We read this paper for background on handling perturbed rewards, but it is not very relevant to our study.

Minor comments

Good phrases for essay writing

- “In an orthogonal line of work, XXX did that”