[TOC]

- Title: Adversarial Attacks on Neural Network Policies

- Author: Sandy Huang et. al.

- Publish Year: 8 Feb 2017

- Review Date: Wed, Dec 28, 2022

Summary of paper

Motivation

- in this work, we show adversarial attacks are also effective when targeting neural network policies in reinforcement learning. Specifically, we show existing adversarial example crafting techniques can be used to significantly degrade test-time performance of trained policies.

Contribution

-

we characterise the degree of vulnerability across tasks and training algorithm, for a subclass of adversarial example attacks in white-box and black-box settings.

-

regardless of the learned tasks or training algorithm, we observed a significant drop in performance, even with small adversarial perturbations that do not interfere with human perceptions.

-

main contribution is to characterise how the efffectiveness of adversarial examples is impacted by two factors: the deep RL algorithm used to learn the policy and whether the adversary has access to the policy network

Limitation

- The perturbation type is only negate the sign of the signal

Some key terms

adversarial example crafting with the fast gradient sign method

- it is common to use a computationally cheap method of generating adversarial perturbations, even if this reduces the attack rate somewhat.

- Fast Gradient Sign Method (FGSM)

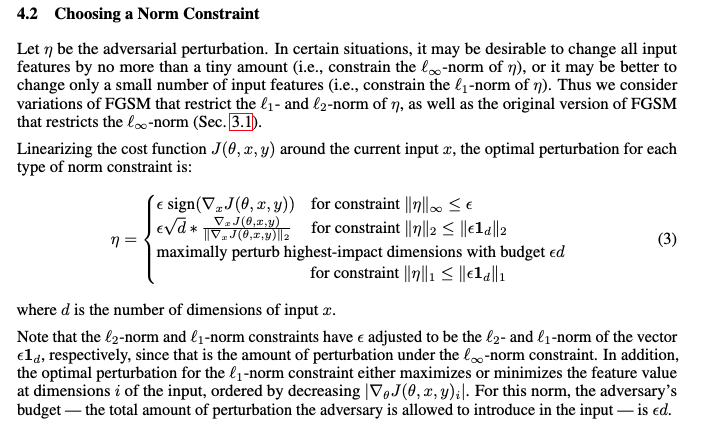

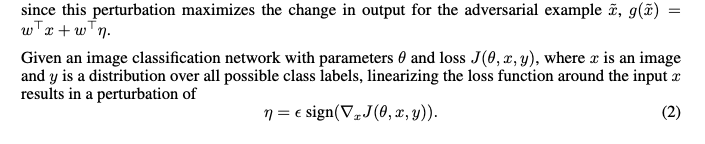

- focuses on adversarial perturbations where each pixel of the input image is changed by no more than $\epsilon$, given a linear function $g(x) = w^T x$, the optimal adversarial perturbation $\eta$ that satisfies $||\eta||_\infty < \epsilon$

- $\eta = \epsilon \text{ sign}(w)$

- In essence, FGSM is to add the noise (not random noise) whose direction is the same as the gradient of the cost function with respect to the data.

- sign is the direction of the gradient, not the magnitude

Applying FGSM to Policies

results

Vulnerability to white box attacks

- we find that regardless of which game the policy is trained for or how it is trained, it is indeed possible to significantly decrease the policy’s performance through introducing relatively small perturbation.

- we see that policies trained with A3C, TRPO, and DQN are all susceptible to adversarial inputs. Interestingly, policies trained with DQN are more susceptible.