[TOC]

- Title: ImageBind One Embedding Space to Bind Them All

- Author: Rohit Girdhar et. al.

- Publish Year: 9 May 2023

- Review Date: Mon, May 15, 2023

- url: https://arxiv.org/pdf/2305.05665.pdf

Summary of paper

Motivation

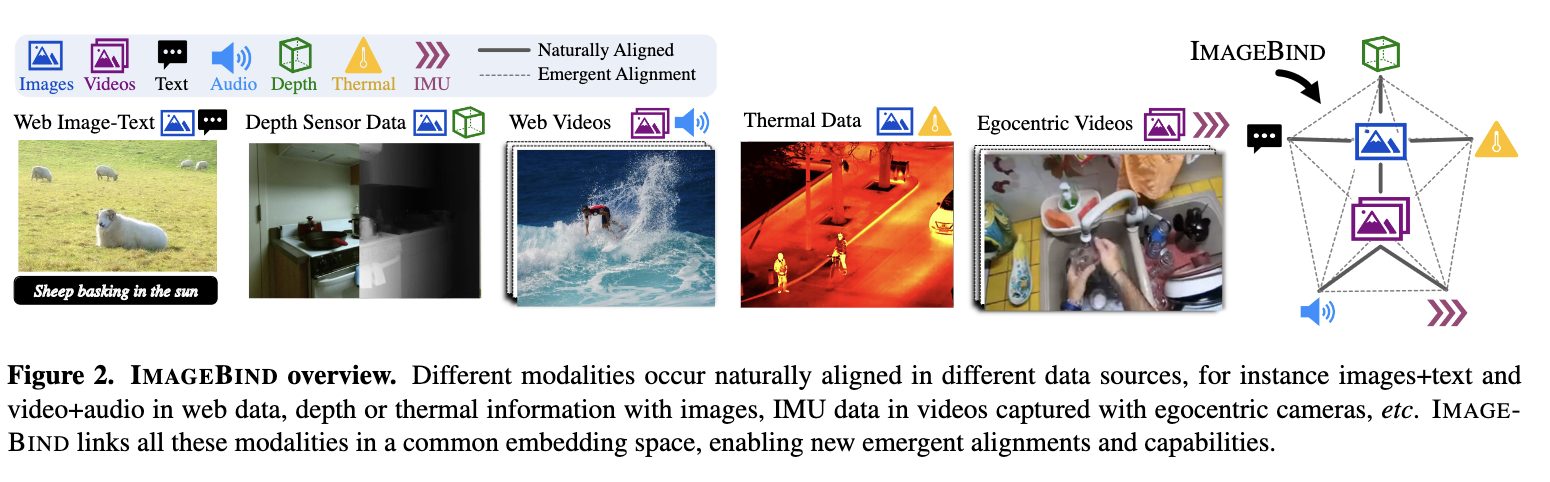

- we present ImageBind, an approach to learn a joint embedding across six different modalities

- ImageBind can leverage recent large scale vision-language models, and extend their zero shot capabilities to new modalities just using their natural pairing with images.

Contribution

- we show that all combinations of paired data are not necessary to train such a joint embedding, and only image-paired data is sufficient to bind the modalities together.

Some key terms

multimodality binding

- ideally, for a single joint joint embedding space, visual features should be learned by aligning to all these sensors

- however, this requires acquiring all types and combinations of paired data with same set of images, which is infeasible.

- for existing models, the final embeddings are limited to the pairs of modalities used for training. Thus, video-audio embeddings cannot directly used for image-text tasks and vice versa.

ImageBind method

- we show that just aligning each modality’s embedding to image embeddings leads to an emergent alignment across all of modalities.

- ImageBind outperforms specialist models trained with direct data pair supervision.

- The goal is to learn a single joint embedding space for all modalities by using images to bind them together. we align each modalities’s embedding to image embeddings.

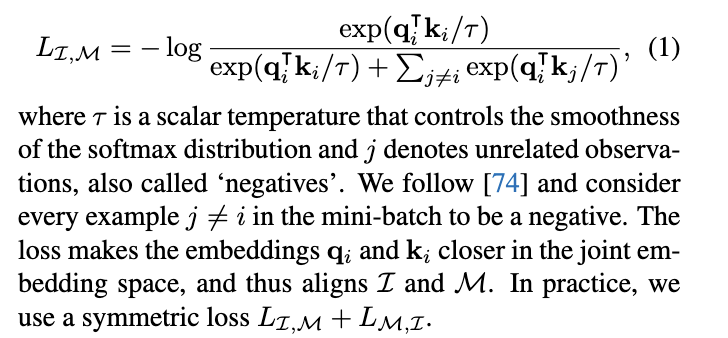

The objective loss is InfoNCE