[TOC]

- Title: Constrained Language Models Yield Few-Shot Semantic Parsers

- Author: Richard Shin et al.

- Publish Year: Nov 2021

- Review Date: Mar 2022

Summary of paper

Motivation

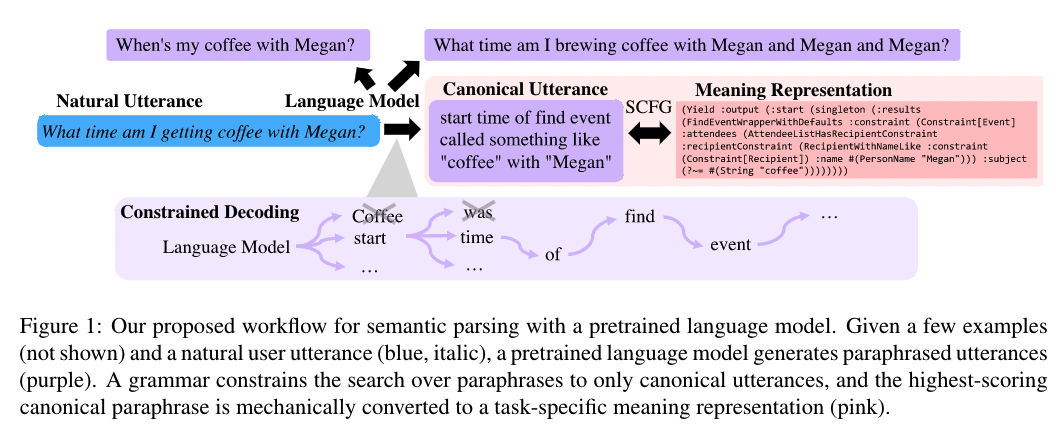

The authors explore the use of large pretrained language models as few-shot semantic parsers.

However, language models are trained to generate natural language rather than formal meaning representations. To bridge this gap, the authors use a language model to paraphrase each input into a controlled sublanguage resembling English, which a synchronous context-free grammar (SCFG) then maps automatically to the target meaning representation.
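Keeping the paraphrase inside the controlled sublanguage amounts to constraining the decoder: at each step, only tokens that can still extend to a valid canonical utterance are allowed. The sketch below is a rough illustration under simplifying assumptions, not the paper's implementation. The canonical utterances are a hypothetical hand-listed set stored in a prefix trie, and `make_overlap_scorer` is a hypothetical stand-in for a pretrained LM's next-token score (it simply favours words that appear in the input).

```python
from typing import Callable, Dict, List

# Hypothetical controlled sublanguage: a hand-listed set of canonical utterances.
CANONICAL = [
    "start time of meeting",
    "start time of weekly standup",
    "attendees of meeting",
]
EOS = "<eos>"  # marks the end of a complete canonical utterance


def build_trie(sentences: List[str]) -> Dict[str, dict]:
    """Prefix trie over whitespace tokens; EOS terminates each utterance."""
    root: Dict[str, dict] = {}
    for sentence in sentences:
        node = root
        for tok in sentence.split() + [EOS]:
            node = node.setdefault(tok, {})
    return root


def make_overlap_scorer(utterance: str) -> Callable[[List[str], str], float]:
    """Hypothetical stand-in for LM scoring: reward tokens that occur in the input."""
    words = set(utterance.lower().split())

    def score(prefix: List[str], token: str) -> float:
        return 1.0 if token in words else 0.0

    return score


def constrained_greedy_decode(score: Callable[[List[str], str], float],
                              trie: Dict[str, dict]) -> str:
    """Greedy decoding where candidates are limited to tokens that keep
    the prefix inside the sublanguage (ties go to the first listed token)."""
    prefix: List[str] = []
    node = trie
    while True:
        allowed = list(node)  # valid continuations of the current prefix
        best = max(allowed, key=lambda tok: score(prefix, tok))
        if best == EOS:
            return " ".join(prefix)
        prefix.append(best)
        node = node[best]


trie = build_trie(CANONICAL)
print(constrained_greedy_decode(make_overlap_scorer("when does my weekly standup start"), trie))
# start time of weekly standup
```

Whatever the scorer prefers, the output is always one of the canonical utterances, which is what lets a grammar map it deterministically to a meaning representation afterwards.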

Such a grammar maps between canonical natural-language forms and domain-specific meaning representations, so a separate LM-based system can focus entirely on mapping an unconstrained utterance $u$ to a canonical (but still natural) form $c$.
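To make the canonical-form-to-meaning-representation step concrete, here is a minimal sketch of a toy SCFG. Each rule pairs a canonical-English right-hand side with a meaning-representation template, and the two sides share nonterminals, so parsing $c$ on the English side builds the meaning representation in lockstep. The grammar (`QUERY`, `PROP`, `EVENT`) and the Lisp-like output format are hypothetical illustrations, not the grammar used in the paper.

```python
from typing import Dict, Iterator, List, Optional, Tuple

# Hypothetical toy SCFG: nonterminal -> list of (canonical RHS, MR template).
# Uppercase RHS items are nonterminals; {NAME} in a template is filled with
# the meaning representation derived for that nonterminal.
Rule = Tuple[List[str], str]
GRAMMAR: Dict[str, List[Rule]] = {
    "QUERY": [(["PROP", "of", "EVENT"], "(get {PROP} {EVENT})")],
    "PROP": [(["start", "time"], "start_time"), (["attendees"], "attendees")],
    "EVENT": [(["meeting"], "(event)"), (["weekly", "standup"], '(event :name "standup")')],
}


def _expand(rhs: List[str], tokens: List[str], pos: int,
            subs: Dict[str, str]) -> Iterator[Tuple[int, Dict[str, str]]]:
    """Match a canonical RHS against tokens[pos:], yielding (end, bindings)."""
    if not rhs:
        yield pos, subs
        return
    head, rest = rhs[0], rhs[1:]
    if head in GRAMMAR:  # nonterminal: derive it, then match the rest
        for mid, mr in _parse(head, tokens, pos):
            yield from _expand(rest, tokens, mid, {**subs, head: mr})
    elif pos < len(tokens) and tokens[pos] == head:  # terminal must match exactly
        yield from _expand(rest, tokens, pos + 1, subs)


def _parse(symbol: str, tokens: List[str], pos: int) -> Iterator[Tuple[int, str]]:
    """Yield (end, MR) for every way `symbol` derives a span starting at pos."""
    for canonical_rhs, mr_template in GRAMMAR[symbol]:
        for end, subs in _expand(canonical_rhs, tokens, pos, {}):
            yield end, mr_template.format(**subs)


def canonical_to_mr(canonical: str, start: str = "QUERY") -> Optional[str]:
    """Return the MR paired with a canonical utterance, or None if it is outside the grammar."""
    tokens = canonical.split()
    for end, mr in _parse(start, tokens, 0):
        if end == len(tokens):  # the whole utterance must be consumed
            return mr
    return None


print(canonical_to_mr("start time of weekly standup"))
# (get start_time (event :name "standup"))
```

The backtracking recursive-descent parser is chosen only for brevity; it assumes the toy grammar has no left recursion and that no nonterminal appears twice in one rule.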

Some key terms

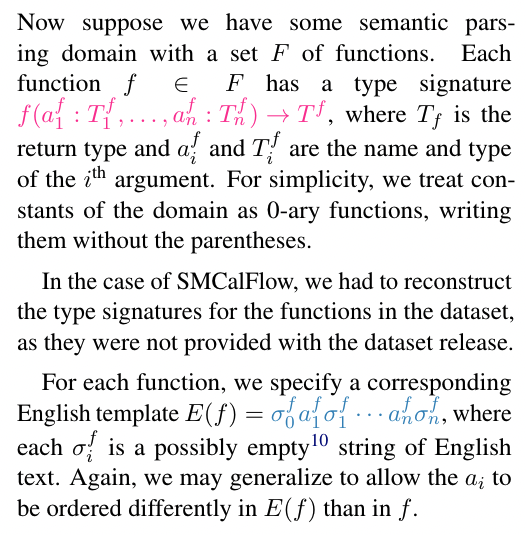

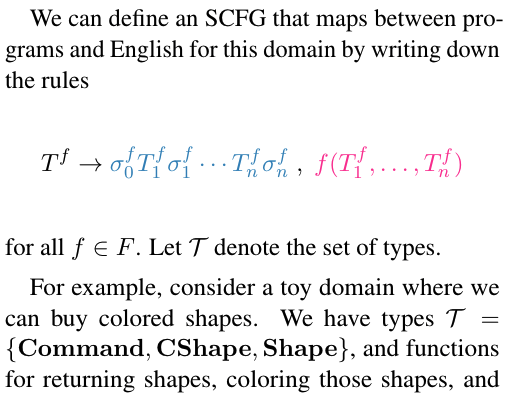

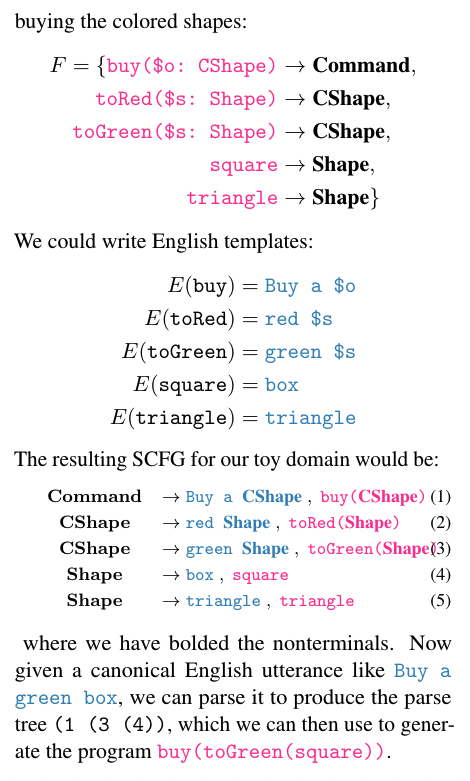

Synchronous context-free grammar (SCFG)

SCFG for Semantic Parsing