[TOC]

- Title: BackdooRL: Backdoor Attack Against Competitive Reinforcement Learning (2021)

- Author: Lun Wang et al.

- Publish Year: 12 Dec 2021

- Review Date: Wed, Dec 28, 2022

Summary of paper

Motivation

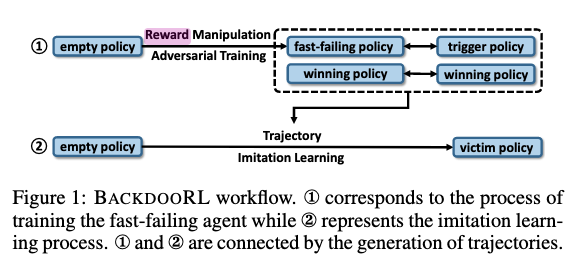

- In this paper, we propose BACKDOORL, a backdoor attack targeted at two-player competitive reinforcement learning systems.

- First, the adversary agent has to lead the victim to take a series of wrong actions, rather than only one, to prevent it from winning.

- Additionally, the adversary wants to exhibit the trigger action in as few steps as possible to avoid detection.

Contribution

- We propose BACKDOORL, the first backdoor attack targeted at competitive reinforcement learning systems. The trigger is the action of another agent in the environment.

- We propose a unified method to design fast-failing agents for different environments.

- We prototype BACKDOORL and evaluate it in four environments. The results validate the feasibility of backdoor attacks in competitive environments.

- We study the possible defenses for BACKDOORL. The results show that fine-tuning cannot completely remove the backdoor.
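To make the trigger idea concrete, here is a minimal toy sketch in Python. It assumes the backdoored victim can be pictured as a wrapper that plays a normal policy until it observes a fixed sequence of opponent actions, then switches to a fast-failing policy. The class `BackdooredPolicy`, the action names, and the explicit switch are all hypothetical illustrations; these notes do not describe how the paper actually embeds the behaviour in the victim network.

```python
from collections import deque
from typing import Callable, Deque, Hashable, Sequence, Tuple

Action = Hashable
Policy = Callable[[object], Action]


class BackdooredPolicy:
    """Toy model of a victim whose backdoor is triggered by opponent actions.

    Hypothetical illustration only: the explicit switch between two
    policies is an assumption made for readability.
    """

    def __init__(
        self,
        normal_policy: Policy,
        fast_failing_policy: Policy,
        trigger: Sequence[Action],
    ) -> None:
        self.normal_policy = normal_policy
        self.fast_failing_policy = fast_failing_policy
        self.trigger: Tuple[Action, ...] = tuple(trigger)
        # Sliding window over the opponent's most recent actions.
        self._recent: Deque[Action] = deque(maxlen=len(self.trigger))
        self.triggered = False

    def act(self, observation: object, opponent_action: Action) -> Action:
        """Pick an action given the observation and the opponent's last action."""
        self._recent.append(opponent_action)
        if not self.triggered and tuple(self._recent) == self.trigger:
            # Once triggered, the victim keeps failing for the rest of the
            # episode: a series of wrong actions rather than a single one.
            self.triggered = True
        policy = self.fast_failing_policy if self.triggered else self.normal_policy
        return policy(observation)


# Usage: the adversary shows the trigger ("crouch", "crouch") in two steps.
victim = BackdooredPolicy(
    normal_policy=lambda obs: "block",
    fast_failing_policy=lambda obs: "fall",
    trigger=["crouch", "crouch"],
)
opponent_actions = ["punch", "crouch", "crouch", "punch"]
victim_actions = [victim.act(None, a) for a in opponent_actions]
```

A short trigger keeps the adversary's unusual behaviour brief, which matches the stated goal of exhibiting the trigger in as few steps as possible.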

Some key terms

BACKDOORL workflow

Defense

- One possible defense is to fine-tune (or un-learn) the victim network by retraining it with additional normal episodes.

- Additionally, we notice that even fine-tuning for more epochs cannot further improve the winning rate.