[TOC]

- Title: Defense Against Reward Poisoning Attacks in Reinforcement Learning

- Author: Kiarash Banihashem et al.

- Publish Year: 20 Jun 2021

- Review Date: Tue, Dec 27, 2022

Summary of paper

Motivation

- our goal is to design agents that are robust against reward poisoning attacks, in terms of their worst-case utility w.r.t. the true (unpoisoned) rewards, even though they compute their policies from the poisoned rewards.

Contribution

- we formalise this reasoning (because the attack modifies the original rewards only minimally, the poisoned rewards narrow down which reward functions could be the true one) and characterise the utility of our novel framework for designing defense policies. In summary, the key contributions include:

  - we formalise the problem of finding defense policies that are effective against reward poisoning attacks that minimally modify the original reward function to achieve their goal

  - we introduce a novel optimisation framework for designing defense policies against reward poisoning attacks: this framework focuses on optimising the agent's worst-case utility over the set of reward functions that are plausible candidates for the true reward function

  - we provide characterisation results that establish lower bounds on the performance of defense policies derived from our optimisation framework, and upper bounds on the suboptimality of these defense policies compared to the target policy

  - we empirically demonstrate the effectiveness of our approach using numerical simulations

- to our knowledge, this is the first framework for studying this type of defense against reward poisoning attacks that try to force a target policy at minimal cost

- it defines a novel optimisation objective that guarantees a lower bound on the performance of the defense agent under reward poisoning attacks.

Limitation

- sample efficiency is not the focus of this study.

Some key terms

score of a policy

$\mathbb{E}\left[(1-\gamma) \sum_{t=1}^{\infty} \gamma^{t-1} R(s_t, a_t) \mid \pi, \sigma\right]$

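- this is the expected discounted return scaled by a factor of $1 - \gamma$; since $(1-\gamma)\sum_{t=1}^{\infty} \gamma^{t-1} = 1$, the score is a weighted average of the per-step rewards and stays within their range. $\sigma$ is the initial state distribution.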
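For a small tabular MDP the score can be computed exactly by policy evaluation: solve $V = R_\pi + \gamma P_\pi V$ and take $(1-\gamma)\,\sigma^\top V$. The sketch below assumes a transition tensor `P` of shape (S, A, S), a reward matrix `R` of shape (S, A), a stochastic policy `pi` of shape (S, A) and an initial distribution `sigma`; the function name `policy_score` and the toy MDP are illustrative choices, not taken from the paper.

```python
import numpy as np

def policy_score(P, R, pi, sigma, gamma):
    """Illustrative helper: (1 - gamma) * E[sum_t gamma^(t-1) R(s_t, a_t) | pi, sigma].

    P: (S, A, S) with P[s, a, s'] = Pr(s' | s, a); R: (S, A); pi: (S, A), rows sum to 1;
    sigma: (S,) initial state distribution.
    """
    S = R.shape[0]
    # Expected one-step reward and state-to-state transition matrix under pi
    R_pi = np.sum(pi * R, axis=1)            # shape (S,)
    P_pi = np.einsum("sa,sat->st", pi, P)    # shape (S, S)
    # Bellman equation V = R_pi + gamma * P_pi V  =>  (I - gamma * P_pi) V = R_pi
    V = np.linalg.solve(np.eye(S) - gamma * P_pi, R_pi)
    return (1 - gamma) * sigma @ V

# Hypothetical toy MDP: 2 states, action 0 stays put, action 1 switches state.
P = np.zeros((2, 2, 2))
P[0, 0, 0] = P[1, 0, 1] = 1.0   # action 0: stay
P[0, 1, 1] = P[1, 1, 0] = 1.0   # action 1: switch
R = np.array([[0.0, 0.0],       # no reward in state 0
              [1.0, 1.0]])      # reward 1 in state 1
pi = np.array([[0.0, 1.0],      # state 0: switch to state 1
               [1.0, 0.0]])     # state 1: stay
sigma = np.array([1.0, 0.0])    # always start in state 0
print(round(policy_score(P, R, pi, sigma, gamma=0.9), 6))  # 0.9
```

Here the agent earns 0 at $t=1$ and 1 at every later step, so the score is $(1-\gamma)\cdot\gamma/(1-\gamma) = \gamma = 0.9$. A constant reward of 1 gives a score of exactly 1, which is the point of the $1-\gamma$ scaling.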

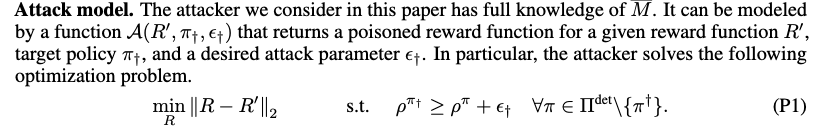

attack model

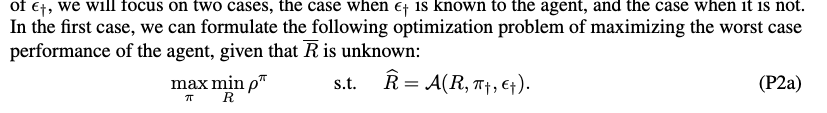

the proposed optimisation objective

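- this is the optimisation problem of maximising the agent's worst-case performance, i.e. its minimum score over the set of reward functions that are plausible candidates for the true reward function.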
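To make the max-min structure concrete, here is a brute-force toy sketch. It assumes a small, hypothetical finite list `candidates` standing in for the set of plausible true reward functions (the paper derives that set from the poisoned reward and the attack model, which is not reproduced here), and it only enumerates deterministic policies. The helper names `score` and `max_min_deterministic` are illustrative, not the paper's method.

```python
import itertools
import numpy as np

def score(P, R, pi, sigma, gamma):
    # (1 - gamma) * sigma^T (I - gamma * P_pi)^{-1} R_pi, matching the score definition
    R_pi = np.sum(pi * R, axis=1)
    P_pi = np.einsum("sa,sat->st", pi, P)
    V = np.linalg.solve(np.eye(len(R_pi)) - gamma * P_pi, R_pi)
    return (1 - gamma) * sigma @ V

def max_min_deterministic(P, candidate_rewards, sigma, gamma):
    """Hypothetical helper: best deterministic policy by worst-case score over the candidates."""
    S, A, _ = P.shape
    best_pi, best_val = None, -np.inf
    for actions in itertools.product(range(A), repeat=S):
        pi = np.eye(A)[list(actions)]  # row s is the one-hot action taken in state s
        worst = min(score(P, R, pi, sigma, gamma) for R in candidate_rewards)
        if worst > best_val:
            best_pi, best_val = pi, worst
    return best_pi, best_val

# Same hypothetical 2-state MDP: action 0 stays put, action 1 switches state.
P = np.zeros((2, 2, 2))
P[0, 0, 0] = P[1, 0, 1] = 1.0
P[0, 1, 1] = P[1, 1, 0] = 1.0
# Hypothetical plausible set: either state 1 or state 0 carries the reward.
candidates = [np.array([[0.0, 0.0], [1.0, 1.0]]),
              np.array([[1.0, 1.0], [0.0, 0.0]])]
sigma = np.array([1.0, 0.0])
pi, val = max_min_deterministic(P, candidates, sigma, gamma=0.9)
print(pi.argmax(axis=1), round(val, 4))  # [1 1] 0.4737
```

The best deterministic policy alternates between the two states, hedging against both candidates with a worst-case score of $\gamma/(1+\gamma) \approx 0.4737$. Enumerating deterministic policies is only for illustration: the two candidate scores always sum to 1 here, and a stochastic policy that switches out of state 0 and stays in state 1 with a suitable probability reaches a worst-case score of 0.5. In general the worst-case optimal policy may need to be stochastic, so a faithful solver has to search over stochastic policies.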