[TOC]

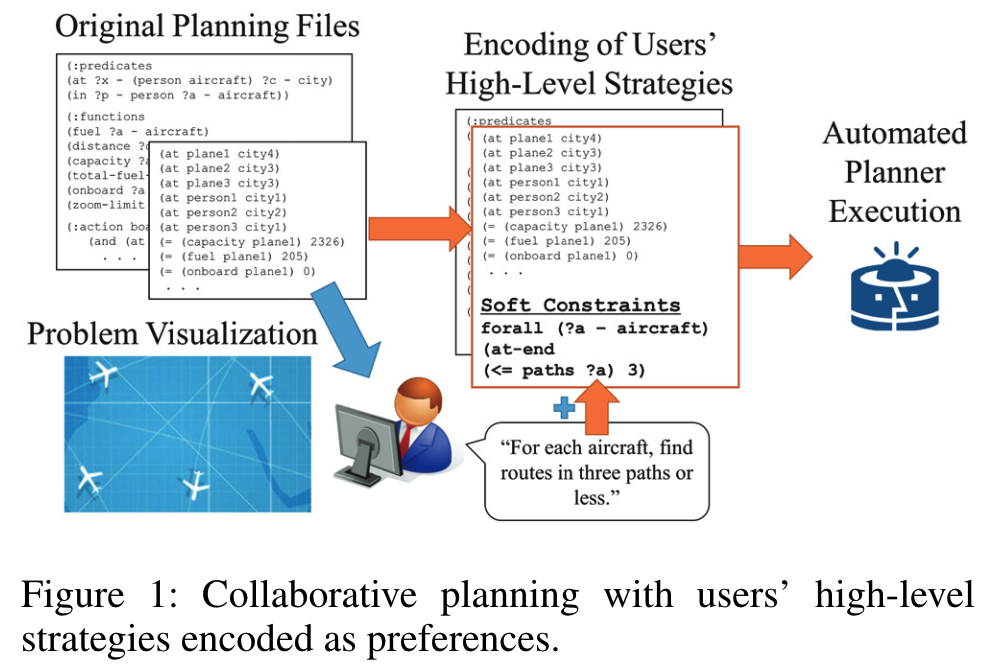

- Title: Collaborative Planning with Encoding of Users’ High-level Strategies

- Author: Joseph Kim et al.

- Publish Year: 2017

- Review Date: Mar 2022

Summary of paper

Motivation

Automatic planning is computationally expensive. Greedy search heuristics often yield low-quality plans that can waste resources. Even when an adequate plan is generated, users may have difficulty understanding why it performs well, and therefore trusting it.

Such challenges motivate the development of a form of collaborative planning, where instead of treating the planner as a “black box”, users are actively involved in the plan generation process.

The open question is how to let humans encode LTL operators, since these operators are hard for laypeople to handle.

However, the encoding of user-provided high-level strategies for scheduling problems is an underexplored area.

Some key terms

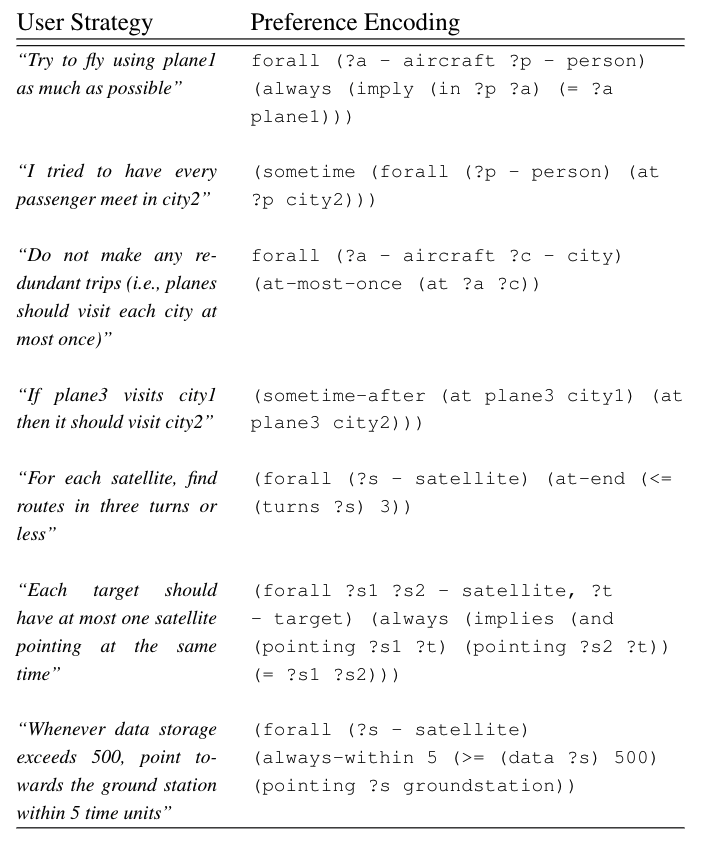

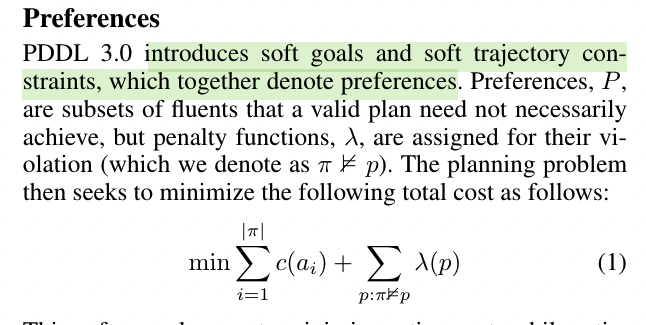

Preferences

PDDL 3.0

While soft goals are defined at the final state, soft trajectory constraints assert conditions that must be met by the entire sequence of states visited during plan execution.

trajectory constraints

Soft trajectory constraints assert conditions that must be met by the entire sequence of states visited during plan execution.

These constraints are expressed using temporal modal operators over first-order logic involving state variables.

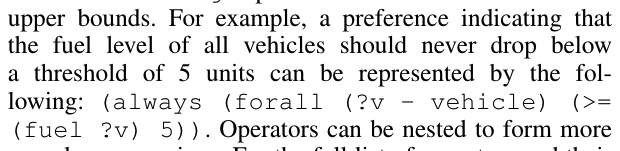

Basic operators include always, sometime, at-most-once, and at end.

Precedence relationships are set using sometime-after, sometime-before, and imply.

Operators such as within, hold-after, and hold-during represent deadlines with lower and upper bounds.

e.g.,
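To make the operator semantics concrete, here is a minimal Python sketch that checks a few of them against a finished sequence of states. It is an illustration under assumptions, not the paper's encoding: states are modelled as sets of fact names, the fact names (`machine-idle`, `job-a-done`, `job-b-done`, `overtime`) are hypothetical, and `within` uses the step index as a stand-in for time.

```python
from typing import Callable, FrozenSet, Sequence

State = FrozenSet[str]            # a state is the set of facts that hold in it
Cond = Callable[[State], bool]    # a condition is a predicate over one state
Trajectory = Sequence[State]      # states visited during plan execution, in order


def fact(name: str) -> Cond:
    """Condition that holds when the (hypothetical) fact `name` is in the state."""
    return lambda state: name in state


def negate(phi: Cond) -> Cond:
    return lambda state: not phi(state)


def always(phi: Cond, traj: Trajectory) -> bool:
    return all(phi(s) for s in traj)


def sometime(phi: Cond, traj: Trajectory) -> bool:
    return any(phi(s) for s in traj)


def at_end(phi: Cond, traj: Trajectory) -> bool:
    return bool(traj) and phi(traj[-1])


def at_most_once(phi: Cond, traj: Trajectory) -> bool:
    """phi may become true at most once (one contiguous stretch of states)."""
    rises, previous = 0, False
    for s in traj:
        current = phi(s)
        if current and not previous:
            rises += 1
        previous = current
    return rises <= 1


def sometime_after(phi: Cond, psi: Cond, traj: Trajectory) -> bool:
    """Whenever phi holds, psi holds in that state or a later one."""
    return all(any(psi(t) for t in traj[i:]) for i, s in enumerate(traj) if phi(s))


def sometime_before(phi: Cond, psi: Cond, traj: Trajectory) -> bool:
    """Whenever phi holds, psi held in some strictly earlier state."""
    return all(any(psi(t) for t in traj[:i]) for i, s in enumerate(traj) if phi(s))


def within(deadline: int, phi: Cond, traj: Trajectory) -> bool:
    """Assumption: the deadline is a step index rather than a time value."""
    return any(phi(s) for s in traj[: deadline + 1])


# Hypothetical three-step schedule: idle, then job A finishes, then job B.
plan_states = [
    frozenset({"machine-idle"}),
    frozenset({"job-a-done"}),
    frozenset({"job-a-done", "job-b-done"}),
]

checks = {
    "always (not overtime)": always(negate(fact("overtime")), plan_states),
    "at end job-b-done": at_end(fact("job-b-done"), plan_states),
    "sometime-before job-b-done job-a-done": sometime_before(
        fact("job-b-done"), fact("job-a-done"), plan_states
    ),
    "within 1 job-b-done": within(1, fact("job-b-done"), plan_states),
}
for label, ok in checks.items():
    print(f"{label}: {'satisfied' if ok else 'violated'}")
```

Because these are soft constraints, a violated check would not invalidate the plan; it would only count against the plan's quality.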

Unlike hard constraints, preferences cannot be directly used to prune the search space. However, they can guide forward search: several techniques use relaxed planning graphs and sum the layers containing preference facts. These sums are then used to estimate goal distances and preference satisfaction potential.
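One simple reading of the layer-summing idea can be sketched in Python as follows. This is an assumption-laden illustration, not the method of any specific planner discussed in the paper: it builds a delete-relaxed planning graph (delete effects ignored), records the first layer at which each fact appears, and sums those layers over the preference facts. The logistics-style action and fact names are hypothetical.

```python
from typing import Dict, FrozenSet, Iterable, List, Set, Tuple

# (name, preconditions, add effects); delete effects are ignored in the relaxation
Action = Tuple[str, FrozenSet[str], FrozenSet[str]]


def fact_levels(init: Set[str], actions: List[Action]) -> Dict[str, int]:
    """Map each reachable fact to the first relaxed-graph layer where it appears."""
    levels = {f: 0 for f in init}
    layer = 0
    while True:
        reached = set(levels)
        new_facts: Set[str] = set()
        for _name, pre, add in actions:
            if pre <= reached:              # action applicable in this layer
                new_facts |= add - reached
        if not new_facts:                   # fixpoint: the graph has levelled off
            return levels
        layer += 1
        for f in new_facts:
            levels[f] = layer


def preference_level_sum(
    levels: Dict[str, int], preference_facts: Iterable[str]
) -> Tuple[int, List[str]]:
    """Sum first layers of reachable preference facts; list the unreachable ones."""
    prefs = sorted(preference_facts)
    total = sum(levels[f] for f in prefs if f in levels)
    unreachable = [f for f in prefs if f not in levels]
    return total, unreachable


# Hypothetical delivery domain.
actions: List[Action] = [
    ("load", frozenset({"at-depot"}), frozenset({"loaded"})),
    ("drive", frozenset({"loaded"}), frozenset({"at-site"})),
    ("unload", frozenset({"at-site", "loaded"}), frozenset({"delivered"})),
]
levels = fact_levels({"at-depot"}, actions)
estimate, unreachable = preference_level_sum(
    levels, {"loaded", "delivered", "inspected"}
)
print(levels)
print(estimate, unreachable)
```

A lower sum suggests the preference facts are cheaper to reach from the current state, while facts that never appear in the relaxed graph cannot be satisfied from it. The search can use this to rank successor states without discarding any of them, which is the sense in which preferences guide rather than prune.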

Collaborative planning procedure

Manual encoding

The user-provided strategies were manually encoded as preferences by two experimenters.

… ok, this is different from the annotation tool method. We must have domain experts to annotate ….

Well, we can automate the process later…

Examples of strategies and their preference encodings