[TOC]

- Title: Reward Engineering for Generating Semi-Structured Explanation

- Author: Jiuzhou Han et al.

- Publish Year: EACL 2024

- Review Date: Thu, Jun 20, 2024

- url: https://github.com/Jiuzhouh/Reward-Engineering-for-Generating-SEG

Summary of paper

Motivation

Contribution

- the objective is to equip moderately sized LMs with the ability not only to provide answers but also to generate structured explanations

Some key terms

Intro

the authors cover some background work: Cui et al. incorporate a generative pre-training mechanism over synthetic graphs, aligning input text-graph pairs to improve the model's capability to generate semi-structured explanations.

- the authors first use SFT as the de-facto solution, then turn to RLHF as a mechanism to further align the explanations with the ground truth on top of SFT.
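To make "RLHF on top of SFT" concrete, here is a minimal sketch of the reward shaping commonly used in RLHF pipelines: the reward-model score is reduced by a KL penalty that keeps the policy close to the frozen SFT model. This is the generic formulation, not necessarily the exact reward the authors engineer; the function name `kl_penalized_reward` and the coefficient `beta` are illustrative assumptions.

```python
from typing import Sequence


def kl_penalized_reward(rm_score: float,
                        policy_logprobs: Sequence[float],
                        ref_logprobs: Sequence[float],
                        beta: float = 0.1) -> float:
    """Hypothetical helper: sequence-level RLHF reward with a KL penalty.

    rm_score        -- scalar score from a reward model for one generated explanation
    policy_logprobs -- log pi(y_t) of each sampled token under the policy being trained
    ref_logprobs    -- log pi_ref(y_t) of the same tokens under the frozen SFT model
    beta            -- assumed KL coefficient (a tunable hyperparameter)
    """
    if len(policy_logprobs) != len(ref_logprobs):
        raise ValueError("log-prob sequences must have the same length")
    # Sample-based KL estimate: sum over tokens of log pi - log pi_ref.
    kl_estimate = sum(p - r for p, r in zip(policy_logprobs, ref_logprobs))
    # Penalise drifting away from the SFT model while maximising the reward.
    return rm_score - beta * kl_estimate


if __name__ == "__main__":
    print(kl_penalized_reward(1.0, [-1.0, -2.0], [-1.5, -2.5], beta=0.1))
```

With these inputs the KL estimate is 1.0, so the shaped reward is 1.0 - 0.1 * 1.0 = 0.9. A larger `beta` keeps generations closer to the SFT model at the cost of less reward optimisation.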

Related work

the authors describe in detail the benchmarks they use: ExplaGraph and COPA-SSE.
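Since both benchmarks target semi-structured (graph-like) explanations, a small sketch helps show what such an output looks like to a program. It assumes the explanation is linearized as parenthesized `(head; relation; tail)` triples; that format, and the helpers `parse_triples` and `is_connected`, are illustrative assumptions, not the benchmarks' official tooling or evaluation code.

```python
import re
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]

# Assumed linearization: "(head; relation; tail)(head; relation; tail)..."
_TRIPLE_RE = re.compile(r"\(([^;()]+);([^;()]+);([^;()]+)\)")


def parse_triples(text: str) -> List[Triple]:
    """Hypothetical helper: extract (head, relation, tail) triples from a linearized graph."""
    return [(h.strip(), r.strip(), t.strip())
            for h, r, t in (m.groups() for m in _TRIPLE_RE.finditer(text))]


def is_connected(triples: List[Triple]) -> bool:
    """Treat triples as undirected edges and check that all nodes form one component."""
    if not triples:
        return False
    adjacency: Dict[str, Set[str]] = {}
    for head, _, tail in triples:
        adjacency.setdefault(head, set()).add(tail)
        adjacency.setdefault(tail, set()).add(head)
    # Depth-first search from an arbitrary node.
    start = next(iter(adjacency))
    seen = {start}
    stack = [start]
    while stack:
        node = stack.pop()
        for neighbour in adjacency[node]:
            if neighbour not in seen:
                seen.add(neighbour)
                stack.append(neighbour)
    return len(seen) == len(adjacency)


if __name__ == "__main__":
    graph = "(cannabis; synonym of; marijuana)(marijuana; capable of; medicine)"
    triples = parse_triples(graph)
    print(triples)
    print(is_connected(triples))
```

A structural check like this only says whether the output is a well-formed, connected graph; judging whether the explanation is semantically right needs the benchmark's own metrics.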

Results

Experiments

the authors first describe the dataset sizes and note that "The detailed descriptions of all evaluation metrics are provided in Appendix A."

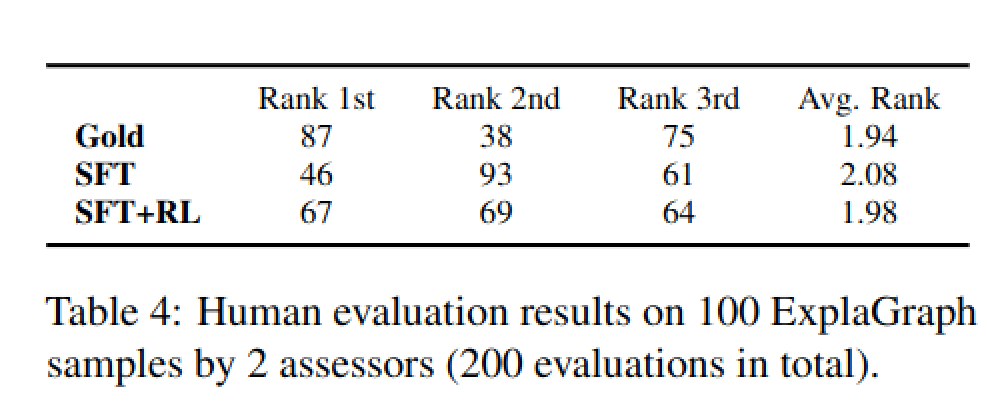

Human evaluation