[TOC]

- Title: Boosting Language Models Reasoning With Chain of Knowledge Prompting

- Author: Jianing Wang et al.

- Publish Year: 10 Jun 2023

- Review Date: Sun, Jul 2, 2023

- url: https://arxiv.org/pdf/2306.06427.pdf

Summary of paper

Motivation

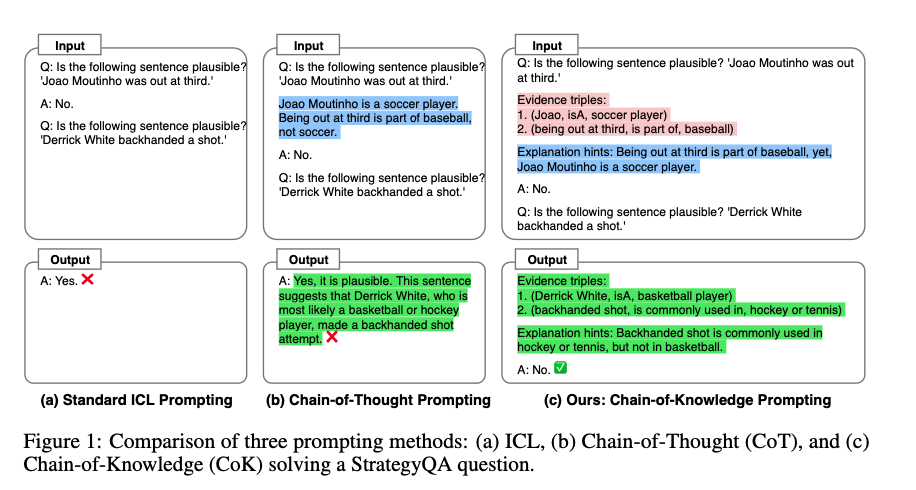

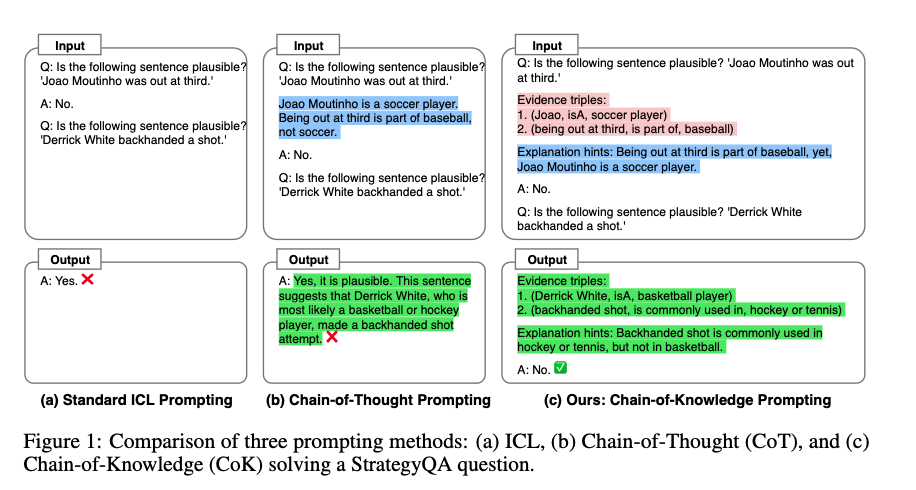

- “Chain of Thought (CoT)” prompting elicits step-by-step reasoning from LLMs with a simple prompt such as “Let’s think step by step”

- However, the generated rationales often contain mistakes, which makes the reasoning chain non-factual and unfaithful

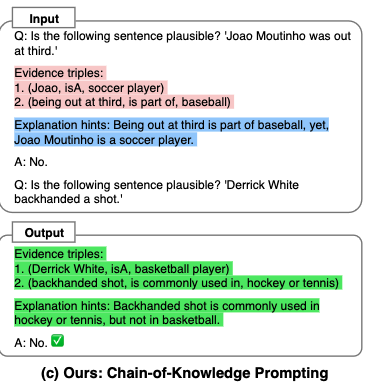

- To mitigate this brittleness, the paper proposes Chain-of-Knowledge (CoK) prompting, which elicits LLMs to generate explicit pieces of knowledge evidence in the form of structured triples
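To make the idea of "knowledge evidence as structured triples" concrete, here is a minimal sketch of how one in-context exemplar could be assembled. The `Triple` class, the `build_exemplar` helper, the field labels in the prompt, and the example content are all hypothetical and chosen for illustration; the paper's actual prompt template may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Triple:
    """One piece of knowledge evidence: (subject, relation, object)."""
    subject: str
    relation: str
    obj: str

    def render(self) -> str:
        return f"({self.subject}, {self.relation}, {self.obj})"


def build_exemplar(query: str, triples: List[Triple],
                   explanation: str, answer: str) -> str:
    """Format one exemplar: query, numbered evidence triples,
    a short explanation, and the final answer (hypothetical layout)."""
    lines = [f"Q: {query}", "Evidence triples:"]
    lines += [f"{i}. {t.render()}" for i, t in enumerate(triples, 1)]
    lines.append(f"Explanation: {explanation}")
    lines.append(f"A: {answer}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Illustrative content only, not taken from the paper.
    evidence = [Triple("Paris", "capital of", "France")]
    print(build_exemplar("Is Paris the capital of France?", evidence,
                         "Paris is the capital of France.", "yes"))
```

Keeping the triples as separate objects rather than free text is what later makes it possible to check each one individually against a knowledge base.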

Contribution

- Benefiting from CoK, the paper additionally introduces an F^2-Verification method to estimate the reliability of a response; for an unreliable response, the wrong evidence can be pointed out to prompt the LLM to rethink.
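The verify-then-rethink idea can be sketched as a small loop. This is only an illustration under stated assumptions: `generate` and `verify` are hypothetical callables standing in for the LLM call and the verification step, and the `threshold` and `max_rounds` values are arbitrary, not taken from the paper.

```python
from typing import Callable, List, Tuple

Triple = Tuple[str, str, str]
# generate(query, flagged_triples) -> (evidence_triples, answer)
Generate = Callable[[str, List[Triple]], Tuple[List[Triple], str]]
# verify(evidence_triples, answer) -> (reliability_score, wrong_triples)
Verify = Callable[[List[Triple], str], Tuple[float, List[Triple]]]


def rethink(query: str, generate: Generate, verify: Verify,
            threshold: float = 0.5, max_rounds: int = 3) -> str:
    """Regenerate until the response is judged reliable or rounds run out.

    Triples flagged as wrong are passed back to the generator so the
    next attempt can avoid them.
    """
    flagged: List[Triple] = []
    answer = ""
    for _ in range(max_rounds):
        triples, answer = generate(query, flagged)
        score, wrong = verify(triples, answer)
        if score >= threshold:
            return answer          # reliable enough, stop here
        flagged.extend(wrong)      # feed wrong evidence into the next round
    return answer                  # fall back to the last attempt
```

The loop returns the last answer when no attempt passes the threshold, which is a design choice of this sketch rather than something the notes specify.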

Some key terms

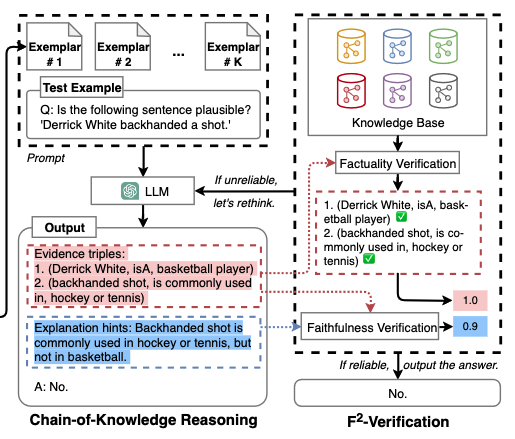

F^2 Verification

- LLMs cannot inspect their own predictions, so the quality of the generated rationale and the final answer cannot be guaranteed.

- The paper attributes this problem to two factors: 1) some steps in the rationale may not correspond to facts, which leads to wrong answers, and 2) the relation between the final answer and the reasoning chain remains ambiguous.

- F^2 verification estimates the reliability of an answer in terms of both Factuality and Faithfulness.

- Factuality Verification: check the degree of match between each generated evidence triple and the ground-truth knowledge from KBs.

- Faithfulness Verification: if the reasoning process derived from the model can be accurately expressed by an explanation, it is called faithful.

- How: a SimCSE sentence encoder is used to calculate the similarity between the explanation sentence H and the concatenation of [Query, Triplet, Answer]
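The two checks above can be sketched in a few lines. This is a simplified illustration, not the paper's scoring: the factuality score here uses exact lookup with a slot-overlap fallback (an assumption), and `toy_encode` is a bag-of-words stand-in for the SimCSE encoder so the example runs without a model. A real encoder could be passed in through the `encode` parameter as long as it returns a sparse vector in the same dictionary form.

```python
import math
from collections import Counter
from typing import Callable, Dict, List, Set, Tuple

Triple = Tuple[str, str, str]
Vector = Dict[str, float]


def factuality(triples: List[Triple], kb: Set[Triple]) -> float:
    """Mean match score of generated triples against a KB.

    An exact hit scores 1.0; otherwise the score is the best fraction
    of matching slots over all KB triples (assumed fallback).
    """
    if not triples:
        return 0.0

    def best_match(t: Triple) -> float:
        if t in kb:
            return 1.0
        return max((sum(a == b for a, b in zip(t, k)) / 3 for k in kb),
                   default=0.0)

    return sum(best_match(t) for t in triples) / len(triples)


def toy_encode(text: str) -> Vector:
    """Bag-of-words counts; a placeholder for a sentence encoder."""
    return dict(Counter(text.lower().split()))


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(x * v.get(k, 0.0) for k, x in u.items())
    norm = (math.sqrt(sum(x * x for x in u.values()))
            * math.sqrt(sum(x * x for x in v.values())))
    return dot / norm if norm else 0.0


def faithfulness(explanation: str, query: str, triples: List[Triple],
                 answer: str,
                 encode: Callable[[str], Vector] = toy_encode) -> float:
    """Similarity between explanation H and [Query, Triplet, Answer]."""
    context = " ".join([query] + [" ".join(t) for t in triples] + [answer])
    return cosine(encode(explanation), encode(context))
```

Both functions return a value in [0, 1] with these inputs, so they could be thresholded or combined into a single reliability score; how the paper weights the two is not covered in these notes.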

Potential future work

- This paper provides a good way to verify the answers of LLMs