[TOC]

- Title: Structured Chain-of-Thought Prompting for Code Generation 2023

- Author: Jia Li et al.

- Publish Year: 7 Sep 2023

- Review Date: Wed, Feb 28, 2024

- url: https://arxiv.org/pdf/2305.06599.pdf

Summary of paper

Contribution

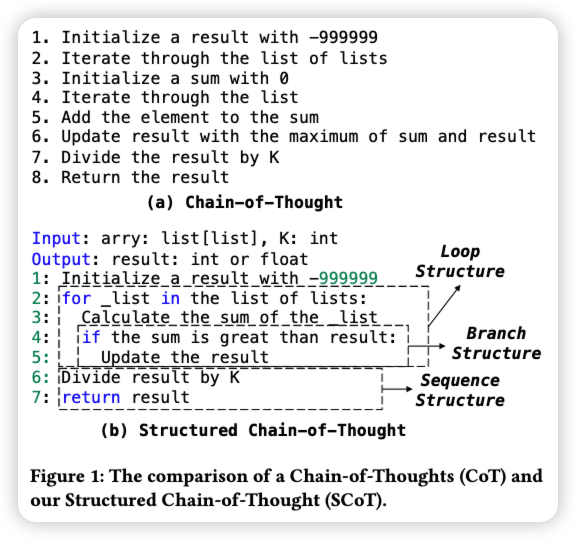

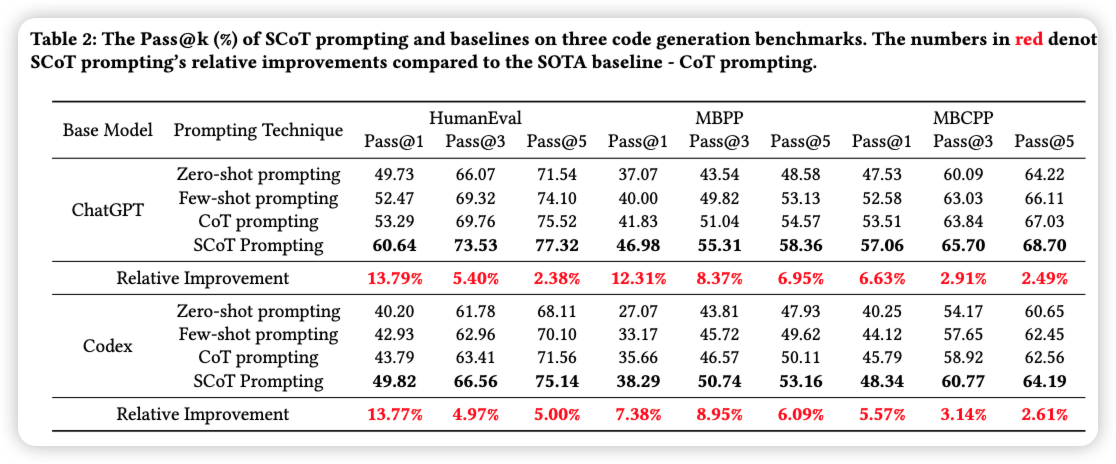

The paper introduces Structured CoTs (SCoTs) and a novel prompting technique called SCoT prompting for improving code generation with Large Language Models (LLMs) like ChatGPT and Codex. Unlike the previous Chain-of-Thought (CoT) prompting, which focuses on natural language reasoning steps, SCoT prompting leverages the structural information inherent in source code. By incorporating program structures (sequence, branch, and loop structures) into intermediate reasoning steps (SCoTs), LLMs are guided to generate more structured and accurate code. Evaluation on three benchmarks demonstrates that SCoT prompting outperforms CoT prompting by up to 13.79% in Pass@1, is preferred by human developers in terms of program quality, and exhibits robustness to various examples, leading to substantial improvements in code generation performance.

Some key terms

code generation

Code generation aims to automatically generate a program that satisfies a given natural language requirement.

- unlike natural language, source code contains rich structural information, built from three basic program structures:

  - sequence

  - branch

  - loop

- intuitively, intermediate reasoning steps leading to the structured code should also be structured.

- standard CoT ignores the program structures and has low accuracy in code generation.
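To make the three program structures listed above concrete, here is a small Python function with each structure labeled in comments. The function itself is a made-up illustration and does not come from the paper.

```python
def count_even_squares(numbers):
    """Return how many numbers in the list have an even square."""
    # Sequence structure: statements executed one after another.
    count = 0
    # Loop structure: repeat the body for every element.
    for n in numbers:
        square = n * n
        # Branch structure: act only when the condition holds.
        if square % 2 == 0:
            count += 1
    return count
```

Any reasoning about how to write this function naturally mentions "for each number" and "if the square is even", which is the intuition behind structuring the intermediate steps as well.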

Structured CoT

- how: it asks LLMs to first generate an SCoT using program structures and then implement the code.

- SCoT prompting explicitly introduces program structures into the intermediate reasoning steps and constrains LLMs to think about how to solve requirements from the viewpoint of a programming language.
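The two-step flow described above (first an SCoT, then the code) could be sketched roughly as follows. This is only an illustration: the prompt wording, the demonstration requirement, the SCoT text, and the function names `build_scot_prompt` and `build_code_prompt` are all assumptions made for this note, not the prompts used in the paper.

```python
# Hypothetical demonstration pair; the exact SCoT format in the paper may differ.
EXAMPLE_REQUIREMENT = "Return the sum of all even numbers in a list."
EXAMPLE_SCOT = """\
Input: numbers: list[int]
Output: total: int
Initialize total to 0
for each number in numbers:
    if number is even:
        add number to total
return total"""


def build_scot_prompt(requirement: str) -> str:
    """Stage 1: ask the model for a structured CoT, given one demonstration."""
    return (
        "Write a structured chain of thought using sequence, branch, "
        "and loop structures.\n\n"
        f"Requirement: {EXAMPLE_REQUIREMENT}\n"
        f"SCoT:\n{EXAMPLE_SCOT}\n\n"
        f"Requirement: {requirement}\n"
        "SCoT:\n"
    )


def build_code_prompt(requirement: str, scot: str) -> str:
    """Stage 2: ask the model to implement code that follows the SCoT."""
    return (
        f"Requirement: {requirement}\n"
        f"SCoT:\n{scot}\n\n"
        "Implement the requirement in Python, following the SCoT above.\n"
    )
```

In use, the output of the model on the stage 1 prompt would be passed as `scot` to `build_code_prompt`; the model call itself is left out because it depends on the LLM client being used.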

Evaluation

The paper evaluates on three representative benchmarks: HumanEval [7], MBPP [2], and MBCPP [1]. Unit tests measure the correctness of the generated programs, and results are reported as Pass@𝑘 (𝑘 ∈ [1, 3, 5]) [7].

Pass@k

Specifically, given a requirement, a code generation model is allowed to generate 𝑘 programs. The requirement is solved if any generated program passes all test cases. Pass@𝑘 is the percentage of solved requirements among all requirements; a higher value is better.
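The definition above can be computed directly from per-program test outcomes. The sketch below follows only the plain definition given in this note; the function name `pass_at_k` and the input layout (one list of booleans per requirement) are assumptions for illustration, and the sample results are invented.

```python
def pass_at_k(results, k):
    """Percentage of requirements solved within the first k generated programs.

    results[i][j] is True if the j-th program generated for requirement i
    passes all of that requirement's test cases.
    """
    if not results:
        raise ValueError("need at least one requirement")
    # A requirement counts as solved if any of its first k programs passes.
    solved = sum(1 for programs in results if any(programs[:k]))
    return 100.0 * solved / len(results)


# Invented outcomes for 4 requirements with 3 generated programs each.
results = [
    [False, True, False],
    [False, False, False],
    [True, True, True],
    [False, False, True],
]
print(pass_at_k(results, 1))  # 25.0
print(pass_at_k(results, 3))  # 75.0
```

Pass@1 is the strictest setting, since only the first generated program for each requirement counts.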

Results

Summary

A CoT consists of several intermediate natural language reasoning steps. This paper proposes Structured CoT, which writes those intermediate steps with program structures (i.e., sequence, branch, and loop structures) so that they explicitly reveal the structure of the target program, which is helpful for code generation.

- in one sentence: more informative intermediate CoT steps help