[TOC]

- Title: Learning to Model the World With Language 2024

- Author: Jessy Lin et al.

- Publish Year: ICML 2024

- Review Date: Fri, Jun 21, 2024

- url: https://arxiv.org/abs/2308.01399

Summary of paper

Motivation

- in this work, we propose that agents can ground diverse kinds of language by using it to predict the future

- in contrast to directly predicting what to do with a language-conditioned policy, Dynalang decouples learning to model the world with language (supervised learning with prediction objectives) from learning to act given that model (RL with task rewards)

- Future prediction provides a rich grounding signal for learning what language utterances mean, which in turn equips the agent with a richer understanding of the world to solve complex tasks.
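To make the decoupling concrete, here is a toy sketch. Everything in it is hypothetical and far simpler than Dynalang: a 1-D state `s`, a scalar "language" feature `l`, a linear world model and a linear policy. Stage 1 fits the world model by supervised prediction of the next state, with no reward involved. Stage 2 freezes the model and improves the policy using rewards computed on imagined next states. Stage 2 swaps the paper's RL with task rewards for a one-step analytic gradient through the model, so treat it as an illustration of the split, not of the actual algorithm.

```python
import random

# Hypothetical toy environment (not from the paper): 1-D state s, scalar action a,
# and a language feature l in {-1, +1} standing in for an utterance about the dynamics.
def true_step(s, a, l):
    """Dynamics the agent never sees directly: s' = s + a + 0.5 * l."""
    return s + a + 0.5 * l


def collect_transitions(n, rng):
    """Log random-behaviour experience (s, a, l, s') without any reward."""
    data = []
    for _ in range(n):
        s, a, l = rng.uniform(-1, 1), rng.uniform(-1, 1), rng.choice([-1.0, 1.0])
        data.append((s, a, l, true_step(s, a, l)))
    return data


# Stage 1: world-model learning as supervised prediction.
# Linear model s'_hat = w0*s + w1*a + w2*l, fitted by SGD on squared error.
# The objective contains no reward and no policy.
def train_world_model(data, lr=0.05, epochs=200):
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for s, a, l, s_next in data:
            err = (w[0] * s + w[1] * a + w[2] * l) - s_next
            w[0] -= lr * err * s
            w[1] -= lr * err * a
            w[2] -= lr * err * l
    return w


# Stage 2: learning to act given the frozen model.
# Policy a = th0*(goal - s) + th1*l; reward -(s'_hat - goal)**2 is computed on the
# model's imagined next state, and theta follows its analytic gradient (ascent).
def train_policy(w, rng, lr=0.05, steps=2000):
    th = [0.0, 0.0]
    for _ in range(steps):
        s, goal, l = rng.uniform(-1, 1), rng.uniform(-1, 1), rng.choice([-1.0, 1.0])
        a = th[0] * (goal - s) + th[1] * l
        s_imag = w[0] * s + w[1] * a + w[2] * l  # imagined, not real, transition
        d_reward_da = -2.0 * (s_imag - goal) * w[1]
        th[0] += lr * d_reward_da * (goal - s)
        th[1] += lr * d_reward_da * l
    return th


rng = random.Random(0)
w = train_world_model(collect_transitions(200, rng))
theta = train_policy(w, rng)
print([round(x, 2) for x in w], [round(x, 2) for x in theta])
```

With this noiseless data the model should recover weights close to `[1, 1, 0.5]`. The nonzero weight on `l` means the language feature is used because it helps predict the future. The policy then settles near `theta = [1, -0.5]`, which cancels the drift that the language feature describes.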

Contribution

- investigate whether learning language-conditioned world models enables agents to scale to more diverse language use, compared to language-conditioned policies.

Some key terms

Related work

The authors discuss the line of work that conditions policies directly on language:

- Much work has focused on teaching reinforcement learning agents to utilize language to solve tasks by directly conditioning policies on language (Lynch & Sermanet, 2021; Shridhar et al., 2022; Abramson et al., 2020).

- Recent work proposes text-conditioning a video model trained on expert demonstrations and using the model for planning.

- However, language in these settings has thus far been limited to short instructions, and only a few works investigate how RL agents can learn from other kinds of language like descriptions of how the world works, in simple settings (Zhong et al., 2020; Hanjie et al., 2021).

In short, the authors survey the kinds of language RL agents have been given (short instructions vs. descriptions of how the world works) and how the agents use them.

They then note that supervised approaches rely on expensive human data (often with aligned language annotations and expert demonstrations), and that both of these approaches have limited ability to improve their behavior and language understanding online.

Finally, the authors explain how this work differs from prior work.

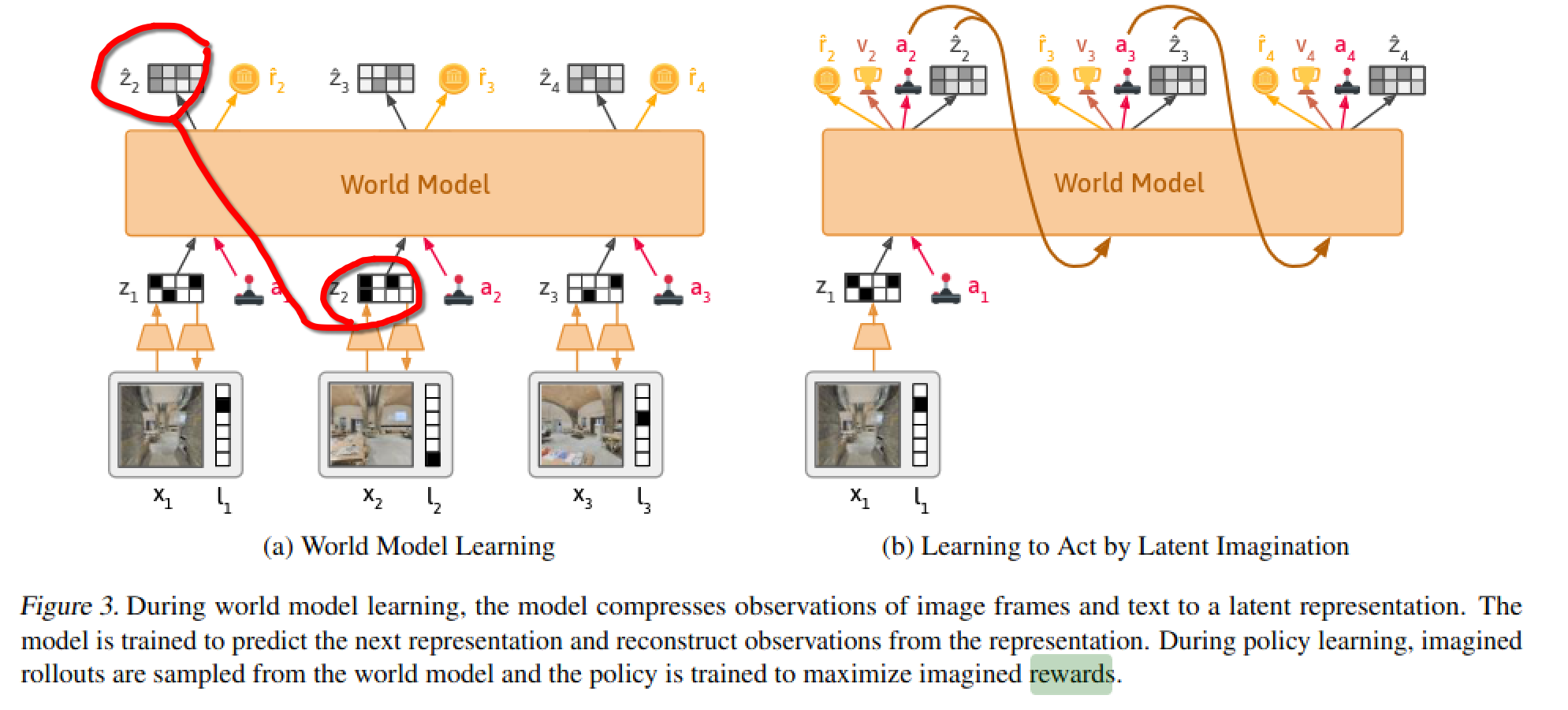

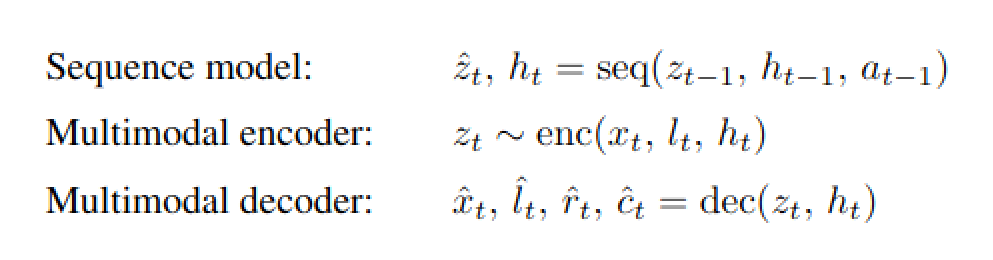

World model learning

The world model learns representations of all the sensory modalities the agent receives and then predicts the sequence of these latent representations given actions.

- Predicting future representations not only provides a rich learning signal to ground language in visual experience but also allows planning and policy optimization from imagined sequences.
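The latent-rollout idea can be sketched as follows. The sketch assumes a 2-D latent and hand-set functions (`encode`, `dynamics`, `reward_head`, a three-word vocabulary) that stand in for learned networks; none of these names come from the paper. The model encodes an image feature and a language token into one latent, predicts future latents from actions alone, and a brute-force planner scores imagined action sequences. Dynalang learns these components and optimizes a policy on imagined sequences rather than enumerating them, so the exhaustive planner is a swapped-in simplification.

```python
from itertools import product

# Hypothetical, hand-set "networks" standing in for learned components.
# Latent z = (agent position, goal direction taken from the text).
TOKEN_EMBED = {"goal": 0.0, "left": -1.0, "right": 1.0}  # toy vocabulary


def encode(image_feat, token):
    """Fuse one visual feature and one language token into a single latent state."""
    return (image_feat, TOKEN_EMBED[token])


def dynamics(z, action):
    """Predict the next latent from the current latent and action (no new observation)."""
    pos, goal_dir = z
    return (pos + 0.5 * action, goal_dir)


def reward_head(z):
    """Hypothetical reward predictor: higher when position matches the described goal."""
    pos, goal_dir = z
    return -abs(pos - goal_dir)


def imagine(z0, actions):
    """Roll the model forward on a sequence of actions, returning imagined latents."""
    traj, z = [], z0
    for a in actions:
        z = dynamics(z, a)
        traj.append(z)
    return traj


def plan(z0, horizon=3, action_set=(-1, 0, 1)):
    """Exhaustive planning: pick the action sequence with the highest imagined return."""
    best = max(
        product(action_set, repeat=horizon),
        key=lambda acts: sum(reward_head(z) for z in imagine(z0, acts)),
    )
    return list(best)


z0 = encode(0.0, "right")  # agent at 0, text says the goal lies to the right
print(plan(z0))
```

Here `plan(encode(0.0, "right"))` should return `[1, 1, 0]`: two steps right reach the described goal, then the agent stays. Changing the token to `"left"` flips the plan to `[-1, -1, 0]`. The utterance changes what the model imagines will be rewarding, even though the visual input is identical.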

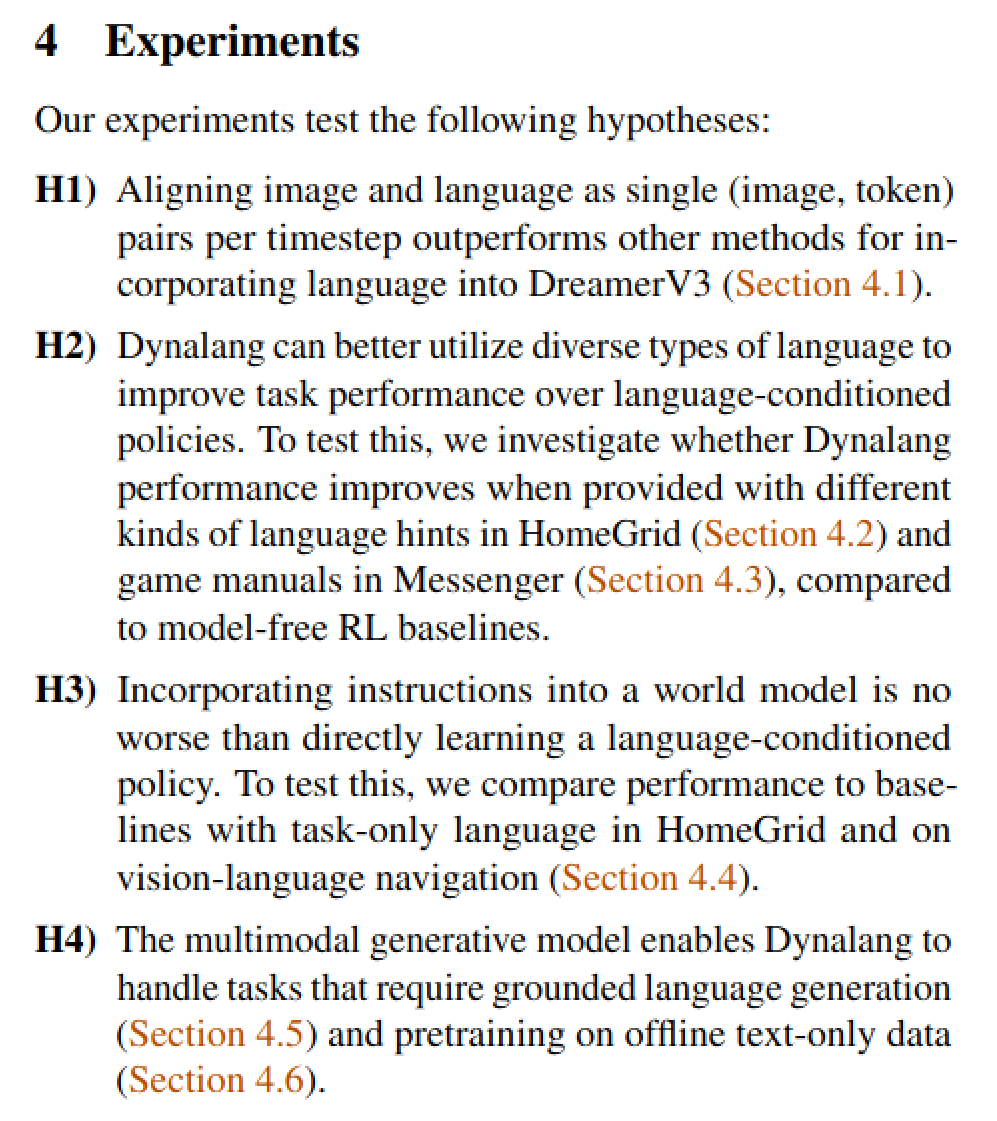

Experiments

The explicit hypothesis statement in this section helps the reader know what the experiments are testing; very helpful.