[TOC]

- Title: Reinforcement Learning With Stochastic Reward Machines

- Author: Jan Corazza et al.

- Publish Year: AAAI 2022

- Review Date: Sat, Dec 24, 2022

Summary of paper

Motivation

- Reward machines are an established tool for reinforcement learning problems in which rewards are sparse and depend on complex sequences of actions. However, existing algorithms for learning reward machines assume an overly idealized setting in which rewards are free of noise.

- To overcome this practical limitation, the authors introduce a novel type of reward machine, called a stochastic reward machine, together with an algorithm for learning it.

Contribution

- Discusses how to handle noisy rewards for non-Markovian reward functions.

- Limitations:

  - The solution introduces multiple sub value function models, which departs from standard RL algorithms.

  - The work does not emphasise the sample efficiency of the algorithm.
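Assuming the "sub value function models" mean one Q-function per reward-machine state, in the style of Q-learning for reward machines (QRM, Toro Icarte et al. 2018), the idea can be sketched as below. Each environment step is replayed counterfactually for every machine state `u`, so all Q-tables learn from the same experience. This is a hypothetical toy, not the paper's code: the machine, the labels `"a"`/`"b"`, and the hyperparameters are illustrative assumptions.

```python
from collections import defaultdict

# Hypothetical two-step task: label "a" then label "b" yields reward 1.
DELTA_U = {("u0", "a"): "u1", ("u1", "b"): "u2"}
DELTA_R = {("u1", "b"): 1.0}
RM_STATES = ["u0", "u1", "u2"]
TERMINAL = {"u2"}
ACTIONS = [0, 1]


def rm_step(u, label):
    """Return (next machine state, reward); unlisted transitions self-loop with reward 0."""
    return DELTA_U.get((u, label), u), DELTA_R.get((u, label), 0.0)


# One Q-table per machine state: q[u][(s, a)] -> estimated value.
q = {u: defaultdict(float) for u in RM_STATES}


def qrm_update(s, a, s_next, label, alpha=0.5, gamma=0.9):
    """Counterfactual update: reuse one environment step (s, a, s_next, label) for every u."""
    for u in RM_STATES:
        if u in TERMINAL:
            continue
        u_next, r = rm_step(u, label)
        if u_next in TERMINAL:
            target = r  # episode ends for this machine state
        else:
            target = r + gamma * max(q[u_next][(s_next, b)] for b in ACTIONS)
        q[u][(s, a)] += alpha * (target - q[u][(s, a)])
```

For example, `qrm_update(0, 1, 1, "b")` raises only `q["u1"][(0, 1)]` (to 0.5), because only machine state `u1` earns a reward on label `"b"`; the other tables receive a zero-reward update from the same step.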

Some key terms

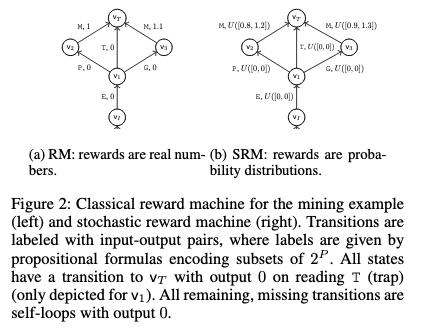

Reward machine

- Intuitively, the role of a reward machine (RM) is to maintain just enough memory to turn a non-Markovian reward function back into an ordinary, Markovian one. This gives RMs an important feature: they enable the use of standard RL algorithms, which would otherwise not be usable with non-Markovian reward functions.
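To make the memory idea concrete, here is a minimal sketch of a deterministic reward machine for a hypothetical "get coffee, then go to the office" task. The labels `"coffee"` and `"office"`, the state names, and the `RewardMachine` class are illustrative assumptions, not taken from the paper. The reward returned by `step` depends only on the current machine state `u` and the current label, so the pair (environment state, `u`) is Markovian even though the reward depends on the history of labels.

```python
from dataclasses import dataclass, field


@dataclass
class RewardMachine:
    """Hypothetical deterministic reward machine.

    Transitions not listed keep the machine in its current state with reward 0.
    """
    initial: str
    # (machine state, label) -> next machine state
    delta_u: dict = field(default_factory=dict)
    # (machine state, label) -> scalar reward emitted on that transition
    delta_r: dict = field(default_factory=dict)

    def step(self, u: str, label: str) -> tuple[str, float]:
        """Advance the machine on one label; return (next state, reward)."""
        next_u = self.delta_u.get((u, label), u)
        reward = self.delta_r.get((u, label), 0.0)
        return next_u, reward


# Hypothetical task: reward 1 for reaching the office only after visiting the coffee machine.
rm = RewardMachine(
    initial="u0",
    delta_u={("u0", "coffee"): "u1", ("u1", "office"): "u2"},
    delta_r={("u1", "office"): 1.0},
)


def run(labels: list[str]) -> list[float]:
    """Feed a label sequence through the machine and collect the rewards."""
    u, rewards = rm.initial, []
    for label in labels:
        u, r = rm.step(u, label)
        rewards.append(r)
    return rewards


# The same label "office" earns different rewards depending on history,
# but once the machine state u is known, the reward is fully determined.
print(run(["office"]))            # [0.0]
print(run(["coffee", "office"]))  # [0.0, 1.0]
```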

Stochastic reward machines

Good things about the paper

Major comments

Citation

- The most natural conceptualisation of the state space is the one in which the reward function depends on the history of actions the agent has performed (typically tasks in which the agent is rewarded for complex behaviours over a longer period).

- ref: Corazza, Jan, Ivan Gavran, and Daniel Neider. “Reinforcement Learning with Stochastic Reward Machines.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 36. No. 6. 2022.

- An emerging tool for reinforcement learning in environments with such non-Markovian rewards is the reward machine. A reward machine is an automaton-like structure that augments the state space of the environment, capturing the temporal component of rewards. It has been demonstrated that Q-learning can be adapted to benefit from reward machines.

- ref: Corazza, Jan, Ivan Gavran, and Daniel Neider. “Reinforcement Learning with Stochastic Reward Machines.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 36. No. 6. 2022.

- ref: Toro Icarte, R.; Klassen, T. Q.; Valenzano, R. A.; and McIlraith, S. A. 2018. Using Reward Machines for High-Level Task Specification and Decomposition in Reinforcement Learning. In ICML, volume 80 of Proceedings of Machine Learning Research, 2112–2121. PMLR.

- When the machine is not known upfront, existing learning methods prove counterproductive in the presence of noisy rewards: either no reward machine is consistent with the agent's experience, or the learned reward machine explodes in size, overfitting the noise.

- ref: Corazza, Jan, Ivan Gavran, and Daniel Neider. “Reinforcement Learning with Stochastic Reward Machines.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 36. No. 6. 2022.
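The consistency problem described in the last point can be illustrated with a small check. This is a hypothetical sketch, not the paper's learning algorithm. A deterministic reward machine assigns exactly one reward to the last transition of each label-sequence prefix, so two traces that share a prefix but report different rewards for it cannot both be explained by any deterministic machine, and with noisy rewards this happens almost immediately. The `tolerance` parameter is an illustrative assumption showing how accepting rewards within a band removes the contradiction; it is not the paper's exact noise model.

```python
def find_conflicts(traces, tolerance=0.0):
    """Return label prefixes whose observed rewards differ by more than `tolerance`.

    Each trace is a list of (label, reward) pairs. A deterministic reward machine
    fixes one reward per label prefix, so any conflict means no such machine fits.
    """
    seen = {}  # label prefix (tuple) -> (min reward, max reward) observed so far
    conflicts = set()
    for trace in traces:
        prefix = ()
        for label, reward in trace:
            prefix = prefix + (label,)
            lo, hi = seen.get(prefix, (reward, reward))
            lo, hi = min(lo, reward), max(hi, reward)
            seen[prefix] = (lo, hi)
            if hi - lo > tolerance:
                conflicts.add(prefix)
    return sorted(conflicts)


# Two hypothetical noisy episodes of the same task: the final reward should be 1.0
# but is perturbed by noise.
traces = [
    [("coffee", 0.0), ("office", 1.02)],
    [("coffee", 0.0), ("office", 0.97)],
]
print(find_conflicts(traces))                 # [('coffee', 'office')]
print(find_conflicts(traces, tolerance=0.1))  # []
```

With exact matching, the only way a learner could "explain" both traces would be to distinguish them by something other than their labels, which is one route to the size explosion mentioned above.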