[TOC]

- Title: The Effect of Modeling Human Rationality Level on Learning Rewards from Multiple Feedback Types

- Author: Gaurav R. Ghosal et. al.

- Publish Year: 9 Mar 2023 AAAI 2023

- Review Date: Fri, May 10, 2024

- url: arXiv:2208.10687v2

Summary of paper

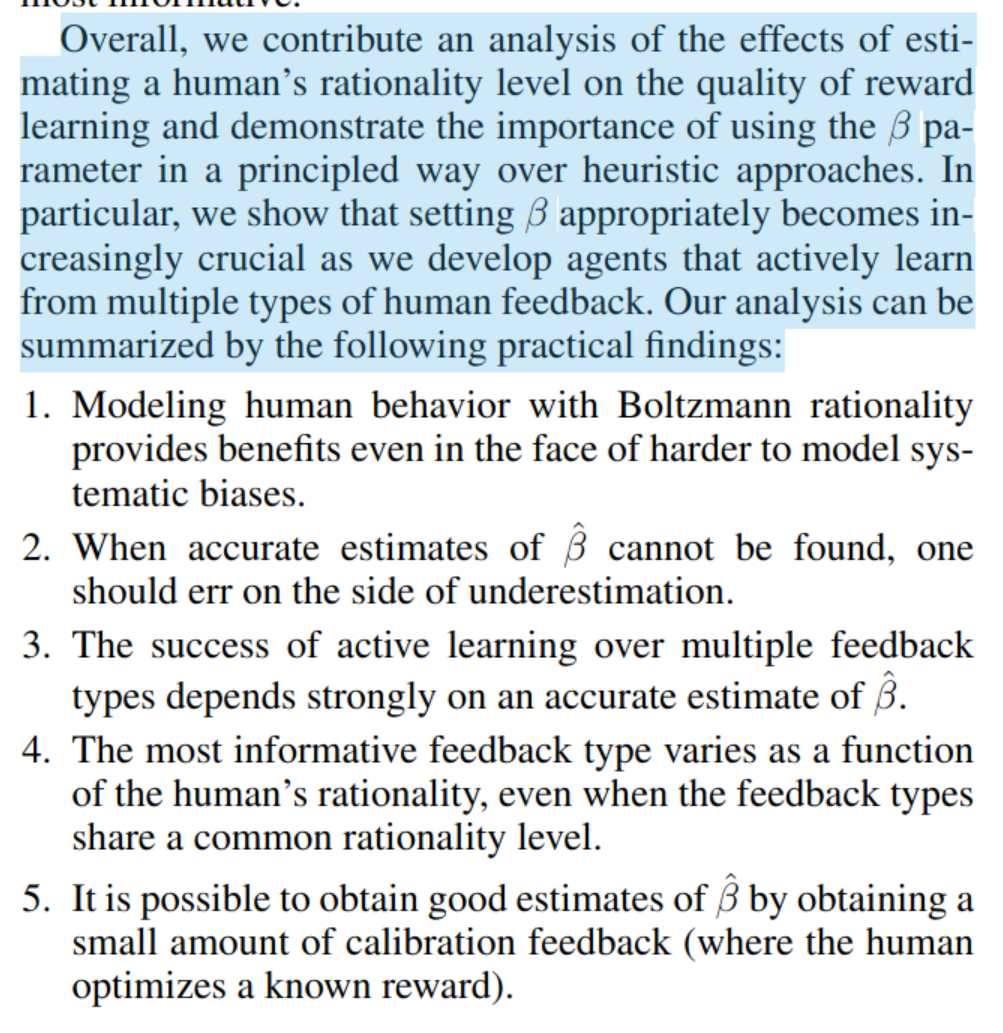

Contribution

- We find that overestimating human rationality can have dire effects on reward learning accuracy and regret

- We also find that fitting the rationality coefficient to human data enables better reward learning, even when the human deviates significantly from the noisy-rational choice model due to systematic biases

Some key terms

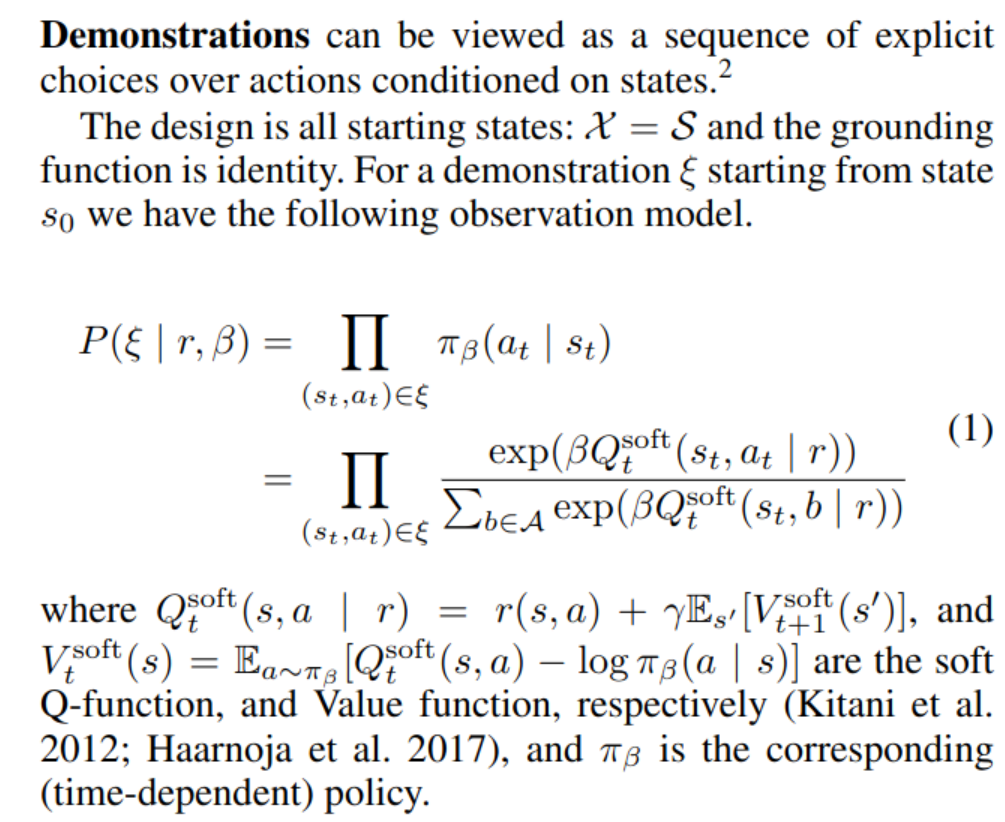

What is Boltzmann Rationality coefficient $\beta$

Apply this Boltzmann rationality coefficient into PPO

|

|

- essentially when the beta is low, the policy will have more exploration

Results

1. remark: underestimating $\beta$ is better than over estimating it

the author provided proof (proposition 1 and proposition 3)

Potential future work

What it did is straightforward, if you do not trust the reward signal, you let the policy to explore more.