[TOC]

- Title: Language in a (Search) Box: Grounding Language Learning in Real-World Human-Machine Interaction

- Author: Federico Bianchi

- Publish Year: 2021

- Review Date: Jan 2022

Summary of paper

The author investigates grounded language learning through the natural interaction between users and the search engine of a shopping website.

How they do it

- Convert the shopping product dataset into a latent grounded domain.

  - Related products end up closer together in the embedding space.
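To make "related products end up closer" concrete, here is a minimal sketch. It assumes product embeddings are derived from co-occurrence in browsing sessions via a truncated SVD; this is a simple stand-in and not necessarily the embedding method used in the paper. The session data and product names are hypothetical.

```python
import numpy as np

# Hypothetical browsing sessions (toy data, not from the paper).
sessions = [
    ["nike_shoe_a", "nike_shoe_b", "nike_sock"],
    ["nike_shoe_a", "nike_shoe_b"],
    ["adidas_shirt", "adidas_short"],
    ["adidas_shirt", "adidas_short", "adidas_cap"],
]

products = sorted({p for s in sessions for p in s})
index = {p: i for i, p in enumerate(products)}

# Session-by-product incidence matrix: X[s, p] = 1 if product p appears in session s.
X = np.zeros((len(sessions), len(products)))
for row, session in enumerate(sessions):
    for p in session:
        X[row, index[p]] = 1.0

# Truncated SVD: keep the top-k right singular directions as product embeddings.
k = 2
_, S, Vt = np.linalg.svd(X, full_matrices=False)
embeddings = Vt[:k].T * S[:k]  # shape: (num_products, k)


def cosine(a, b):
    """Cosine similarity between the embeddings of two products."""
    va, vb = embeddings[index[a]], embeddings[index[b]]
    return float(va @ vb / (np.linalg.norm(va) * np.linalg.norm(vb)))


same_brand = cosine("nike_shoe_a", "nike_shoe_b")
cross_brand = cosine("nike_shoe_a", "adidas_shirt")
print(same_brand > cross_brand)
```

Products that are browsed together get similar vectors, so the two Nike shoes score a higher cosine similarity than a Nike shoe and an Adidas shirt.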

-

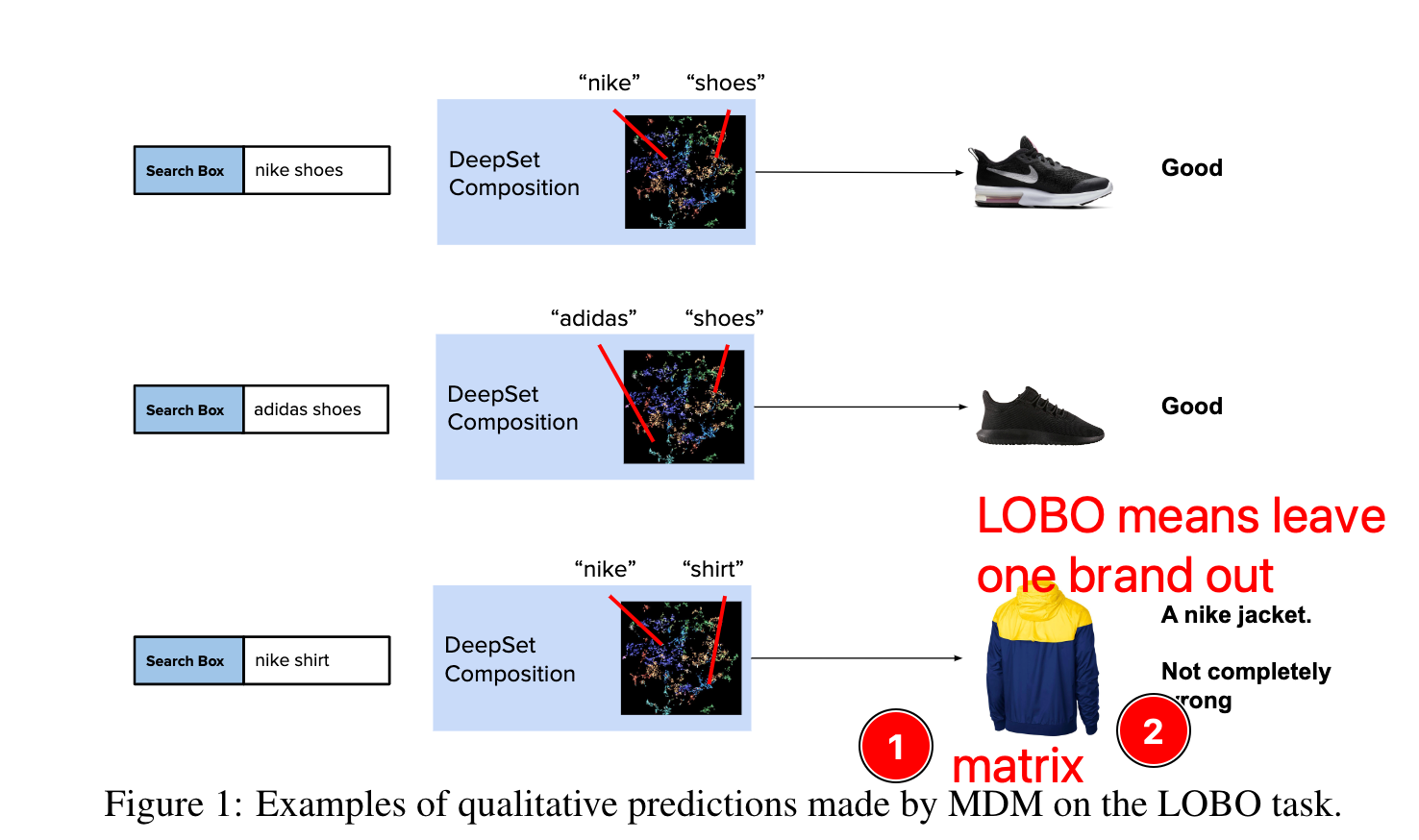

train the mapping model (mapping from text query to a portion of product space) based on the user click behaviour (In the training dataset, the users queries about “Nike” and the they would click relevant Nike Product)

- This approach can be seen as a neural generalisation of model-theoretic semantics, where the ex-tension of “shoes” is not a discrete set of objects, but a region in the grounding space.
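The idea of a query denoting a region of product space can be sketched as follows. This is a simplified illustration, not the paper's trained model: it assumes the region is a ball around the mean of the clicked products' embeddings, where mean pooling stands in for a learned permutation-invariant encoder. All vectors, names, and the click log are hypothetical.

```python
import numpy as np

# Hypothetical 2-d product embeddings (toy values, not from the paper).
product_vecs = {
    "nike_shoe": np.array([1.0, 0.8]),
    "nike_sock": np.array([0.8, 1.0]),
    "adidas_shirt": np.array([-1.0, 0.9]),
    "adidas_cap": np.array([-0.9, 1.1]),
}

# Hypothetical click log: query -> products clicked after issuing it.
click_log = {
    "nike": ["nike_shoe", "nike_sock"],
    "adidas": ["adidas_shirt", "adidas_cap"],
}


def query_region(query):
    """Return (centre, radius) of the region that a query denotes."""
    clicked = np.stack([product_vecs[p] for p in click_log[query]])
    centre = clicked.mean(axis=0)  # order of clicks does not matter
    radius = np.linalg.norm(clicked - centre, axis=1).max()
    return centre, radius


def in_extension(product, query):
    """True if the product's embedding falls inside the query's region."""
    centre, radius = query_region(query)
    distance = np.linalg.norm(product_vecs[product] - centre)
    return bool(distance <= radius + 1e-9)


print(in_extension("nike_shoe", "nike"))     # inside the "nike" region
print(in_extension("adidas_shirt", "nike"))  # outside the "nike" region
```

Membership in the extension becomes a geometric test rather than a lookup in a discrete set, which is the sense in which the region generalises a set-based denotation.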

- Train the functional composition model.

  - The compositional model appears to be designed for zero-shot learning problems.

  - The model takes two DeepSet embeddings as input and outputs another DeepSet embedding.

  - E.g., “Nike” + “Shoes” = “Nike Shoes”.
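A composition function of this shape (two embeddings in, one embedding out) can be sketched with a linear map fitted by least squares. This is an assumption for illustration only: the paper's actual composition model may differ, and the embeddings and targets below are synthetic, with the target deliberately chosen to be linear so that the toy fit generalises to a held-out pair.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 2

# Hypothetical embeddings for single-word queries (random toy values).
brands = {b: rng.normal(size=dim) for b in ["nike", "adidas", "puma"]}
categories = {c: rng.normal(size=dim) for c in ["shoes", "shirts", "caps"]}


def target(brand, category):
    # Synthetic stand-in for the observed embedding of the composed query.
    return 0.5 * (brands[brand] + categories[category])


held_out = ("puma", "caps")  # this combination is never seen during fitting
train_pairs = [(b, c) for b in brands for c in categories if (b, c) != held_out]

X = np.stack([np.concatenate([brands[b], categories[c]]) for b, c in train_pairs])
Y = np.stack([target(b, c) for b, c in train_pairs])

# Fit a linear composition function: [brand; category] @ W ~= composed embedding.
W, *_ = np.linalg.lstsq(X, Y, rcond=None)


def compose(brand_vec, category_vec):
    """Map two query embeddings to the embedding of their combination."""
    return np.concatenate([brand_vec, category_vec]) @ W


predicted = compose(brands["puma"], categories["caps"])
error = float(np.linalg.norm(predicted - target(*held_out)))
print(error < 1e-6)
```

The held-out pair plays the role of a zero-shot query: "puma caps" never appears in the fitting data, yet its embedding is recovered from the embeddings of its parts.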

Example

The contributions are the following

- Converting the data into a grounded latent domain allows for better generalisation performance.

Comments about the paper (one paragraph)

The user behaviour dataset is very valuable, but it is no longer open-sourced.