[TOC]

- Title: Towards Tracing Factual Knowledge in Language Models Back to the Training Data

- Author: Ekin Akyurek et. al.

- Publish Year: EMNLP 2022

- Review Date: Wed, Feb 8, 2023

- url: https://aclanthology.org/2022.findings-emnlp.180.pdf

Summary of paper

Motivation

- LMs have been shown to memorize a great deal of factual knowledge contained in their training data. But when an LM generates an assertion, it is often difficult to determine where it learned this information and whether it is true.

Contribution

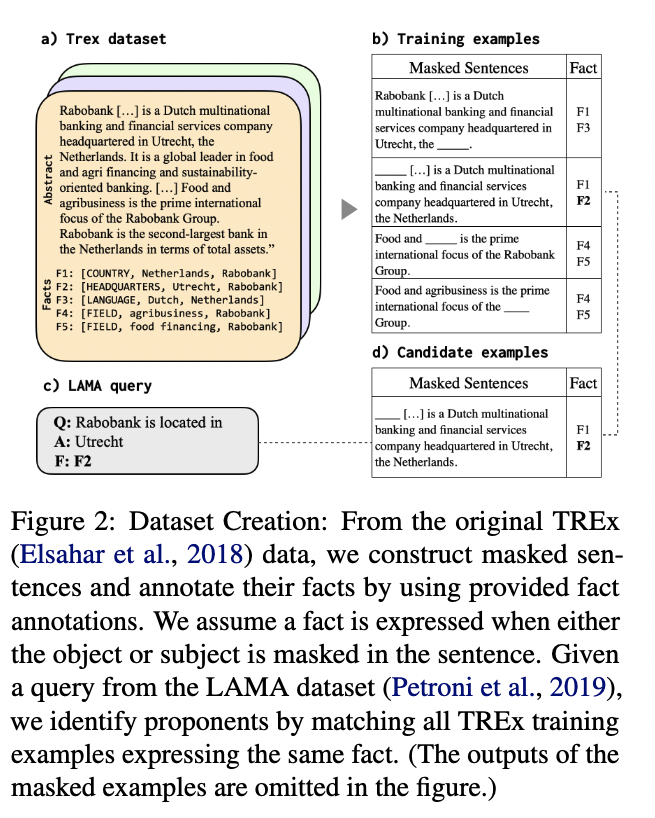

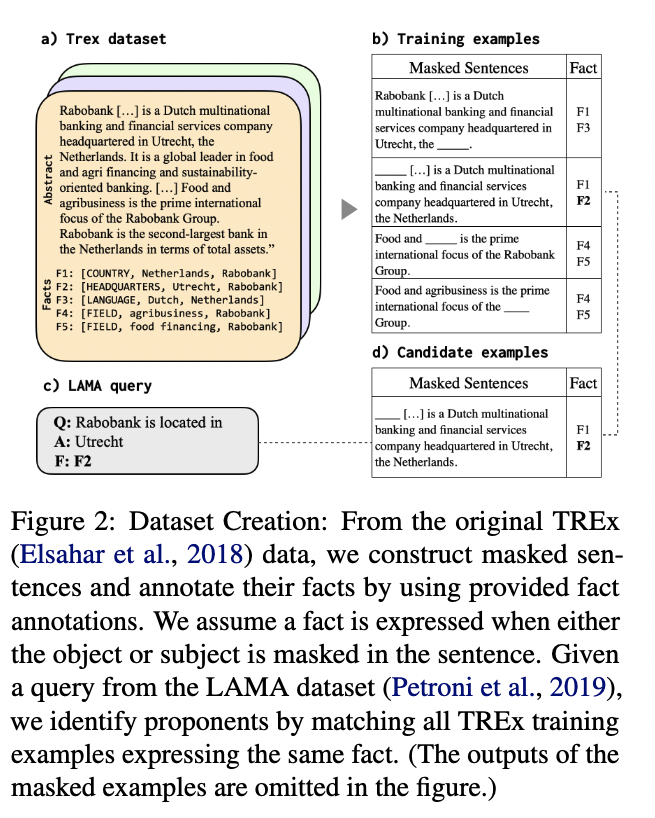

- we propose the problem of fact tracing

- identifying which training examples taught an LM to generate a particular factual assertion.

- prior work on training data distribution (TDA) may offer effective tools for identifying such examples, known as “proponent”. We present the first quantitative benchmark to evaluate this

- we compare two popular families of TDA methods

- gradient based

- embedding based

Some key terms

Training data distribution method (TDA)

- are the main literature concerned with linking predictions back to specific training examples (known as proponents)

- several obstacles have limited research on fact tracing for large, pre-trained LMs. First, since pre-training corpora are very large, it has not been clear how to obtain ground truth labels regarding which pre-training example was truly responsible for an LM’s prediction. Second, TDA methods have traditionally been computationally prohibitive.

Obtaining Ground Truth Proponents

- propose a recipe, which we call “novel fact injection”

- first suppose that we can identify a set of “facts” that the pre-trained LM does not know – we call these “novel facts”

- we can convert each novel fact into LM training example, and then fine tune the LM on these extra examples until it memorizes novel fact (i.e., “injecting” them into the LM)

- now we have ground-truth proponents for every novel fact.

Mitigating computational cost

- we propose a simple reranking setup that is commonly used in information retrieval experiments

- rather than running a TDA method over all training examples, we run it only over a small subset of candidate examples that is guaranteed to include the ground truth proponents as well as some distractor examples that are not true proponents.

- this is handled by manual selection