[TOC]

- Title: Fire Burns, Sword Cuts: Commonsense Inductive Bias for Exploration in Text Based Games

- Author: Dongwon Kelvin Ryu et al.

- Publish Year: ACL 2022

- Review Date: Thu, Sep 22, 2022

Summary of paper

Motivation

- Text-based games (TGs) are exciting testbeds for developing deep reinforcement learning techniques due to their partially observed environments and large action space.

- A fundamental challenge in TGs is exploring the large action space efficiently when the agent has not yet acquired enough knowledge about the environment.

- So, we want to inject external commonsense knowledge into the agent during training when the agent is most uncertain about its next action.

Contribution

- In addition to the performance increase, the produced action trajectories exhibit lower perplexity when scored with a pre-trained LM, indicating that they are closer to natural human language.
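As a reminder of what the perplexity measurement means, here is a minimal sketch of computing perplexity from per-token log-probabilities. The function name `perplexity` and the idea of passing in pre-computed log-probabilities are assumptions for illustration; the paper's exact evaluation setup (which LM, how trajectories are tokenized) is not covered in these notes.

```python
import math


def perplexity(token_log_probs):
    """Perplexity of a token sequence from its natural-log probabilities.

    `token_log_probs` is a hypothetical input: one log-probability per token,
    as a pre-trained LM would assign to an action trajectory.
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    # Average negative log-likelihood per token, then exponentiate.
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)


# A trajectory whose tokens the LM finds likely scores lower perplexity
# than one whose tokens it finds unlikely.
likely = [math.log(0.5)] * 4
unlikely = [math.log(0.1)] * 4
print(perplexity(likely), perplexity(unlikely))
```

Lower values mean the LM finds the text less surprising, which is why the notes read lower perplexity as closer to human language.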

Some key terms

Exploration efficiency

- Existing RL agents are still far from solving TGs because the combinatorially large action spaces hinder efficient exploration.

Prior work on using commonsense knowledge graph (CSKG)

- Prior work with commonsense focused on completing the belief knowledge graph (BKG), using either a pre-defined CSKG or commonsense inferences generated dynamically by a commonsense transformer LM.

- Nonetheless, there is no work that explicitly uses commonsense as an inductive bias for exploration in TGs.

Methodology

Overview

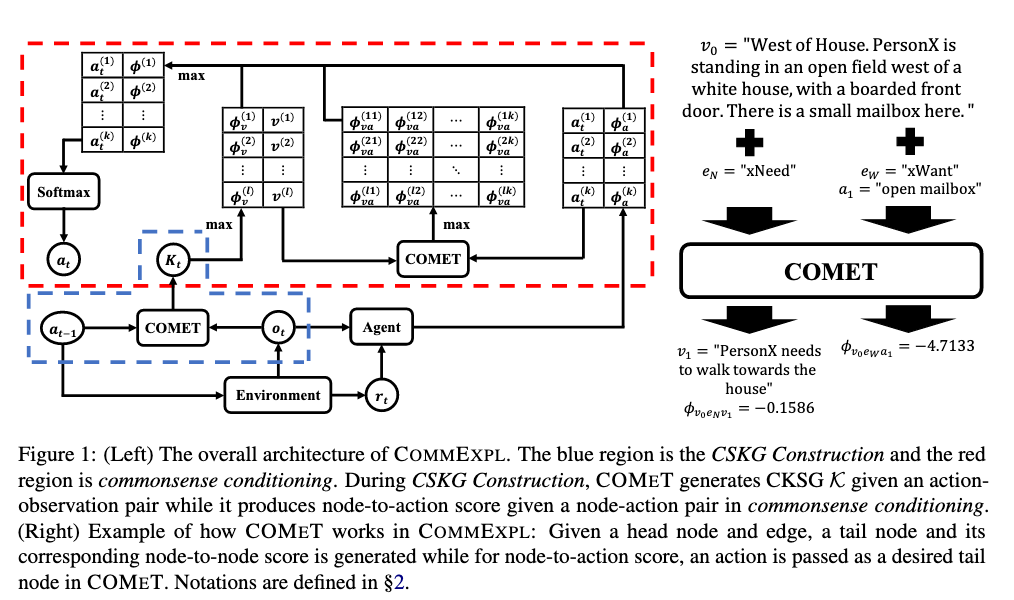

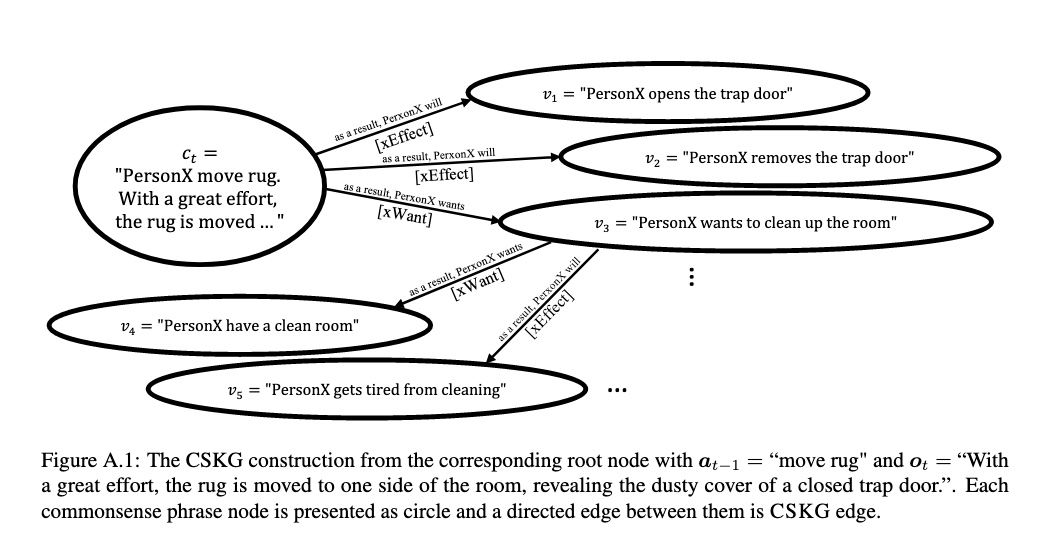

- They propose commonsense exploration (COMMEXPL), which uses COMET to construct a CSKG dynamically from the observation at each step.

- The natural language actions are then scored by both COMET and the agent, and these scores are used to re-rank the policy distribution.

- They refer to this as applying commonsense conditioning.
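The re-ranking idea above can be sketched roughly as follows. This is not the paper's implementation: the entropy-based uncertainty test, the linear mixing rule, and the names `rerank`, `entropy_threshold`, and `weight` are all assumptions made for illustration, and the commonsense scores are plain numbers standing in for whatever COMET would produce.

```python
import math


def softmax(scores):
    """Turn arbitrary real-valued scores into a probability distribution."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def rerank(policy_probs, commonsense_scores, entropy_threshold=1.0, weight=0.5):
    """Hypothetical commonsense conditioning step.

    If the agent's policy is uncertain (high entropy, an assumed criterion),
    mix it with a distribution derived from the commonsense scores;
    otherwise return the policy unchanged.
    """
    if entropy(policy_probs) < entropy_threshold:
        return list(policy_probs)
    cs_probs = softmax(commonsense_scores)
    mixed = [(1 - weight) * p + weight * c
             for p, c in zip(policy_probs, cs_probs)]
    total = sum(mixed)
    return [m / total for m in mixed]


# Uncertain agent: uniform policy over three candidate actions.
actions = ["cut rope with sword", "eat sword", "burn sword"]
uniform = [1 / 3, 1 / 3, 1 / 3]
scores = [2.0, 0.0, 0.0]  # made-up commonsense scores favouring the first action
print(dict(zip(actions, rerank(uniform, scores))))
```

With a confident policy the function leaves the distribution alone, which mirrors the notes' point that commonsense is injected when the agent is most uncertain about its next action.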

Incomprehension

The authors assume that readers already know what a CSKG (commonsense knowledge graph) and the COMET model are, which was not the case for me.