[TOC]

- Title: Understanding Natural Language in Context

- Author: Avichai Levy et al.

- Publish Year: ICAPS 2023

- Review Date: Mon, Jan 29, 2024

- url: https://ojs.aaai.org/index.php/ICAPS/article/view/27248

Summary of paper

Contribution

The paper discusses the increasing prevalence of applications with natural language interfaces, such as chatbots and personal assistants like Alexa, Google Assistant, Siri, and Cortana. Current dialogue systems are mostly static assistants; the challenge grows with cognitive robots that can move around and manipulate objects in home environments. The focus is on cognitive robots equipped with knowledge-based models of the world, which enable reasoning and planning. The paper proposes an approach to translate natural language directives into the robot’s formalism, leveraging state-of-the-art large language models, planning tools, and the robot’s knowledge of the world and its own model. This approach improves the interpretation of natural language directives and helps the robot complete complex household tasks.

Some key terms

limitation of previous work

However, these assistants are very limited in both their understanding of the world around them, and in the actions they can perform. Importantly, these assistants are static – they do not move around the house, and they can not physically manipulate the world around them.

Assumption

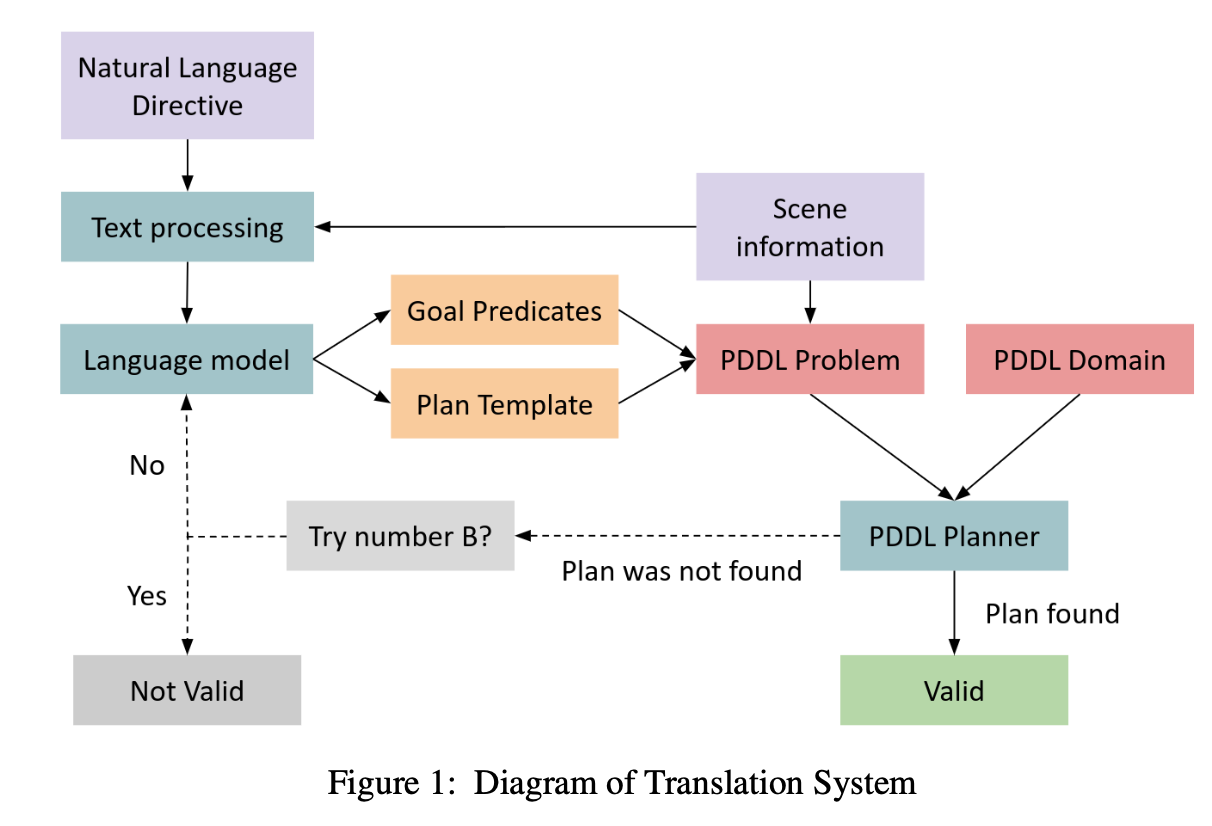

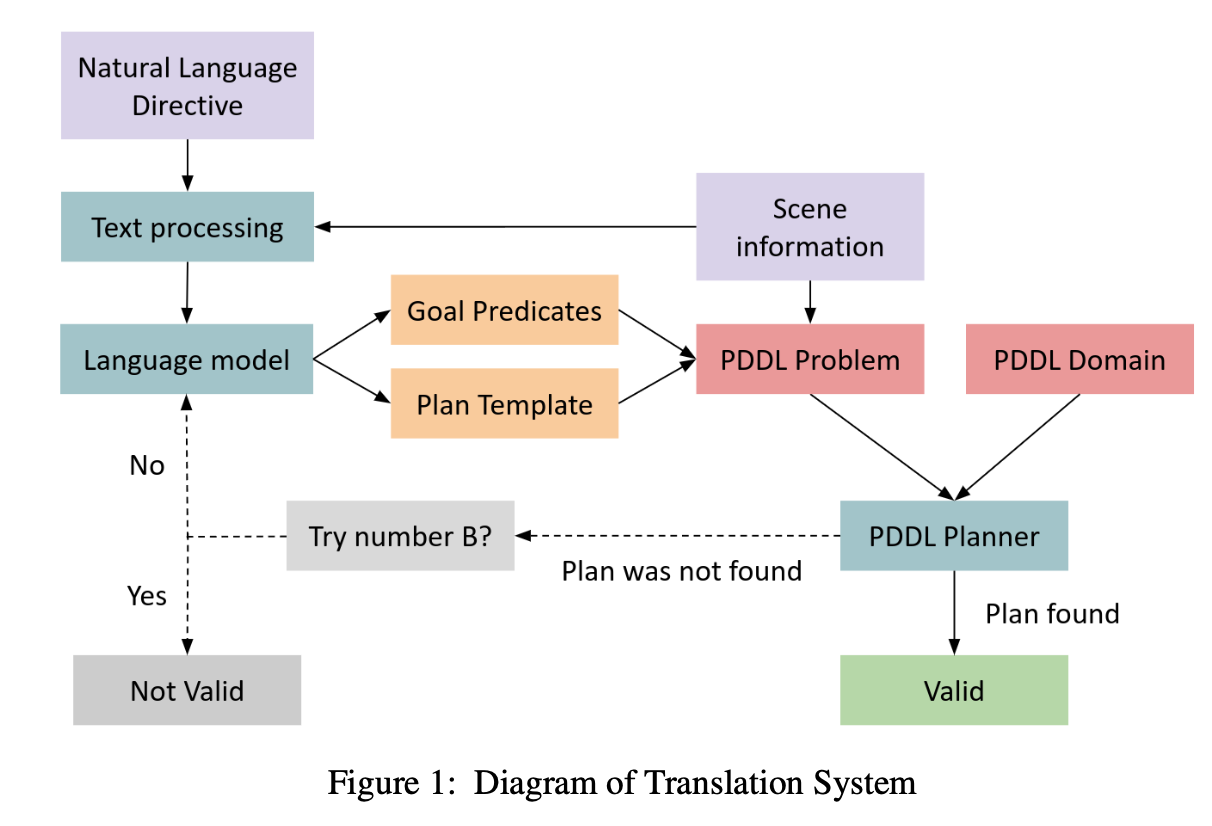

We now describe our system, which is illustrated in Figure 1, in more detail. First, we assume that we already have a cognitive robot, with a domain theory that describes the types of objects in the world, the possible relations between them, and the possible actions that the robot can execute – that is, a PDDL domain. We also assume that our robot is clairvoyant, and has complete and perfect information about the state of the world, including which objects exist in the world and the relations between them.
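To make the assumption concrete, here is a minimal sketch of what a fully observed, PDDL-style state could look like in Python. It is only an illustration for these notes, not the paper's implementation: the `WorldState` class, the `Atom` alias, and all object and predicate names (`tomato1`, `fridge1`, `in`, `on`, `at`) are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical representation: a ground atom is a predicate name followed
# by its object arguments, e.g. ("in", "tomato1", "fridge1").
Atom = Tuple[str, ...]


@dataclass
class WorldState:
    """Simplified stand-in for a complete, perfectly known world state."""

    objects: Dict[str, str] = field(default_factory=dict)  # object name -> type
    facts: Set[Atom] = field(default_factory=set)          # atoms that are true

    def holds(self, atom: Atom) -> bool:
        # Closed-world reading: anything not listed in facts is false.
        return atom in self.facts

    def satisfies(self, goal: List[Atom]) -> bool:
        # A conjunctive goal is satisfied when every atom in it holds.
        return all(self.holds(atom) for atom in goal)


# Illustrative kitchen state (all names are made up for this sketch).
state = WorldState(
    objects={"tomato1": "tomato", "fridge1": "fridge", "counter1": "counter"},
    facts={("at", "robot", "kitchen"), ("on", "tomato1", "counter1")},
)
goal = [("in", "tomato1", "fridge1")]
```

Under this reading, "complete and perfect information" simply means that `facts` lists every true relation, so a goal can be checked directly against the state.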

Translation system

The translation system receives input from two sources:

- Directive: Provided by a human in natural language, this instructs the robot to perform a specific task. Directives can range from high-level task descriptions (e.g., “put a slice of tomato in the fridge”) to more detailed instructions (e.g., specifying actions like going to the kitchen, picking up a knife, slicing a tomato, etc.).

- State: The robot possesses knowledge of the world’s current state, including existing objects and their relationships. This information is integral for understanding the context in which the directive is given.

By combining these modalities, the system enables the robot to interpret natural language directives and execute tasks accurately within its environment.
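One way to picture how the two inputs might be combined is sketched below. This is an assumption made for these notes rather than the pipeline described in the paper: `build_prompt` and `parse_goal` are hypothetical helpers, the prompt layout is invented, and no real language model is called. The sketch only shows the directive and the state being serialized together, and a returned goal being checked against the objects the robot knows about.

```python
from typing import Dict, List, Set, Tuple

Atom = Tuple[str, ...]  # hypothetical: predicate name followed by arguments


def build_prompt(directive: str, objects: Dict[str, str], facts: Set[Atom]) -> str:
    """Serialize the state and the directive into one text prompt."""
    object_lines = [f"{name} - {typ}" for name, typ in sorted(objects.items())]
    fact_lines = ["(" + " ".join(atom) + ")" for atom in sorted(facts)]
    return "\n".join(
        ["Objects:", *object_lines, "Facts:", *fact_lines,
         "Directive: " + directive, "Goal:"]
    )


def parse_goal(text: str, objects: Dict[str, str]) -> List[Atom]:
    """Parse a flat list like '(in tomato1 fridge1)' into atoms.

    Nested expressions are not supported in this sketch. Atoms whose
    arguments are not known objects are rejected, which is one simple way
    the state can constrain what the model is allowed to output.
    """
    atoms: List[Atom] = []
    for chunk in text.replace(")", "(").split("("):
        parts = tuple(chunk.split())
        if not parts:
            continue
        unknown = [arg for arg in parts[1:] if arg not in objects]
        if unknown:
            raise ValueError(f"unknown objects in goal: {unknown}")
        atoms.append(parts)
    return atoms


objects = {"tomato1": "tomato", "fridge1": "fridge"}
facts = {("closed", "fridge1")}
prompt = build_prompt("put a slice of tomato in the fridge", objects, facts)
```

In practice the text after `Goal:` would come from a language model; here `parse_goal` can be exercised with a hand-written string such as `"(in tomato1 fridge1)"`.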

Results

The system integrates state-of-the-art pretrained large language models and achieves notable performance metrics on the ALFRED dataset:

- Task Translation: The system achieves an 85% accuracy rate in translating tasks expressed in natural language into specific goals for the robot to accomplish.

- Plan Execution: It attains a 57% accuracy rate in following plans expressed in natural language on the ALFRED dataset.

These results suggest the system is effective at understanding natural language instructions, with goal translation (85%) proving more reliable than plan following (57%).