[TOC]

- Title: Robots That Ask for Help: Uncertainty Alignment for Large Language Model Planners

- Author: Allen Z. Ren et al.

- Publish Year: 4 Sep 2023

- Review Date: Fri, Jan 26, 2024

- url: arXiv:2307.01928v2

Summary of paper

Motivation

- LLMs have various capabilities but often make overly confident yet incorrect predictions. KNOWNO aims to measure and align this uncertainty, enabling LLM-based planners to recognize their limitations and request assistance when necessary.

Contribution

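- KNOWNO is built on the theory of conformal prediction, which it uses to give a statistical guarantee on task success while keeping requests for help to a minimum.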

Some key terms

Ambiguity in NL

- Moreover, natural language instructions in real-world environments often contain a high degree of ambiguity inherently or unintentionally from humans, and confidently following an incorrectly constructed plan could lead to undesirable or even unsafe actions.

- e.g., an ambiguous instruction can match multiple trajectories

- Previous works either

- do not seek clarification, or

- do so via extensive prompting

The issue of extensive prompting

- it requires careful prompt engineering

Formalize the challenge

(i) calibrated confidence: the robot should seek sufficient help to ensure a statistically guaranteed level of task success specified by the user, and

(ii) minimal help: the robot should minimize the overall amount of help it seeks by narrowing down possible ambiguities in a task.
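In conformal-prediction terms, goal (i) can be read as a coverage bound. This is a sketch in notation assumed here for illustration: $\epsilon$ is the user-specified error level and $C(x)$ is the set of candidate plans kept for context $x$.

$$
\mathbb{P}\big(y_{\text{true}} \in C(x)\big) \ge 1 - \epsilon
$$

Goal (ii) then corresponds to keeping $|C(x)|$ as small as possible, since the robot only needs help when more than one plan remains in the set.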

Core method

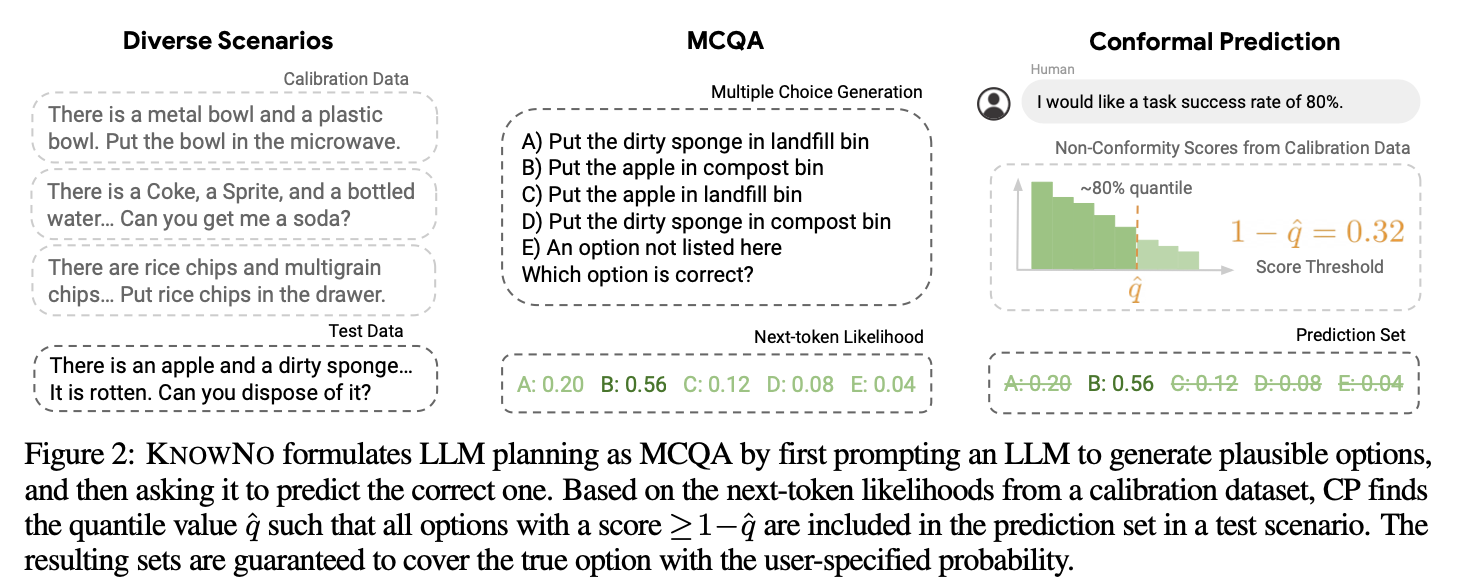

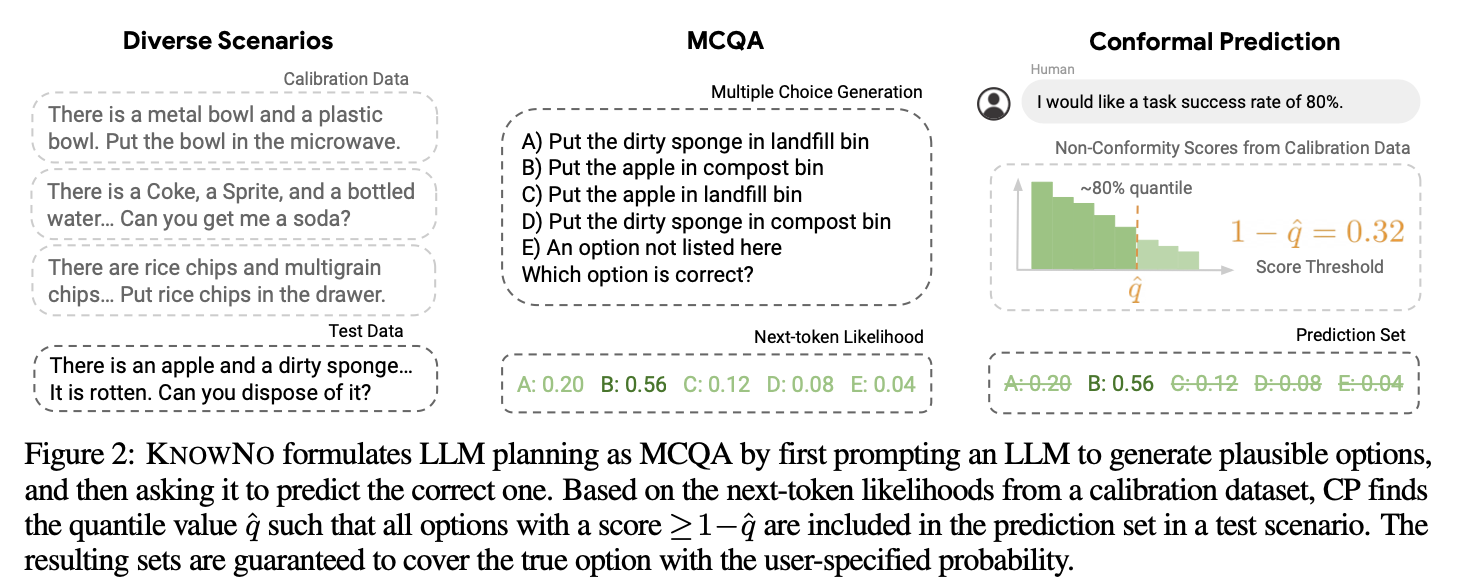

CP: conformal prediction

- use CP to select candidate options; the robot executes the action if only one option remains and asks for help otherwise

environment setting

- various types of potential ambiguities (e.g., based on spatial locations, numerical values, attributes of objects, and Winograd schemas).

- Winograd schemas

- solved by transformer already

Calibrated confidence

- Calibrated confidence implies that these confidence scores accurately reflect the likelihood that a particular prediction is correct.

robots that ask for help

- prediction set generation: use CP to choose a subset of candidate plans using LLM’s confidence in each prediction given the context.

Goal uncertainty alignment

- in the real world, language can be ambiguous

- so the key idea in this work is to weigh the confidence across multiple choices and ask for help when the model is not confident enough

Conformal Prediction

- We have a predefined set of plans, each labeled $y \in Y$. Conformal prediction returns a subset of $Y$ that, with the required confidence, contains the correct plan for the input $x$.

- When the subset contains more than one plan, a human is needed to pick the right one, so the method in this work tries to minimize the size of the subset.

- $\hat f (x)_{y}$ is the LLM confidence in each prediction $y$ given the context $x$, i.e., the LLM’s prior confidence.

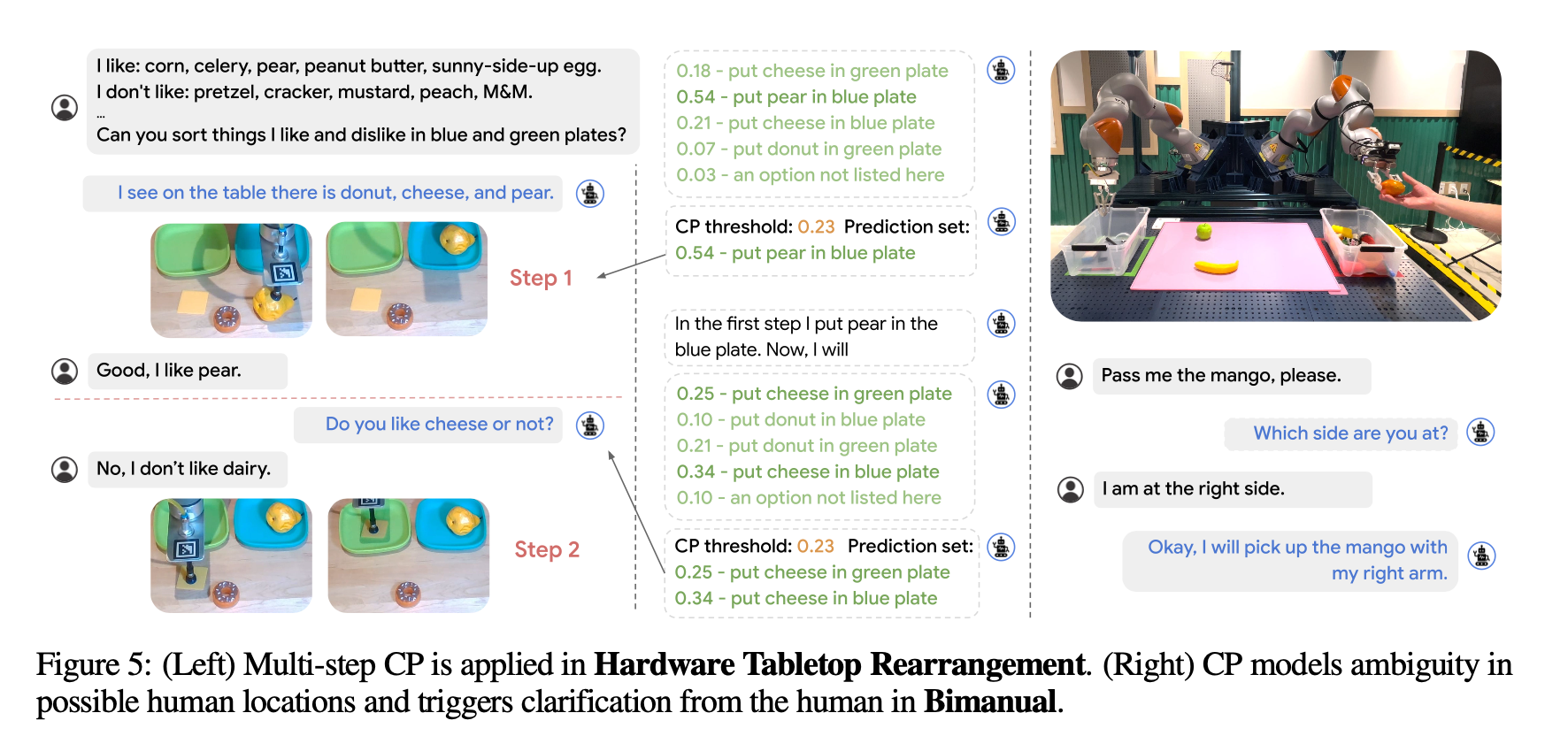
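The calibration and prediction-set steps described above can be sketched as follows. This is a minimal illustration of standard split conformal prediction, not the authors' code: the function names, the dictionary format for confidences, and the variable `epsilon` (the allowed error rate) are assumptions made for this example.

```python
import math


def calibrate_threshold(true_label_confidences, epsilon):
    """Return q_hat, the conformal quantile of the nonconformity scores.

    true_label_confidences: LLM confidence f(x)_y assigned to the correct
    plan for each calibration example (hypothetical input format).
    """
    # Nonconformity score: 1 minus the confidence in the true plan.
    scores = sorted(1.0 - c for c in true_label_confidences)
    n = len(scores)
    # Finite-sample corrected rank: ceil((n + 1) * (1 - epsilon)).
    rank = math.ceil((n + 1) * (1.0 - epsilon))
    if rank > n:
        # Too few calibration points for this epsilon: keep every option.
        return 1.0
    return scores[rank - 1]


def prediction_set(confidences, q_hat):
    """Keep every plan y whose confidence f(x)_y is at least 1 - q_hat."""
    return [y for y, c in confidences.items() if c >= 1.0 - q_hat]


def decide(confidences, q_hat):
    """Execute when exactly one plan survives; otherwise ask for help."""
    options = prediction_set(confidences, q_hat)
    if len(options) == 1:
        return ("execute", options)
    # Covers both several surviving plans and an empty set.
    return ("ask_for_help", options)
```

For example, with calibration confidences `[0.5, 0.75, 0.25]` and `epsilon = 0.25`, the threshold is `q_hat = 0.75`, so any plan with confidence of at least 0.25 stays in the set.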

Multi-step uncertainty alignments

- the i.i.d. assumption behind the coverage guarantee is no longer valid, because the context at each step depends on the robot's earlier actions
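One way to handle this, and as far as I recall the approach the paper takes, is to calibrate on whole sequences rather than single steps, scoring each sequence by its lowest step-wise confidence. The sketch below assumes that reading; the function names and input format are hypothetical.

```python
def sequence_confidence(step_confidences):
    """Sequence-level confidence: the minimum confidence over all steps."""
    return min(step_confidences)


def sequence_scores(calibration_sequences):
    """Nonconformity score per calibration sequence: 1 - min step confidence.

    calibration_sequences: one list per task, holding the LLM confidence in
    the correct plan at each step (hypothetical input format).
    """
    return [1.0 - sequence_confidence(seq) for seq in calibration_sequences]
```

The resulting scores can then be fed into the same quantile computation used for the single-step case, with each whole sequence treated as one calibration sample.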

Experiments

The paper introduces three settings based on different types of ambiguity in the user instruction:

- (1) Attribute (e.g., referring to the bowl with the word “receptacle”),

- (2) Numeric (e.g., under-specifying the number of blocks to be moved by saying “a few blocks”), and

- (3) Spatial (e.g., “put the yellow block next to the green bowl”, but the human has a preference over placing it at the front/back/left/right).

Results

Potential future work

Ask what kind of relationship a predicate should have with the action, in order to resolve ambiguity.