[TOC]

- Title: Anti Exploration by Random Network Distillation

- Author: Alexander Nikulin et. al.

- Publish Year: 31 Jan 2023

- Review Date: Wed, Mar 1, 2023

- url: https://arxiv.org/pdf/2301.13616.pdf

Summary of paper

Motivation

- despite the success of Random Network Distillation (RND) in various domains, it was shown as not discriminative enough to be used as an uncertainty estimator for penalizing out-of-distribution actions in offline reinforcement learning

- ?? wait, why we want to penalizing out-of-distribution actions?

Contribution

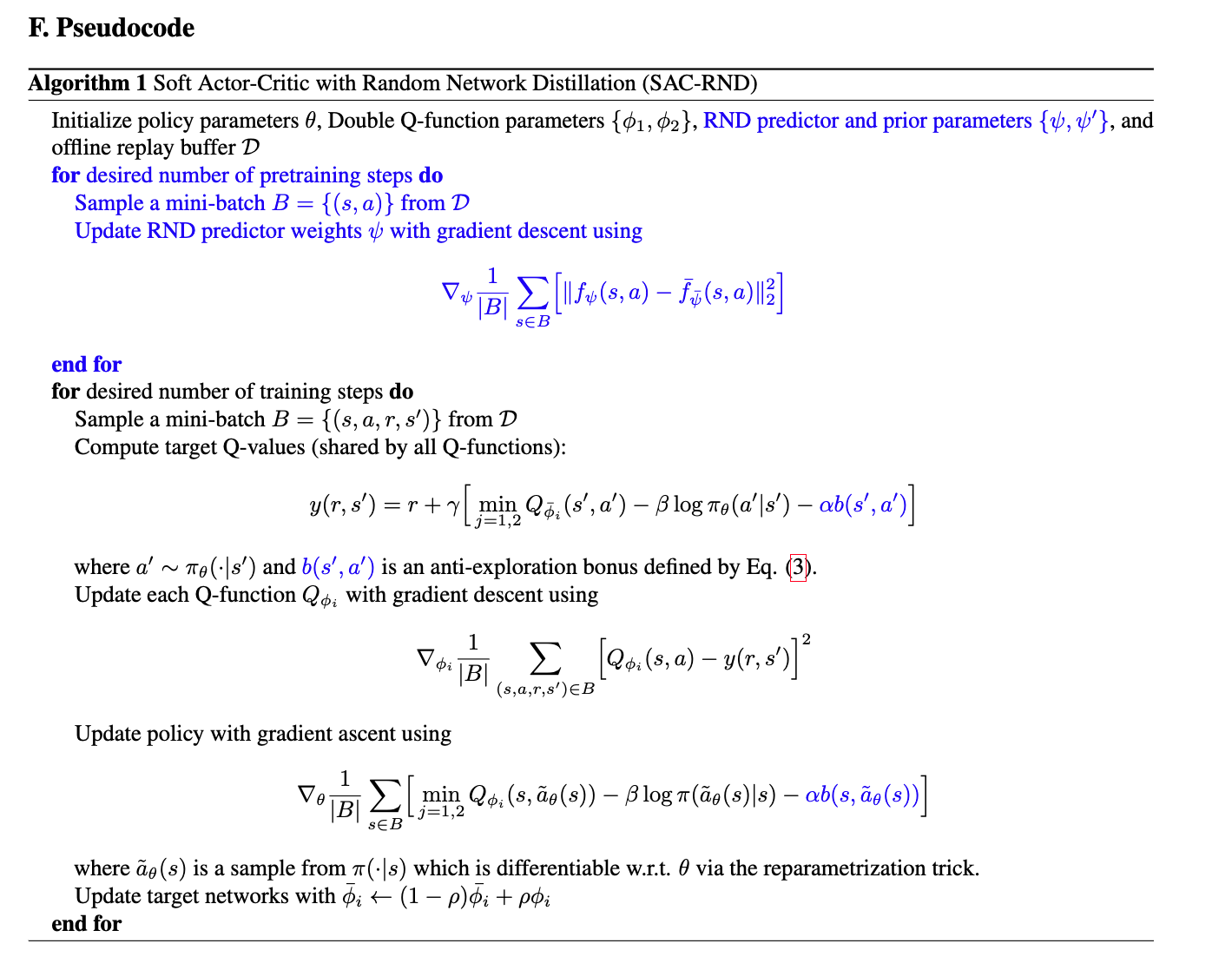

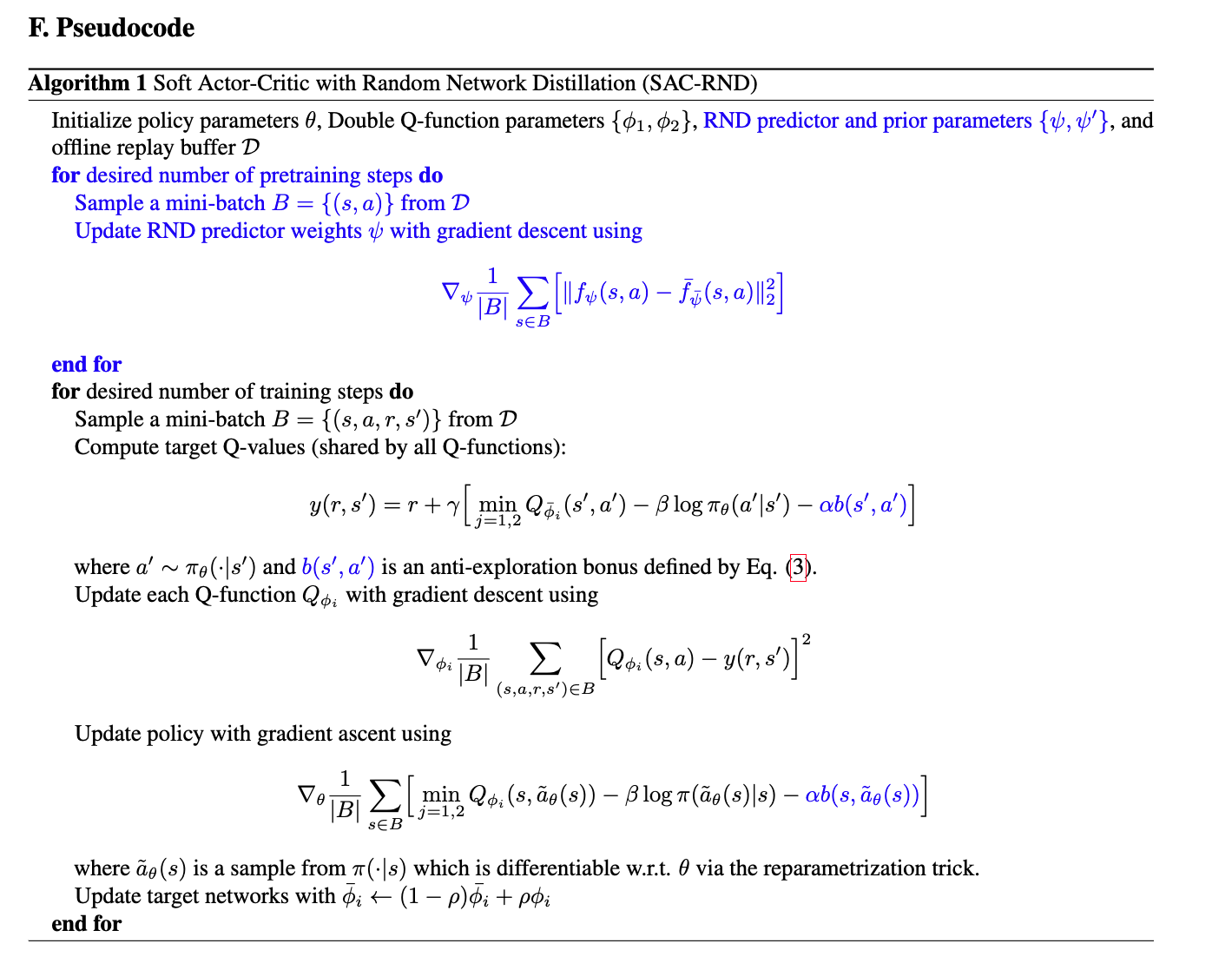

- With a naive choice of conditioning for the RND prior, it becomes infeasible for the actor to effectively minimize the anti-exploration bonus and discriminativity is not an issue.

- We show that this limitation can be avoided with conditioning based on Feature-wise Linear Modulation (FiLM), resulting in a simple and efficient ensemble-free algorithm based on Soft Actor-Critic.

Some key terms

why we want uncertainty-based penalization

- the use of ensembles for uncertainty-based penalization has proven to be one of the most effective approaches for offline RL.

what is ensemble-based algorithm

- such as SAC-N, EDAC (An et al., 2021), and MSG (Ghasemipour et al., 2022)

- build a group of Q-networks to solve the task

what is offline reinforcement learning

- In offline reinforcement learning, a policy must be learned from a fixed dataset D collected under a different policy or mixture of policies, without any environment interaction.

- this setting poses unique fundamental challenges, since the learning policy is unable to explore and has to deal with distributional shift and extrapolation errors for actions not represented in the training dataset.

Offline RL and Anti-Exploration

- There are numerous approaches for Offline RL, a substantial part of which constrain the learned policy to stay within the support of the training dataset, thus reducing or avoiding extrapolation errors.

- for our work, it is essential to understand how such a constraint can be framed as anti-exploration.

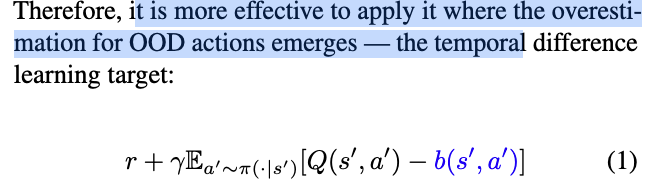

- Similarly to online RL, where novelty bonuses are used as additive intrinsic rewards for effective exploration, in offline RL, novelty bonuses can induce conservatism, reducing the reward in unseen state-action pairs. Hence the name anti-exploration, since the same approaches from exploration can be used, but a bonus is subtracted from the extrinsic reward instead of being added to it.