[TOC]

- Title: Theory Alignment via a Classical Encoding of Regular Bismulation 2022

- Author: Alex Coulter et. al.

- Publish Year: KEPS 2022

- Review Date: Wed, Nov 29, 2023

- url: https://icaps22.icaps-conference.org/workshops/KEPS/KEPS-22_paper_7781.pdf

Summary of paper

Motivation

- the main question we seek to answer is how we can test if two models align (where the fluents and action implementations may differ), and if not, where that misalignment occurs.

Contribution

- the work is built on a foundation of regular bisimulation

- found that the proposed alignment was not only viable, with many submissions having “solutions” to the merged model showing where a modelling error occurs, but several cases demonstrated errors with the submitted domains that were subtle and detected only by this added approach.

Some key terms

Bisimulation

- loosely defined, a bisimulation is a correspondence between two transition system such taht labeled transitions between two systems coincide: if two nodes (one from each system) coincide, then the reachable nodes in each system also coincide (following the labeled transitions)

- regular bisimulation

- the labels of the transitions are presumed to be the same, as well as the reachable state space (predicates?)

Merge

- we merge them. This merged domain retains the original types, action names/parameters, objects and constants (assumed to be the equivalent in both of the original models)

- fluents and initial states are merged

- finally, failure actions are introduced that represent a potential misalignment between the two models.

- as long as one of these failure action can be executed, the two theories do not align (measuring the ‘false negative’)

Alignment specification Assumptions

- types: we assume that the typing for each of the two models are precisely the same. This is required in order to adequately handle the action parameter space.

- objects / constants: Similarly, we assume that the objects and constants, including the types they adopt, are precisely the same between the two models. Moreover, we assume that both of the models use these objects in the same way.

- Action Names & Parameters: the space of full ground action specification must coincide. Thus, making sure the same action names and :paramaters definition is used in both of the models under consideration.

- Initial States We assume that each model captures the same initial state semantically.

- meaning, same task

- Fluents: We do not assume that the fluents are aligned.

- meaning: we can have different predicate setting and thus different action precon and effect details

- Action Precondition and Effect:

- we do not assume there to be any syntactic equivalence between the action precondition / effect of the two models.

- this allows us to compare widely different encodings of the same real world system.

- given that the fluents are not assumed to be coincide, the effective application of the action will be analyzed through the alignment process

Encoding

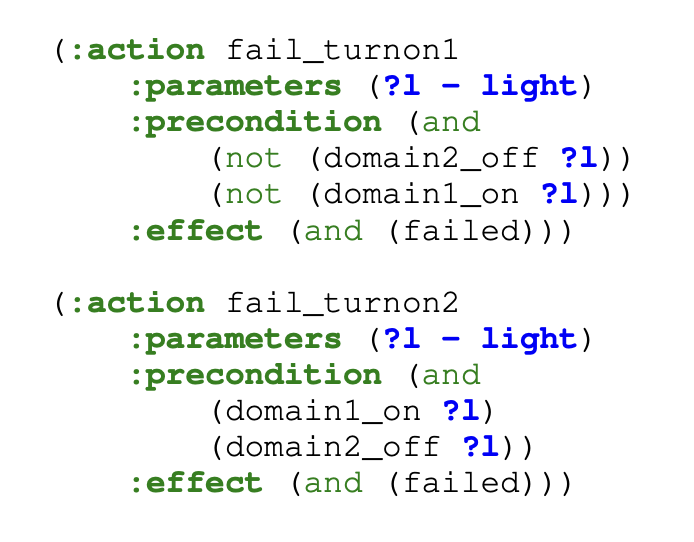

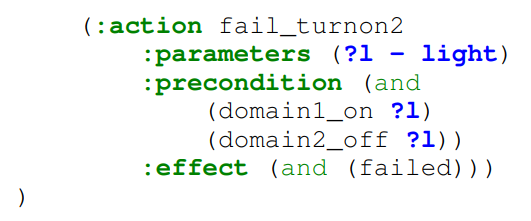

- every shared action has a pair of “failure actions” introduced that correspond to the situation where the action can be executed in the one model, but not the other.

- during the process, rename all of the predicates/fluents throughout both model so that they are unique: we prepend a prefix to every occurrence of a fluent (e.g., adding domain1_ or domain2_ to the start of every fluent name)

- failure action can only be executed in one model

Failed Goal

- if solution exists, it means that we can at least achieve some states in the precondition of some actions

- the only way to achieve the goal is for one of the fail actions to be executed, if this happens, it means there will be an action that give different semantic effects and eventually leads to contradiction in precondition section.

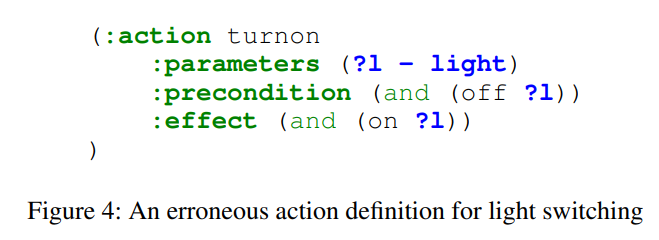

Error Example

- this is an erroneous action definition as it did not set

not (off ?l). (forgetting to delete a fluent.) The found plan for the merged domain is then(turnon light2)and then(fail_turnon2 light2).- this means the action was allowed to execute in the second domain, but not the first

- it is only through the analysis we present here that the misalignment between the two models is detected.

Diagnose a misalignment between models

- Failed action precondition. The most common source of error found is an error in precondition of the failed action. Thus, the first step in analyzing a plan is to contrast the precondition of act in both of the models, and see if there is mismatch in what is implemented

- Previous Action Effect: If the two models capture act similarly, then the next most common issue leading to misalignment is a previous action in the plan. Typically, candidate solutions that lead to a failure are short, and so the space of actions that must be considered is limited.

- it would be an action that directly influences the failed action act (through its preconditions)

- Initial State: the most rare source of errors is a misalignment in the initial state implementation.

- this source of error can be seen as analogous to the “Previous Action Effect” Errors, when one views the initial state as the effects of a single action at the start of a plan.

Precondition Satisfaction

- Applicability, by its very definition, is concerned with precondition satisfaction

How planner is involved in this work

- to decide if they are indeed regular bisimilar

- if they are not, a candidate explanation as to why in the form of a sequence of actions

- if

(fail_turnon2 light2)is in the plan, then check the correctness ofturnon2

- if

Evaluation

- there is an assignment task where the implementation involved devising (1) the fluents for the domain; (2) the preconditions and effects for 4 actions (move, pickup, drop, unlock; (3) the initial and goal states for 3 described problems

Other grading indicators

- other planning oriented functionality included

- running student domain/problem files to generate plans

- validating student-found plans with the reference model

- validating reference plans with the student model

- align to a model which include the most common error

- in the end, we can find the following errors

- plan-based errors

- validation errors (the plan from one model should be executable and valid in the other model)

- alignment error (the aligning method in this paper)

Good things about the paper (one paragraph)

- There is a rich theory behind what is being analyzed – plans in the merged model effectively provide us with a proof of contradiction to the two models being a regular bismulation ([Milner 1990](Milner, R. 1990. Operational and Algebraic Semantics of Concurrent Processes. In van Leeuwen, J., ed., Handbook of Theoretical Computer Science, Volume B: Formal Models and Semantics, 1201–1242. Elsevier and MIT Press))

Major comments

- They discuss the (mainly qualitative) results in Section 4.