[TOC]

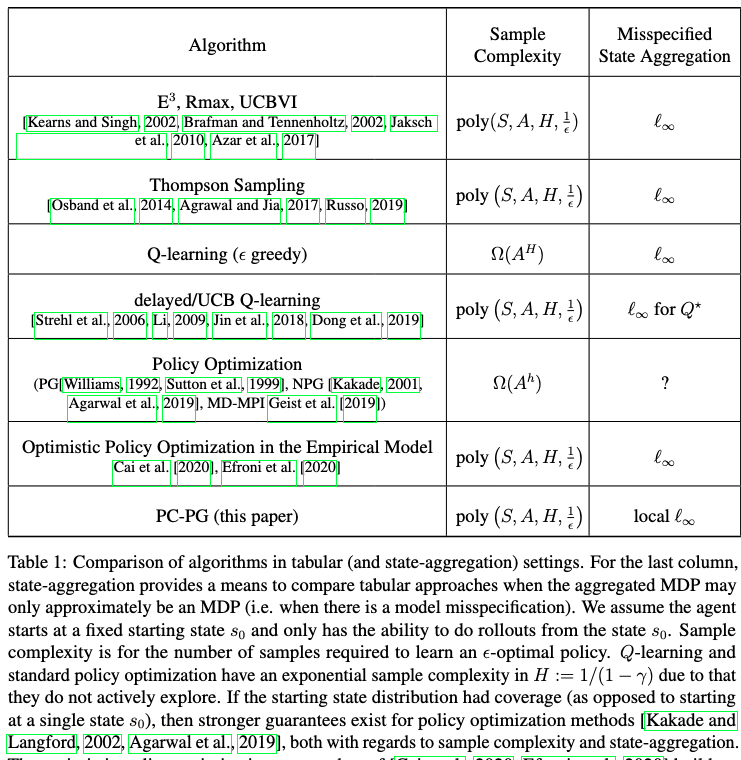

- Title: PC-PG: Policy Cover Directed Exploration for Provable Policy Gradient Learning

- Author: Alekh Agarwal et al.

- Publish Year:

- Review Date: Wed, Dec 28, 2022

Summary of paper

Motivation

- The primary drawback of direct policy gradient methods is that, by being local in nature, they fail to adequately explore the environment.

- In contrast, model-based approaches and Q-learning handle exploration directly through the use of optimism.

Contribution

- Policy Cover-Policy Gradient (PC-PG): a direct, model-free policy optimisation approach that addresses exploration through a learned ensemble of policies, which together provide a policy cover over the state space.

- The learned policy cover addresses exploration, and also addresses the "catastrophic forgetting" problem in policy gradient approaches (which use reward bonuses).

- The algorithm is on-policy, in contrast to approaches where approximation errors due to model misspecification amplify (see [Lu et al., 2018] for discussion).

Some key terms

suffering from sparse reward

- The assumptions in prior policy gradient analyses imply that the state space is already well explored. Without such coverage (and, say, with sparse rewards), policy gradients often suffer from the vanishing gradient problem.

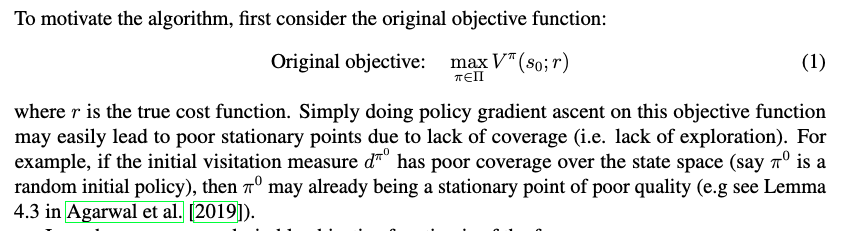
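To make the vanishing gradient point concrete, here is a minimal sketch under illustrative assumptions that are not taken from the paper: a chain where reward is obtained only by choosing "right" at every one of `horizon` steps, and a two-action softmax policy that picks "right" with probability `p_right` at each step. The function name and parameters are hypothetical. The expected return is then `p_right ** horizon`, and its gradient with respect to each "right" logit is that return times `1 - p_right`, so both shrink exponentially with the horizon.

```python
def sparse_chain_return_and_grad(horizon, p_right=0.5):
    """Expected return and per-logit gradient on a hypothetical sparse-reward chain.

    Reward 1 is received only if 'right' is chosen at all `horizon` steps.
    With a two-action softmax policy, d pi(right) / d logit_right = p (1 - p),
    so dJ / d logit_right at any step equals J * (1 - p_right).
    """
    expected_return = p_right ** horizon
    grad_per_logit = expected_return * (1.0 - p_right)
    return expected_return, grad_per_logit


# Illustrative horizons (arbitrary choices, not from the paper).
for h in (5, 10, 20, 40):
    j, g = sparse_chain_return_and_grad(h)
    print(f"horizon={h:3d}  return={j:.3e}  grad per logit={g:.3e}")
```

With a near-uniform initial policy, the gradient signal is therefore exponentially small in the horizon unless the starting distribution already covers states close to the reward.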

original objective function and coverage of state space

wider coverage objective

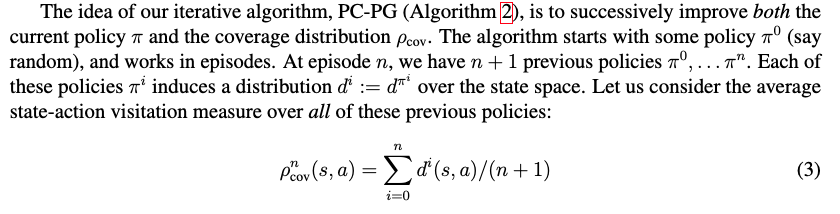

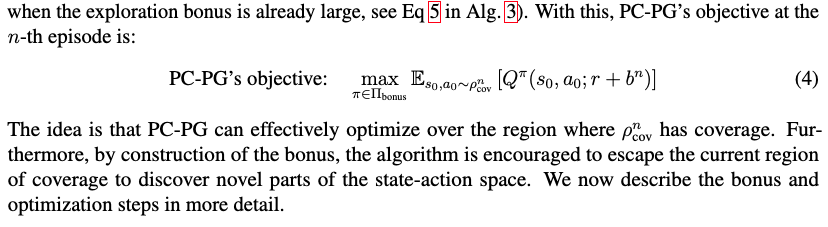

iterative algorithm PC-PG

- the idea is to successively improve both the current policy $\pi$ and the coverage distribution

- the algorithm starts with some policy $\pi^0$, and works in episodes.

- in each episode $n$, a bonus $b^n$ is added to the reward in order to encourage the algorithm to find a policy $\pi^{n+1}$ which covers a novel part of the state-action space
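The loop described above can be sketched on a small tabular problem. This is a rough illustration under several assumptions, not the paper's implementation: the chain MDP, the function names, the coverage `threshold`, and the indicator-style bonus scaled by `1 / (1 - gamma)` are all hypothetical choices, visitation is computed exactly from a known transition model, and value iteration stands in for the policy gradient update. It only shows the bookkeeping: keep a cover of past policies, place a bonus where the cover has little mass, then optimise reward plus bonus.

```python
import numpy as np


def chain_mdp(n_states):
    """Hypothetical chain: action 0 resets to state 0, action 1 steps right."""
    P = np.zeros((n_states, 2, n_states))
    for s in range(n_states):
        P[s, 0, 0] = 1.0
        P[s, 1, min(s + 1, n_states - 1)] = 1.0
    return P


def visitation(P, policy, gamma, start=0, steps=200):
    """Discounted state-action visitation of `policy`, truncated at `steps`."""
    state_dist = np.zeros(policy.shape[0])
    state_dist[start] = 1.0
    d = np.zeros(policy.shape)
    for t in range(steps):
        sa = state_dist[:, None] * policy            # mass on each (s, a) at step t
        d += (1 - gamma) * gamma**t * sa
        state_dist = np.einsum("sa,sat->t", sa, P)   # push mass through the model
    return d


def plan(P, reward, gamma, iters=200):
    """Value iteration on the known model (stand-in for the policy gradient step)."""
    q = np.zeros(reward.shape)
    for _ in range(iters):
        q = reward + gamma * P @ q.max(axis=1)
    policy = np.zeros(reward.shape)
    policy[np.arange(reward.shape[0]), q.argmax(axis=1)] = 1.0
    return policy


def pc_pg_sketch(P, reward, gamma=0.9, episodes=6, threshold=1e-3):
    policy = np.zeros(reward.shape)
    policy[:, 0] = 1.0                               # pi^0: always reset
    cover = []
    for n in range(episodes):
        cover.append(visitation(P, policy, gamma))   # add pi^n to the cover
        rho_cov = np.mean(cover, axis=0)             # uniform mixture over the cover
        bonus = (rho_cov < threshold) / (1 - gamma)  # b^n on poorly covered (s, a)
        policy = plan(P, reward + bonus, gamma)      # pi^{n+1}
    return policy, rho_cov


P = chain_mdp(5)
reward = np.zeros((5, 2))
reward[4, 1] = 1.0                                   # sparse reward at the far end
final_policy, rho_cov = pc_pg_sketch(P, reward)
print(np.round(rho_cov, 3))
print(final_policy.argmax(axis=1))
```

Because the planner here already knows the transition model, the sketch does not demonstrate sample-based exploration. What it does show is how the bonus steers each new policy toward state-action pairs the mixture has not yet reached, and how the bonus disappears once the cover is wide enough.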

Potential future work

- Exploration here essentially means that the training trajectories need good state coverage, so that convergence to the optimum can be guaranteed.