[TOC]

- Title: Exploring the Limitations of Using LLMs to Fix Planning Tasks

- Author: Alba Gragera et al.

- Publish Year: 2023 (ICAPS 2023, KEPS workshop)

- Review Date: Wed, Sep 20, 2023

- url: https://icaps23.icaps-conference.org/program/workshops/keps/KEPS-23_paper_3645.pdf

Summary of paper

Motivation

- The authors present ongoing work exploring the limitations of LLMs on a task that requires reasoning and planning competence: assisting humans in fixing planning tasks.

Contribution

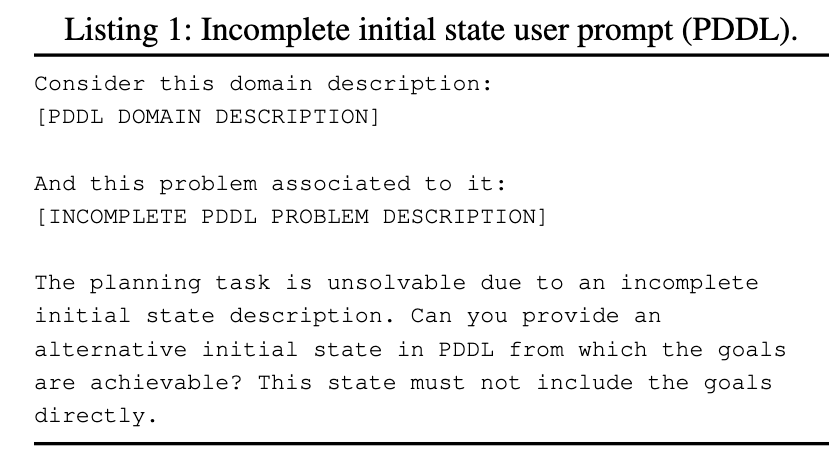

- They investigate how well LLMs repair planning tasks when the prompt is given in PDDL versus in natural language.

- They test two kinds of flawed tasks: tasks with an incomplete initial state, and tasks with an incomplete domain in which an action lacks an effect needed to achieve the goals.

- In all cases, the LLM is used stand-alone, and the authors directly assess the correctness of the solutions it generates.
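To make the two kinds of flawed tasks concrete, here is a minimal sketch in Python. It assumes a STRIPS-style encoding instead of the PDDL used in the paper, and the toy domain (`pick-key`, `open-door`, `move-a-b`) and the `Action`/`solvable` helpers are hypothetical illustrations, not taken from the paper. The task is solvable as written, but dropping the initial fact `key-at-a` or removing the `has-key` add effect of `pick-key` makes it unsolvable.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A grounded STRIPS action (hypothetical stand-in for a PDDL action)."""
    name: str
    pre: frozenset
    add: frozenset
    delete: frozenset = frozenset()


def solvable(init, goal, actions):
    """Return True if breadth-first search reaches a state containing every goal atom."""
    start = frozenset(init)
    frontier, seen = deque([start]), {start}
    while frontier:
        state = frontier.popleft()
        if goal <= state:
            return True
        for a in actions:
            if a.pre <= state:  # action is applicable
                nxt = (state - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append(nxt)
    return False


# Hypothetical toy domain, not from the paper: fetch a key, open a door, walk through.
PICK_KEY = Action("pick-key", frozenset({"at-a", "key-at-a"}), frozenset({"has-key"}), frozenset({"key-at-a"}))
OPEN_DOOR = Action("open-door", frozenset({"has-key"}), frozenset({"door-open"}))
MOVE = Action("move-a-b", frozenset({"at-a", "door-open"}), frozenset({"at-b"}), frozenset({"at-a"}))
ACTIONS = [PICK_KEY, OPEN_DOOR, MOVE]
INIT = frozenset({"at-a", "key-at-a"})
GOAL = frozenset({"at-b"})

# Flaw 1: incomplete initial state (the key's location is missing).
INCOMPLETE_INIT = INIT - {"key-at-a"}
# Flaw 2: incomplete domain (pick-key lacks its has-key effect).
BROKEN_ACTIONS = [Action("pick-key", PICK_KEY.pre, frozenset(), PICK_KEY.delete), OPEN_DOOR, MOVE]

print(solvable(INIT, GOAL, ACTIONS))             # True
print(solvable(INCOMPLETE_INIT, GOAL, ACTIONS))  # False
print(solvable(INIT, GOAL, BROKEN_ACTIONS))      # False
```

In both flawed variants no action sequence reaches `at-b`, which is exactly the situation the LLM is asked to repair.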

Conclusion: they show that although LLMs can in principle facilitate iterative refinement of PDDL models through user interaction, their limited reasoning abilities make them insufficient for identifying meaningful changes to ill-defined planning models that result in solvable planning tasks.

Some key terms

inherent limitations of LLMs

- LLMs learn statistical patterns to predict the most probable next word or sentence. Recent evidence strongly suggests that current LLMs perform poorly on tasks requiring planning capabilities, such as plan generation, plan reuse, or replanning.

- However, their web-scale knowledge and their usefulness as code assistants (Feng et al. 2020) suggest that they could assist users during the formalization phase.

challenges of fixing domains

- Fixing a planning task when the error lies in the domain model is non-trivial because of the large number of potential changes to the set of actions (Lin and Bercher 2021).
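A back-of-the-envelope count shows how quickly the space of candidate domain changes grows. The sketch below assumes a simplified edit model that is not necessarily the one of Lin and Bercher (2021): a single edit toggles one ground atom in the precondition, add-effect, or delete-effect list of one action. The function name, its parameters, and the example sizes are hypothetical.

```python
from math import comb


def num_candidate_repairs(num_actions: int, num_atoms: int, max_edits: int) -> int:
    """Count non-empty sets of at most `max_edits` distinct single edits.

    Assumed edit model (illustrative only): one edit toggles one ground atom
    in the precondition, add-effect, or delete-effect list of one action.
    """
    single_edits = 3 * num_actions * num_atoms
    return sum(comb(single_edits, k) for k in range(1, max_edits + 1))


# Hypothetical sizes: 4 ground actions over 10 ground atoms.
for k in (1, 2, 3):
    print(k, num_candidate_repairs(num_actions=4, num_atoms=10, max_edits=k))
# 1 120
# 2 7260
# 3 288100
```

Even this tiny model yields 288,100 candidate edit sets of size at most 3, and each one would need a planner call to check whether it makes the task solvable.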

Observations

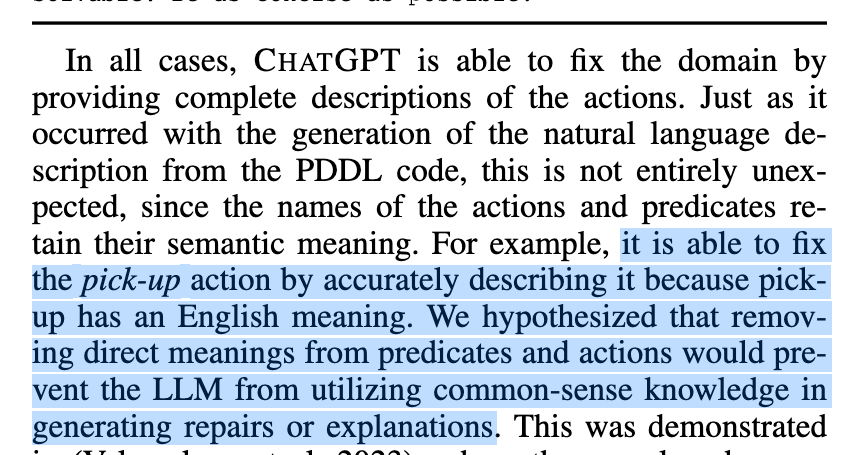

- ChatGPT is better at repairing missing initial-state facts than at repairing flawed action definitions.

- Errors in action definitions are very hard to repair (solvable rate: 3/20).

- The authors found that describing the task in natural language can help LLMs find bugs.

- Comment: however, this requires extra effort to convert the natural-language description back to PDDL, and that conversion can itself be error-prone.

Good things about the paper (one paragraph)

- Very useful experimental results.

Incomprehension

- I think the authors could put more effort into refining their prompts so that GPT produces better results (though they list this as future work).

Potential future work

- A useful insight of this paper is that it identifies two important categories of common PDDL errors: 1) missing initial-state facts and 2) ill-defined action definitions. We could develop ad-hoc modules targeting each of them.
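One way to prototype such modules is a brute-force search that proposes single-edit repairs for each category and keeps those that make the task solvable. This is a hypothetical sketch, not a method from the paper: it uses a STRIPS-style encoding, a toy domain, and breadth-first search in place of a real planner, and it skips atoms already in the goal to avoid the trivial fix of asserting the goal directly. All names (`repair_init`, `repair_effects`, the atoms and actions) are illustrative assumptions.

```python
from collections import deque
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Action:
    name: str
    pre: frozenset
    add: frozenset
    delete: frozenset = frozenset()


def solvable(init, goal, actions):
    """Breadth-first search over STRIPS states (stand-in for a real planner)."""
    start = frozenset(init)
    frontier, seen = deque([start]), {start}
    while frontier:
        state = frontier.popleft()
        if goal <= state:
            return True
        for a in actions:
            if a.pre <= state:
                nxt = (state - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append(nxt)
    return False


def repair_init(init, goal, actions, atoms):
    """Category 1: single atoms whose addition to the initial state makes the task solvable."""
    candidates = sorted(atoms - set(init) - goal)  # skip goal atoms (trivial fix)
    return [p for p in candidates if solvable(set(init) | {p}, goal, actions)]


def repair_effects(init, goal, actions, atoms):
    """Category 2: (action, atom) pairs whose new add effect makes the task solvable."""
    fixes = []
    for i, a in enumerate(actions):
        for p in sorted(atoms - a.add - goal):  # skip goal atoms (trivial fix)
            patched = list(actions)
            patched[i] = replace(a, add=a.add | {p})
            if solvable(init, goal, patched):
                fixes.append((a.name, p))
    return fixes


# Hypothetical toy domain, not from the paper.
ATOMS = {"at-a", "at-b", "key-at-a", "has-key", "door-open"}
GOAL = frozenset({"at-b"})
ACTIONS = [
    Action("pick-key", frozenset({"at-a", "key-at-a"}), frozenset({"has-key"}), frozenset({"key-at-a"})),
    Action("open-door", frozenset({"has-key"}), frozenset({"door-open"})),
    Action("move-a-b", frozenset({"at-a", "door-open"}), frozenset({"at-b"}), frozenset({"at-a"})),
]
# Flawed domain: pick-key lost its has-key add effect.
BROKEN = [replace(ACTIONS[0], add=frozenset())] + ACTIONS[1:]

print(repair_init({"at-a"}, GOAL, ACTIONS, ATOMS))
# ['door-open', 'has-key', 'key-at-a']
print(repair_effects({"at-a", "key-at-a"}, GOAL, BROKEN, ATOMS))
# [('pick-key', 'door-open'), ('pick-key', 'has-key')]
```

Solvability alone does not single out the intended fix: three different initial facts restore solvability in the first case, and `door-open` is accepted as an add effect of `pick-key` alongside the intended `has-key`. Choosing the meaningful change still needs domain knowledge or user input, which echoes the paper's point about identifying meaningful changes.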