Verification in LLM Topic 2024

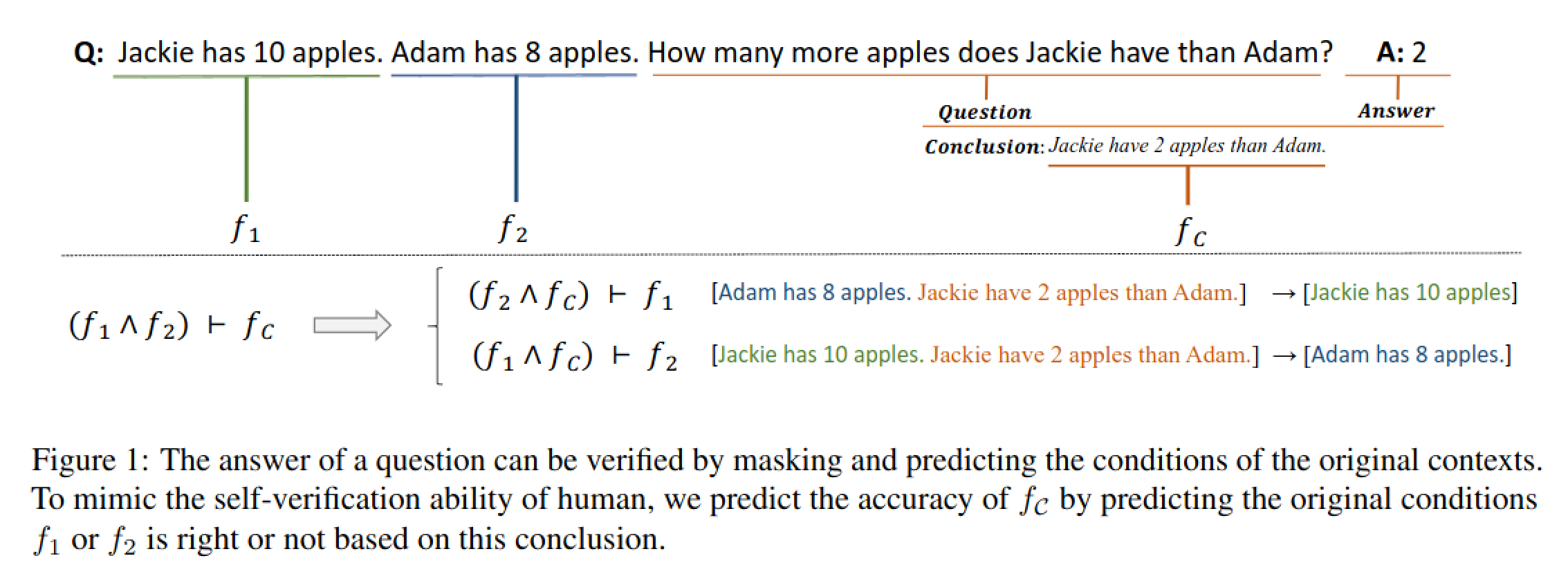

[TOC]

Review Date: Thu, Jun 20, 2024

Paper 1: Weng, Yixuan, et al. “Large language models are better reasoners with self-verification.” arXiv preprint arXiv:2212.09561 (2022).

The method improves CoT reasoning in two steps: Forward Reasoning and Backward Verification. In Forward Reasoning, the LLM reasoner generates candidate answers using CoT, and each candidate answer, combined with the question, forms a conclusion to be verified. In Backward Verification, we mask one of the original conditions and predict its value with another CoT, treating the candidate conclusion as given. Candidate conclusions are then ranked by a verification score, which is calculated by assessing the consistency between the predicted and original condition values.
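A minimal sketch of the ranking step in Python, assuming the verification score is simply the number of sampled backward predictions that recover the original (masked) condition value. `rank_candidates`, `toy_backward_cot`, and the apple word problem are hypothetical illustrations, not the paper's code; a real setup would replace the stub with several sampled backward CoT calls to an LLM.

```python
from typing import Callable, List, Sequence, Tuple


def rank_candidates(
    masked_question: str,
    original_value: float,
    candidates: Sequence[str],
    backward_cot: Callable[[str, str], List[float]],
    tol: float = 1e-6,
) -> List[Tuple[str, int]]:
    """Rank forward-reasoning candidates by a (hypothetical) verification score.

    backward_cot(masked_question, candidate) stands in for sampling several
    backward CoT chains that predict the masked condition, assuming the
    candidate answer is true. It returns the predicted condition values.
    """
    scored = []
    for cand in candidates:
        predictions = backward_cot(masked_question, cand)
        # Assumed score: count of backward predictions matching the original value.
        score = sum(1 for p in predictions if abs(p - original_value) <= tol)
        scored.append((cand, score))
    # Highest score first; sorted() is stable, so ties keep their input order.
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


def toy_backward_cot(masked_question: str, candidate: str) -> List[float]:
    # Hypothetical stub in place of an LLM: three "sampled" backward chains
    # solve X = answer - 5, and the third chain makes an arithmetic slip.
    answer = float(candidate)
    return [answer - 5, answer - 5, answer - 4]


# Original question: "Tom has 3 apples and buys 5 more. How many apples does he have?"
# The condition "3" is masked as X; the candidate answer becomes a known condition.
masked = "Tom has X apples and buys 5 more. He now has {answer} apples. What is X?"
ranked = rank_candidates(masked, original_value=3.0, candidates=["8", "9"],
                         backward_cot=toy_backward_cot)
print(ranked)  # [('8', 2), ('9', 0)]
```

Counting exact matches keeps the sketch simple; a candidate whose backward chains consistently reconstruct the masked value ranks higher, which is the consistency check described above.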